Mantic Monday 12/4/23

Multiple Alt-ernatives

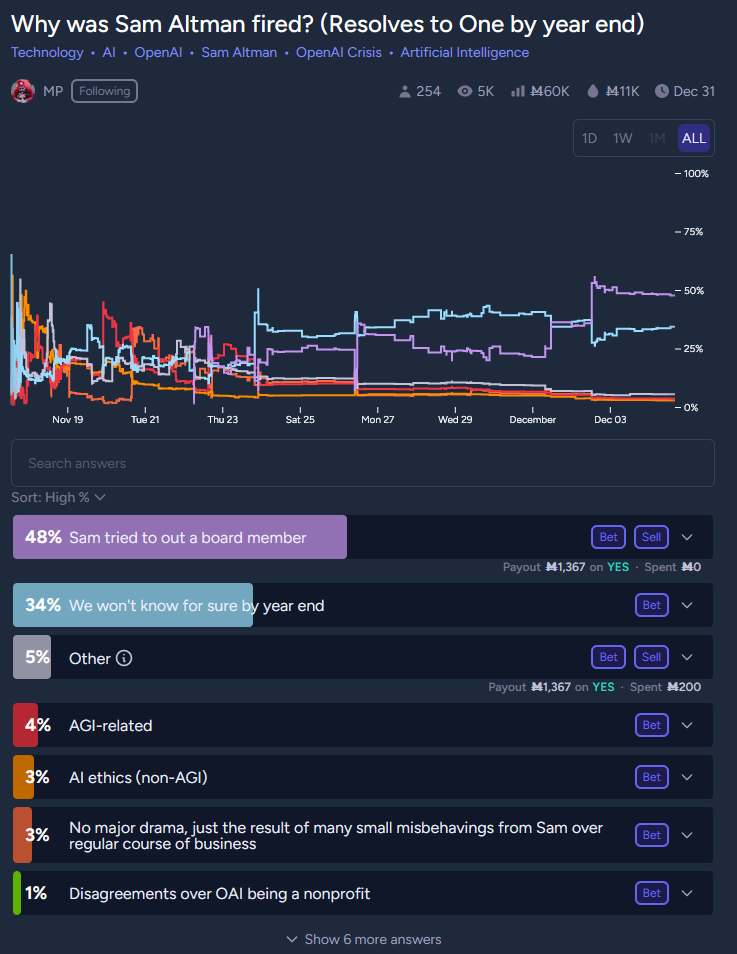

Source: Older version of this market.

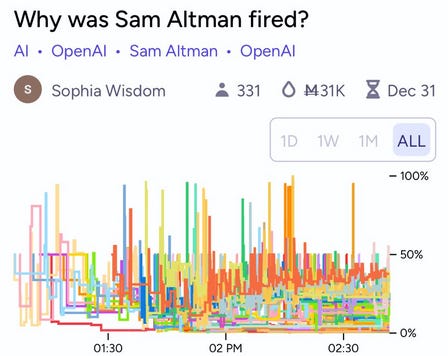

People joked about this graph showing how crazy the OpenAI situation was. The situation might have been crazy, but that’s not the lesson of this graph. The lesson is: it’s hard to design prediction markets for “why” questions.

The creator started by letting people add their own options. But users didn’t always check to see if their option already existed, or added options that were only slightly different from other options (eg “for being dishonest” vs. “for being manipulative”). The result was a free-for-all that told us nothing.

At some point the market cleared up - I don’t know if this was an intervention or people just converged on a few answers. Now it looks like this:

All five of the top answers (except “we won’t know”) tell basically the same story; the board thought Sam was manipulating them to get rid of Helen Toner.

The good: the market has reached a firm conclusion. The bad: it’s spread across multiple framings and the graph is meaningless. The creator has promised to resolve all true answers as true, but this incentivizes answer creators to be maximally vague (earlier someone put “Altman was not consistently candid in his communications”, the non-explanation the board gave - it was disqualified by fiat).

Probably the right way to do this is for the creator to pick a few mutually exclusive answers (including Other) and not let people add any more. But if the story drifts from where it was when the creator made the market, this might mean all active possibilities are lumped together in the Other category, making the market worthless. Either the creator can fix this themselves, or it’s time to make a new market.

Here’s another attempt at the same question:

Two things I like more about this market: first, the creator made mutually exclusive answers. Here we see much more clearly that everyone with a strong position thinks it’s because Sam tried to oust a board member, and not (for example) because the company discovered AGI or something else.

Second, the market “resolves to 1”. I think this means that if the creator believes that Altman’s firing was 90% due to ousting a board member, but 10% AGI related, they can assign those answers 90% and 10% credit respectively, and the people who bid them closest to 90% and 10% will profit most.
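To make the “resolves to 1” idea concrete, here is a minimal sketch of how partial-credit resolution could pay out. It assumes each YES share of an answer pays that answer's resolution weight, and that a trader bought at a single fixed price; the function names, prices, and share counts are all hypothetical, and this is not Manifold's actual market-maker math.

```python
def payout_per_share(resolution_weights):
    """Check that the weights sum to 1 and return what one YES share of each answer pays."""
    total = sum(resolution_weights.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("resolution weights must sum to 1")
    return dict(resolution_weights)


def profit(shares, price_paid, resolution_weight):
    """Profit on YES shares bought at price_paid (0..1) that resolve to resolution_weight."""
    return shares * (resolution_weight - price_paid)


# The 90% / 10% split from the text.
weights = {"ousting a board member": 0.9, "AGI related": 0.1}
payouts = payout_per_share(weights)

# Hypothetical trader A: 100 YES shares of the first answer, bought at 0.60.
gain = profit(100, 0.60, payouts["ousting a board member"])

# Hypothetical trader B: 100 YES shares of the second answer, bought at 0.30.
loss = profit(100, 0.30, payouts["AGI related"])

print(gain, loss)
```

Under these assumptions, buying an answer below its eventual weight makes money and buying above it loses money, so the market price is pushed toward the weight the creator is expected to assign.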

Here are some other (attempted) OpenAI related markets:

I appreciate how this started in September, shows Altman’s sudden-firing, the first plan to unfire him, the falling apart of the first plan to unfire, and then the second, successful plan to unfire him.

The only problem is that here it looks like the probability gradually declined from October to late November, whereas on the Manifold site itself it’s clear that it was steady during that time and then collapsed on the day he was fired. I think this is a bug in the embed.

There was a Reuters story that the firing was precipitated by a breakthrough in a model called Q\*, which had learned to do math. The market seems to think Q\* exists, but is not a breakthrough, and wasn’t involved in the firing.

Despite Ilya Sutskever’s apparent concerns about the company (and Altman’s likely concerns about Sutskever), the markets think he’ll stay on.

…but not continue to lead the Superalignment team? I’m confused by this; why wouldn’t he? Related:

This is an interesting mix of “what will happen?” and “what will a company claim happened?”

This was a rumor. I might have tried this if I found myself in charge of OpenAI, all my employees were about to leave, and I wanted to leverage the situation into some kind of improvement in AI safety (safetyists generally believe the fewer leading labs, the better). But the market seems to think they didn’t actually try it. Related:

Back when OpenAI was a nonprofit for the benefit of humanity, they agreed that once they got close to superintelligence, they would merge with any other labs that were also close to superintelligence (and willing to merge with them) so that everyone would be combining efforts to align superintelligence together (instead of competing in a race). This is in their charter, and the board (if the board remains aligned with the nonprofit mission) has to support it.

This would be pretty crazy if it ever happened, but the last board seemed to take their not-just-a-usual-corporation status seriously, and maybe the new board will too. “By end of 2026” takes this already-pretty-crazy plan and imports some even-crazier assumptions about how fast things go.

Still, I’m heartened to see people are still thinking about this and taking it seriously.

The creator clarifies: “I will resolve this market based on whether the board’s initial decision to fire Sam Altman set off a chain of events that ultimately reduced/increased AI risk. So if Sam returns to OpenAI, this market would NOT resolve as N/A.”

I’m not sure how to think about this. The (very strong) pessimistic case is that everything is back to square one, except that AI companies have been radicalized against EAs and safetyists.

The (weaker) optimistic case I can come up with is that, absent firing Sam, Sam would have succeeded in seizing control of the board and removing the nonprofit element, turning OpenAI into an unconstrained, full-speed-ahead for-profit company. Instead the board successfully kicked Altman off and rebooted with a new board that will have some safetyists or at least socially-minded nonprofit people. Maybe Jonas will choose to resolve by comparing this okay result to a worse counterfactual where Sam had seized total control of the board, and decide risk was reduced.

I think this is probably what the board thought they were doing. They did succeed at their end goal, but the big PR defeat outshone the ambiguous business victory. I don’t understand why the board didn’t put more work into PR, and I’m still curious about the full story.

The crisis ended with the appointment of three mutually agreeable board members (Larry Summers, Bret Taylor, Adam D’Angelo), who are supposed to seed a new full board to be picked later. I can’t find a market for who will be on this new board exactly. This one is for who will be on the board as of 1/1/24. Most forecasters don’t expect the new board to have been picked by then, but the few who do can give us some information about who they expect to be on it.

I’m most unsure about whether Adam D’Angelo is a committed safetyist. He hasn’t said so explicitly and isn’t openly linked to the safetyist movement. But he’s displayed a lot of awareness of the arguments, and he joined the coalition to fire Altman.

If D’Angelo is a safetyist, you could think of the compromise as follows: D’Angelo, a safetyist, picks two other safetyist board members. Bret Taylor, a profit-seeking Silicon Valley investor, picks two other profit-seeking Silicon Valley investors. And Summers, a random person who probably vaguely agrees with the idea of a nonprofit for the public good but doesn’t have strong ideological opinions, picks two other such people to be tiebreakers. This seems like the makings of a good mutually-agreeable solution.

Or it could have nothing to do with that. Maybe it wasn’t a Machiavellian factional decision at all. Bret Taylor was the head of the Twitter board that negotiated with Musk; people think he did a good job. He might just be “generic person who’s good at boards”. Larry Summers is also a generic person who’s probably good at boards. Adam D’Angelo could credibly claim to be the most moderate (neither explicitly an Altman pawn nor explicitly a safetyist) person on the last board, and if they were going for a board of moderates, he could be there to provide continuity.

Forecasters seem to lean toward the second hypothesis; at least I don’t see any big safety proponents on here (except Emmett, who I doubt is really being considered). Fei-Fei Li is an AI ethics person, but the kind who spends time sniping at alignment people even though they’re her natural allies and desperately want to help her. These people always do well for themselves, and I’ve bet her up. Most of the others are Silicon Valley businesspeople of one sort or another.

Oh, and I almost forgot:

Manifold Love: One Month Progress Report

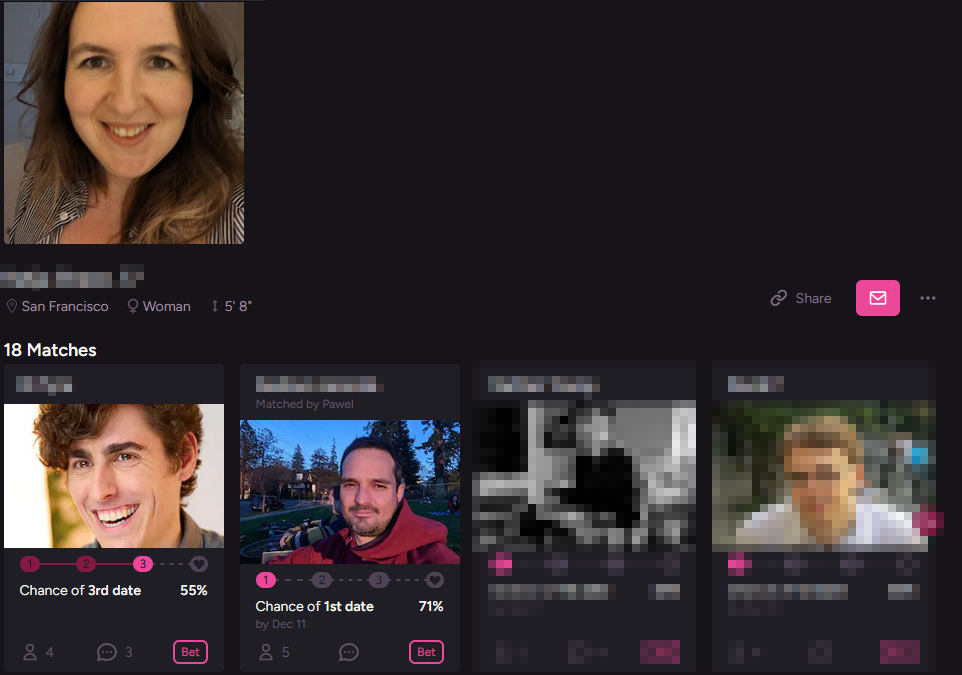

A month ago, Manifold founded a dating site, manifold.love. The idea is, you bet on who would be a good match, and make (play) money if they end up having a second date or continuing on to a relationship.

To my surprise, this not only hasn’t collapsed, but has attracted people outside the usual prediction market community:

This will be a total unfair stereotype, and you should feel free to yell at me for it, I just think usually prediction market junkies don’t have hair as stylish as the woman in Picture #3.

Are some of these people normies? Seems surprising, but I can’t rule it out. And there are hundreds of them!

And people seem to be using the betting functionality! Here’s James (the founder of the site, so I feel okay showing 50,000 people his dating profile as an example).

You can see that Austin (his co-founder) has proposed a match with the first woman on the left, and two people have bet that there’s a 16% chance they’ll go on a first date.

(I can see an argument for starting with the conditional - “if you go on a first date, you’ll like it enough to go on a second” - but the site prefers it this way, maybe as a way of saying “if you start a conversation, you’ll like each other enough to go on a first date”).

Does James only have all these matches because he’s the founder? Do ordinary people have friends who will put in this much work to play matchmaker (and bet on it)? My impression is that the Bay Area rationalist social scene members who all know each other well in real life have all matchmade their friends, and the normies are having worse luck. I can’t tell if this is because they don’t have friends on the site, or because they haven’t filled out their profile with any information for matchmakers to go off. There are some bots and Good Samaritan matchmakers gradually going through random people’s profiles and matching them up with each other, though not with any consistency.

All of this, so far, seems informal and motivated by enthusiasm. The market function barely works. For one thing, volume is so much lower than on regular-Manifold that it’s not worth money-seekers’ time; the market for James + the first woman in the picture has a total of ℳ32, compared to ℳ1,700,000 in the “why was Sam Altman fired” market cited above.

More important, there’s rampant insider trading. If you look more closely at the market for James + one of these women, 100% of YES shares are held by . . . the woman in the picture.

This actually might be Manifold.love’s killer app. I talked to a user who said their favorite thing about the site was the ability to low-key plausibly-deniably flirt with other users. You buy a couple YES shares in you + them. They see you’re interested and either buy a couple of YES shares themselves, or leave it alone, or buy some NO shares. Then if you both buy YES, you both keep bidding it up until whatever value makes you feel comfortable sending them an intro message.

It seems to be working . . .

…in that some people are already on predictions for their third date. What’s the prior for a two-date relationship reaching date three?

Also, I notice that the second man here gets a probability of 71%, even though both the woman and the man have bets on YES. Is this free money? I think you have to factor in the chance that two people who both want to date each other manage to schedule something before the December 11 deadline.

Manifold.love has also introduced OKCupid-style “compatibility questions”. They don’t seem to involve calculating a match percent yet AFAICT, but hopefully soon!

Metaculus’ “Multiple Major Advances”

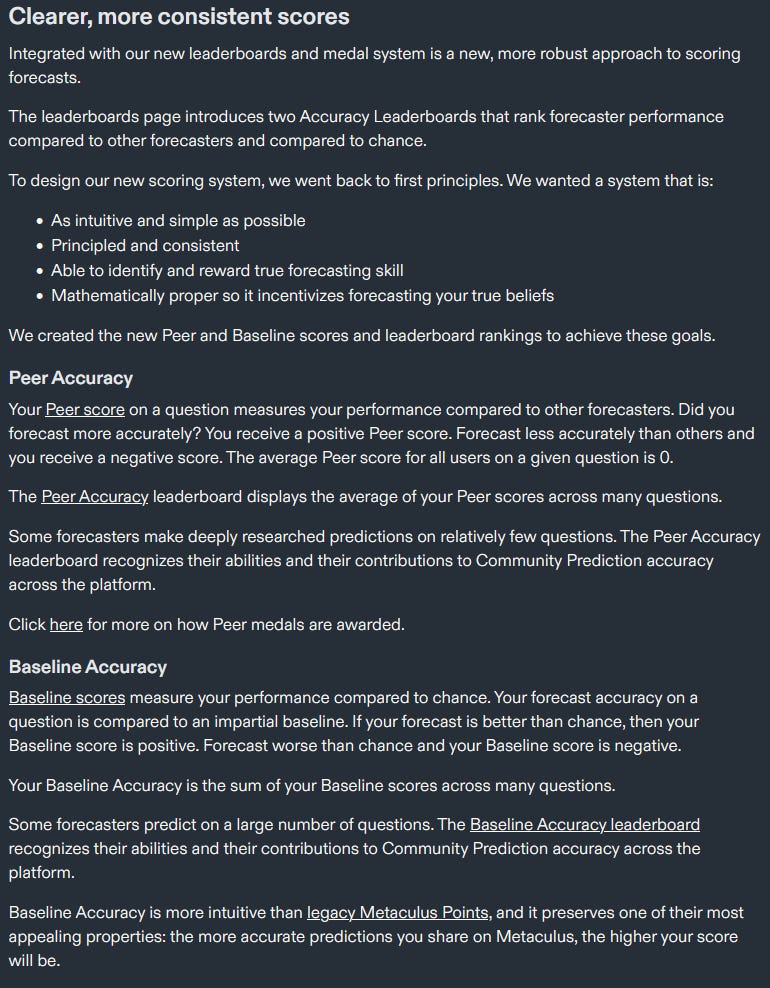

Metaculus announces “multiple major advances to the Metaculus platform”, especially “new scores, new leaderboard, new medals”.

New Scores

In 2021, I wrote about a controversy over Metaculus’ scoring rules:

Everyone agrees Metaculus’ scoring rule is “proper”, a technical term meaning that it correctly incentivizes you to choose the probability you think is true. Zvi and Ross’s objection is that it doesn’t correctly incentivize you about whether to bet at all, or how much effort to put into betting.

For example, on many questions, you can make guaranteed-positive bets - you’ll gain points on the prediction even if you were maximally wrong. If you were trying to maximize your Metaculus points, you would bet on all of these questions. If you were trying to maximize your Metaculus points in a limited amount of time, you might even bet on them without investigating at all. The person who spends one second picking a random number on a thousand questions will get more points than someone who spends an hour researching a really good answer to one question.

(the 2021 post includes some responses and reasons that this might not be as bad as it sounds; see here for details)
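For readers who want to see what “proper” means in practice, here is a small sketch. It uses the plain logarithmic score as a stand-in (an assumption on my part, not Metaculus' actual formula) and checks by grid search that the report with the best expected score is the probability you really believe. The function names are hypothetical.

```python
import math


def expected_log_score(reported, true_prob):
    """Expected log score of reporting `reported` when the event has probability `true_prob`."""
    return true_prob * math.log(reported) + (1 - true_prob) * math.log(1 - reported)


def best_report(true_prob, steps=99):
    """Grid-search reports 0.01, 0.02, ..., 0.99 for the highest expected score."""
    candidates = [i / (steps + 1) for i in range(1, steps + 1)]
    return max(candidates, key=lambda q: expected_log_score(q, true_prob))


# If you believe the event is 70% likely, honesty is the best policy.
best = best_report(0.7)
print(best)
```

This only illustrates the first half of the quoted argument: the rule rewards honest probabilities. It says nothing about whether to forecast at all, which is the part Zvi and Ross objected to.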

No scoring system can simultaneously (1) incentivize you to forecast as many questions as possible and (2) measure only your skill, not your free time. So in order to have its cake and eat it too, Metaculus has divided its score into two scores:

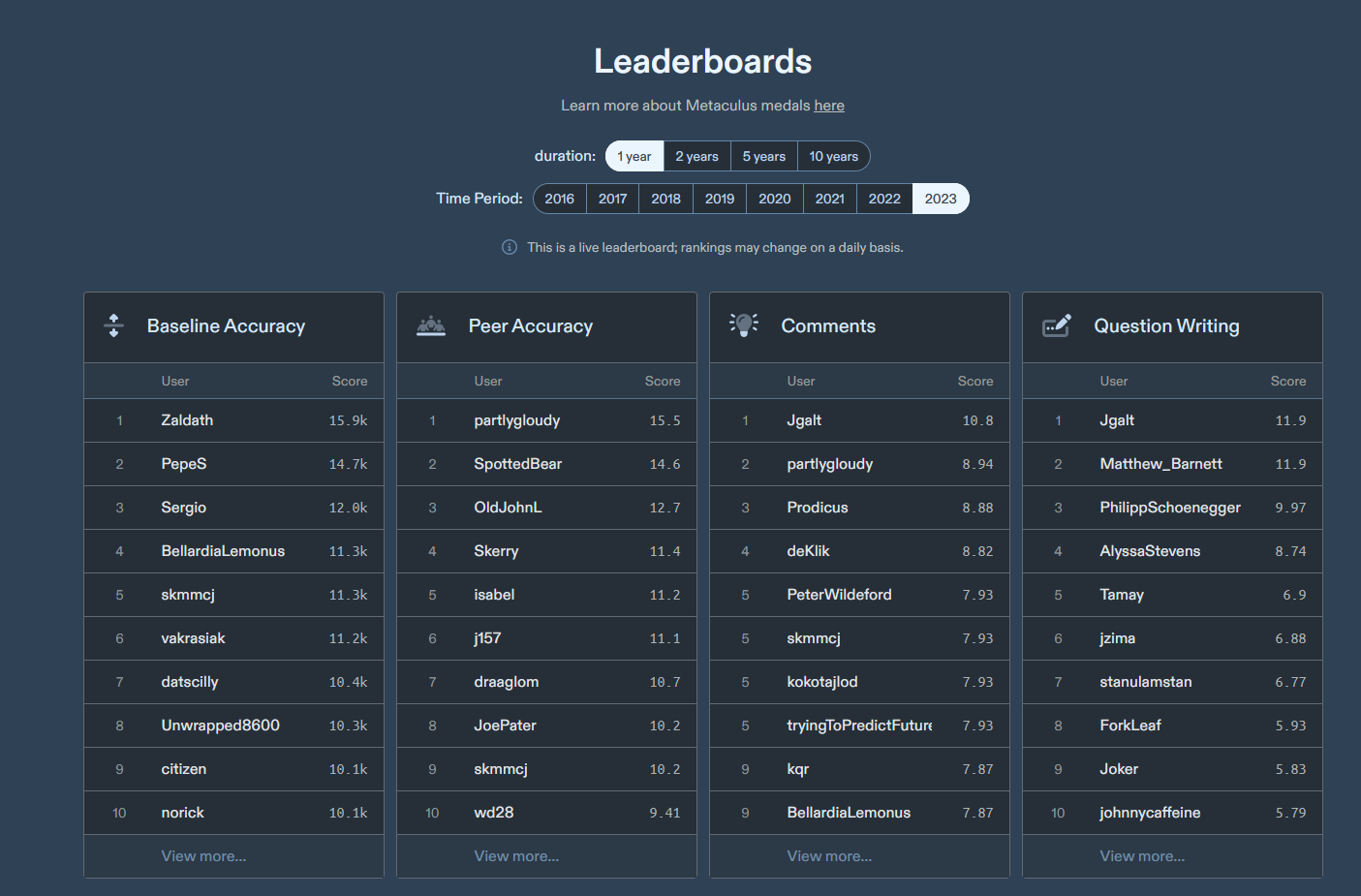

New Leaderboard

…looks like this:

The new Baseline Accuracy and Peer Accuracy scores have different leaderboards, and people are also rewarded for writing good questions and comments. The comments board is based on an h-index - most famous in measuring scientific publications. H-index equals 1 if you have at least 1 comment with 1 upvote, equals 2 if you have at least 2 comments with 2 upvotes, and so on. So does Jgalt have 10.8 comments with 10.8 upvotes? No - you can read about fractional h-index here (it’s pretty much what you’d expect - he might have 10 comments with 11 upvotes each and one with only 10 upvotes, so he’s doing better than h-index 10 but not quite at 11).
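The ordinary (integer) h-index described above is easy to sketch. The fractional version Metaculus uses is defined on their linked page rather than here, so this example deliberately stops at the integer case; the function name and the sample data are illustrative only.

```python
def h_index(upvotes):
    """Largest h such that at least h comments have at least h upvotes each."""
    ranked = sorted(upvotes, reverse=True)
    h = 0
    for rank, votes in enumerate(ranked, start=1):
        if votes >= rank:
            h = rank
        else:
            break
    return h


# The example from the text: ten comments with 11 upvotes each and one with only 10.
example = [11] * 10 + [10]
print(h_index(example))
```

On that example the integer h-index is 10: the eleventh comment would need 11 upvotes to push it to 11, which is why a fractional score lands somewhere in between.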

New Medals

This is what you’d expect - you get a medal for making it onto the leaderboard, including tournament-specific leaderboards. Top 1% of users get gold medals, top 2% get silver, and top 5% get bronze.

You can read more about recent Metaculus updates at the announcement page, the new scoring FAQ, and the new medals FAQ.

This Month In The Markets

Venezuela is threatening to annex (and invade) Guyana. Here’s what forecasters and markets think:

Related: in case you’re wondering how much Biden’s latest Venezuela deal is worth:

Speaking of South America, libertarian and dog-reincarnation-enjoyer Javier Milei won a shock victory in Argentina’s presidential election, setting the stage for profound economic reforms - if he can push them through:

This is a surprising discrepancy between two moderately active questions (top: Manifold; bottom: Metaculus). I can’t see any distinction in the resolution criteria that would explain this. (UPDATE: See comment from Jacob here)

Moving on to the Middle East:

The best realistic medium-term outcome I can imagine for the people of Gaza is as something like a West Bank without settlements and roadblocks. I don’t see them as getting independence (Israel won’t allow it medium-term). Hamas rule means perpetual blockade and intermittent warfare. But the West Bank has reached a stalemate where it’s at least somewhat not a prison. And Israel’s previous commitment not to do settlements in Gaza (if maintained) would make a West-Bank-style Gaza better off than the real West Bank.

It sounds like step one toward that goal would be for Israel to defeat Hamas, but what happens after that? An Israeli occupation would involve constant bloody resistance; I’m not convinced it would be any better for people on the ground than Hamas (though someone can try to convince me otherwise). Could PLO, UN, or some puppet state maintain a balance of being anti-Israel enough that the Gazans don’t immediately revolt, but not so anti-Israel that Israel keeps the blockade or represses the area too hard for anyone to live a normal life? It’s a tiny sliver of a chance for a barely-okay outcome, but it’s the main one I can think of.

Moving to something less depressing, here’s the ever-popular TIME Person Of The Year market:

Source: Polymarket

Source: Kalshi

Second one is out of order, but these basically agree. Why is Taylor Swift so high? I understand she’s a very famous pop star, but hasn’t she been an equally famous pop star every one of the past ten years?

Other Links

1: Last month, we talked about the CFTC (regulatory body) denying Kalshi’s request to have election questions on their prediction market. Kalshi is now suing the CFTC to reverse their decision, saying that “the contracts contain no unlawful acts prohibited under the Commodity Exchange Act and therefore, the CFTC has no power to block them”. I have to admit I’m surprised by this - I thought Kalshi was trying to cultivate good will with the CFTC, and this seems pretty adversarial. Also, if they win, what’s left of the CFTC’s ability to regulate prediction markets at all? Any regulation experts want to weigh in?

2: @AISafetyMemes and @betafuzz have made a graph of different people’s probability estimates of AI causing human extinction. I couldn’t confirm (or did disconfirm) a few of their sources, which I’ve indicated in red; they can contact me if they want to tell me I’m wrong:

Specifically: Paul said 50% of severe problems but only ~15% extinction. The “average AI engineer” number is from a survey with likely response bias. The extinction tournament numbers given in the original are for catastrophe, not extinction. I cannot find a source for the average American number - it doesn’t seem to be in the linked Rethink Priorities report. Let me know if you can find it.

3: New Metaculus tournaments opening, including respiratory illnesses and the Global Pulse Tournament (with $1500 in prizes).

4: Manifold might be planning a prediction market dating show - go here for more info or to sign up as a contestant. This has got to be a stunt by Aella, but that doesn’t necessarily mean it won’t be fun.