Mantic Monday 1/29/24

Do Prediction Markets Have An Election Problem?

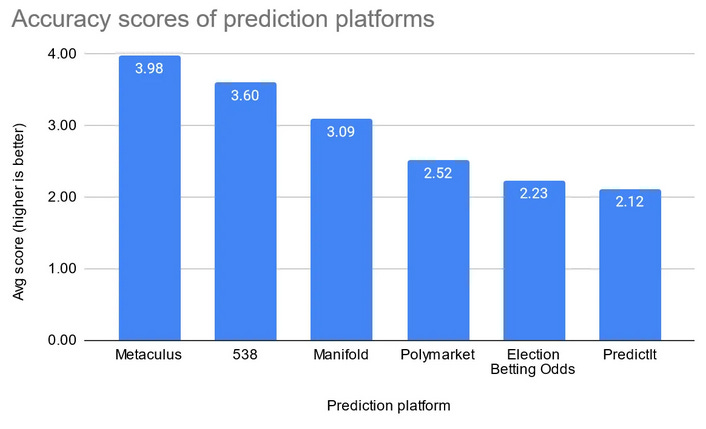

This is the claim of an article by Jeremiah Johnson in Asterisk Magazine. The key graphic is this:

Original source: First Sigma, who remind us that this is just one election cycle and we shouldn’t update too hard on it.

The two real-money prediction markets get 4th and 6th place.

And Johnson chronicles other problems with them. For example, long after the media called the 2020 election for Biden, PredictIt held on to a ~9% chance of a Trump landslide so massive it could not possibly have occurred even if he won all of his voting fraud challenges. Betting sites favored Biden to win all swing states, but Trump to win overall. The whole thing was a mess.

Johnson makes a pretty reasonable guess about the cause: lots of dumb money. People use their pocketbooks to root for their favorite candidate. Normally in a functioning market smart money would take the other side and set the final price, but the high transaction costs, long waits, and regulatory limits on prediction markets mean it’s not generally worth smart money’s time to correct the mispricing; Goldman Sachs isn’t going to hire statistics PhDs to make a model just so they can bet $850 on PredictIt.

All of this is true, so let me say a few words in prediction markets’ defense.

First of all, my allegiance has always been to forecasting in general, of which prediction markets are just a particularly flashy sub-category. So I find it encouraging that forecasting site Metaculus beats 538, usually considered the gold standard for political prediction.

I also find it encouraging that the play-money prediction market site Manifold comes pretty close and beats all the real-money sites. Nate Silver is only one person, he has only one area of expertise, and you can’t hire him to predict random things for you (unless you’re rich and he’s bored). If Manifold can apply only-slightly-sub-Nate-Silver levels of analysis at scale to arbitrary topics, that’s a big deal.

As for the real-money prediction markets, yeah, they seem worse than other options. But solar power was worse than other options in 1990. They’re a fledgling technology, we have strong reasons to think they’ll work when they’re mature, and we know what we need to do to help them grow. Unlike 538, Metaculus, and play-money markets, they have bias-resistance properties that could be really useful if they ever get big.

So one way to think of this is that non-market forecasting systems will outperform market systems when the markets are small and immature, but we might expect this to change as they get bigger. If that’s true, Johnson reminds us we’re not there yet. You can find further discussion of the article on r/slatestarcodex and Hacker News.

Asterisk is now releasing its issues piecemeal; along with Johnson on prediction markets, Issue Five includes articles on airline safety, PEPFAR, developing-world democracy, and a review of Going Infinite.

[EDIT: Maxim Lott says this was a 2022-specific problem, and overall markets have tied 538]

Is Trump On Top?

The latest round of polls shows voters preferring Trump to Biden in 2024:

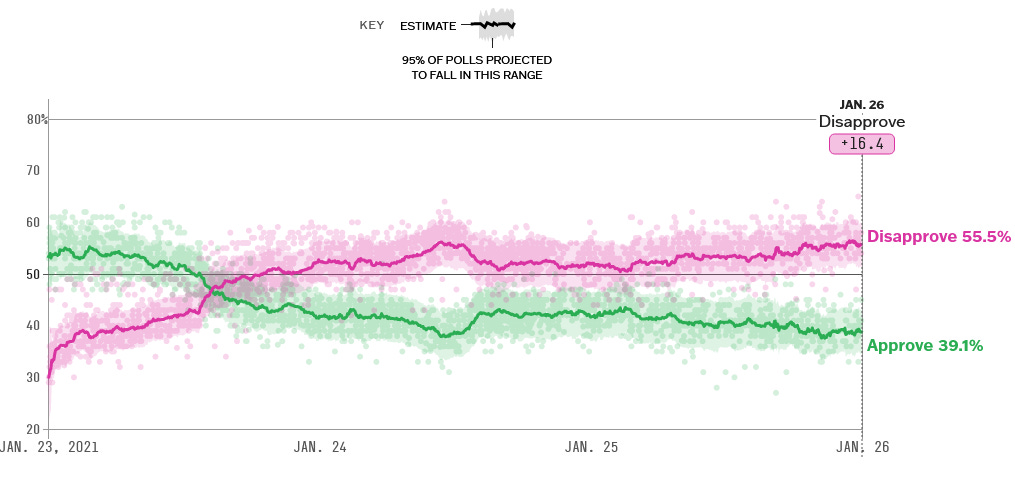

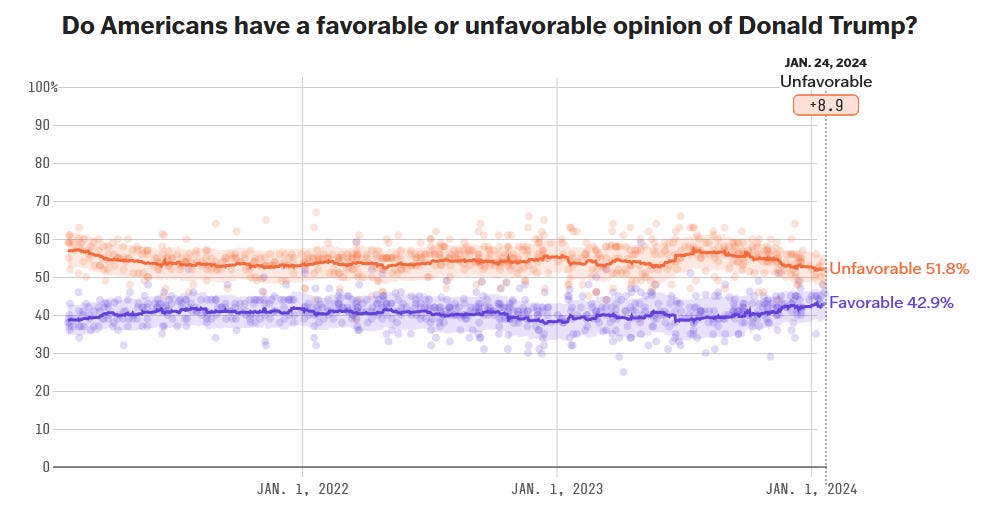

Biden’s popularity has been going down since the beginning of 2023:

And Trump’s has been going up since the same time:

Why? All the things voters might blame Biden for - inflation, Ukraine, Gaza - happened either well before or well after the early-2023 period when his numbers began to decline. So I’m not sure. Maybe people just got fed up?

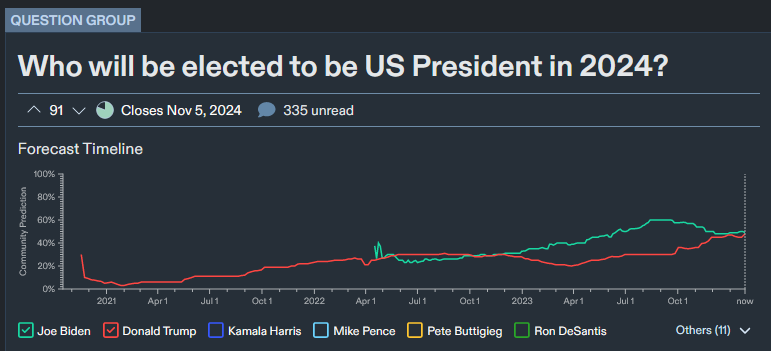

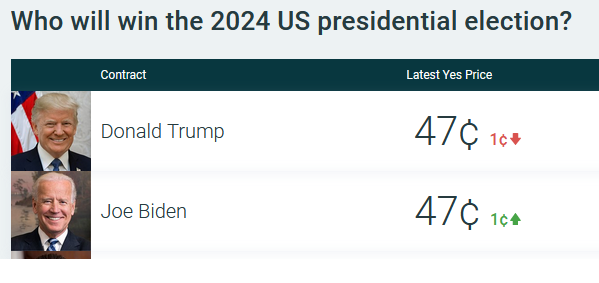

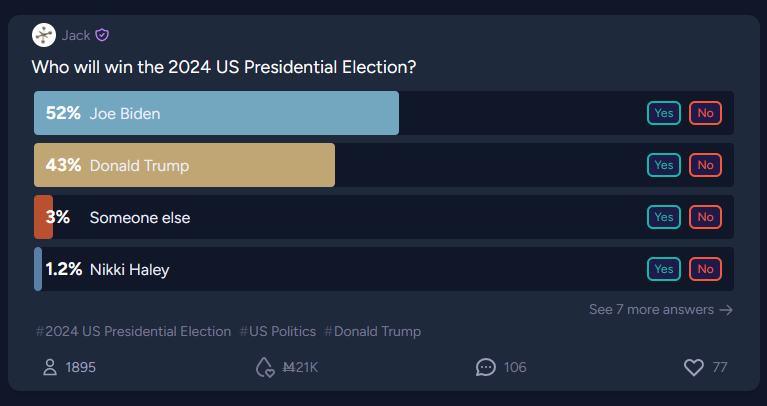

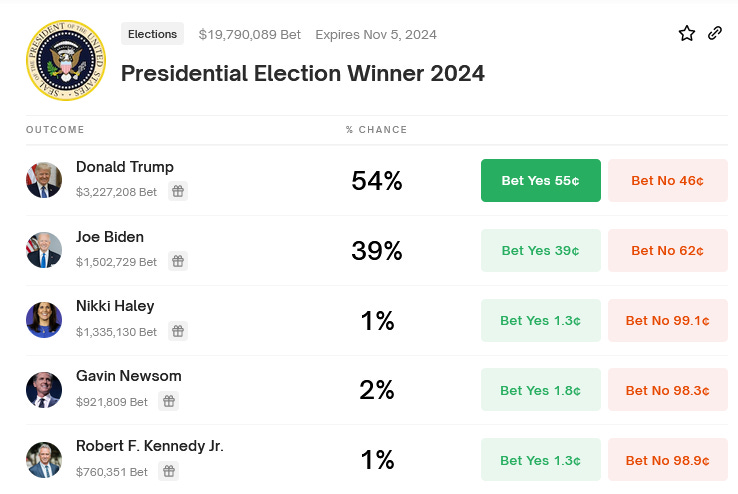

Vox has a standard article about how we can’t be sure whether bad polls are bad, or whether they don’t matter this far before an election. This ought to be exactly the kind of problem prediction markets are good for, but:

…Metaculus and PredictIt are 50-50, Manifold favors Biden, and Polymarket favors Trump. Shouldn’t really be possible, should it?

This is probably the problem Johnson mentioned above. Manifold has lots of young people and rationalists, who probably lean Biden. Polymarket has lots of degenerate gamblers who use VPNs, who probably lean Trump.

But as we saw above, the two best sources for politics have previously been Metaculus and Nate Silver. Nate Silver says (unofficially, here) that “If we do get a Trump-Biden rematch, I think the odds are roughly 50/50.” Metaculus agrees. So fine. The real odds are 50-50.

I do think you could probably make a lot of real money in expectation buying Biden on Polymarket - if you were a degenerate gambler with a VPN, of course.
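To make "real money in expectation" concrete, here is a rough sketch of the arithmetic. The 40-cent share price and the fee parameter are hypothetical placeholders, not actual Polymarket figures; the only number taken from the discussion above is the 50% "true" probability.

```python
def expected_profit_per_share(price: float, true_prob: float,
                              fee_on_profit: float = 0.0) -> float:
    """Expected profit from buying one YES share.

    A share costs `price` (between 0 and 1) and pays out 1 if the event
    happens, 0 otherwise. `fee_on_profit` is a hypothetical cut the
    platform takes from winnings.
    """
    win_profit = (1.0 - price) * (1.0 - fee_on_profit)
    loss = price
    return true_prob * win_profit - (1.0 - true_prob) * loss


# Hypothetical price: 40 cents for a Biden share; assumed true odds: 50%.
edge = expected_profit_per_share(price=0.40, true_prob=0.50)
print(f"Expected profit per share: {edge:.2f}")
```

If the price equals the true probability and there are no fees, the expected profit is zero. Any positive fee shrinks the edge, which is part of why small mispricings on high-fee sites tend to go uncorrected.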

Aaronson’s Five AI Worlds

One flaw of forecasting is that it can only distribute probability among pre-selected outcomes. Usually those outcomes are “yes” or “no” on a binary event.

For slightly more complicated events like the US Presidential Election, you can sort of chain these together - will Biden win the nomination Y/N? Will Biden win the Presidency Y/N? If Trump loses, will he concede gracefully Y/N? - and sort of get a picture of what might happen.

For much more complicated events, it’s not really obvious how to do this.

Here’s an experiment courtesy of Metaculus, Scott Aaronson, and Boaz Barak. Aaronson and Barak wrote a blog post trying to divide AI scenarios into five categories, which Metaculus summarizes as:

AI-Fizzle. In this scenario, AI “runs out of steam” fairly soon. AI still has a significant impact on the world (so it’s not the same as a “cryptocurrency fizzle”), but relative to current expectations, this would be considered a disappointment. Rather than the industrial or computer revolutions, AI might be compared in this case to nuclear power: people were initially thrilled about the seemingly limitless potential, but decades later, that potential remains mostly unrealized.

Futurama. In this scenario, AI unleashes a revolution that’s entirely comparable to the scientific, industrial, or information revolutions (but “merely” those). AI systems grow significantly in capabilities and perform many of the tasks currently performed by human experts at a small fraction of the cost, in some domains superhumanly. However, AI systems are still used as tools by humans, and except for a few fringe thinkers, no one treats them as sentient. AI easily passes the Turing test, can prove hard theorems, and can generate entertaining content (as well as deepfakes). But humanity gets used to that, just like we got used to computers creaming us in chess, translating text, and generating special effects in movies.

AI-Dystopia. The technical assumptions of “AI-Dystopia” are similar to those of “Futurama,” but the upshot could hardly be more different. Here, again, AI unleashes a revolution on the scale of the industrial or computer revolutions, but the change is markedly for the worse. AI greatly increases the scale of surveillance by government and private corporations. It causes massive job losses while enriching a tiny elite. It entrenches society’s existing inequalities and biases. And it takes away a central tool against oppression: namely, the ability of humans to refuse or subvert orders.

Singularia. Here AI breaks out of the current paradigm, where increasing capabilities require ever-growing resources of data and computation, and no longer needs human data or human-provided hardware and energy to become stronger at an ever-increasing pace. AIs improve their own intellectual capabilities, including by developing new science, and (whether by deliberate design or happenstance) they act as goal-oriented agents in the physical world. They can effectively be thought of as an alien civilization–or perhaps as a new species, which is to us as we were to Homo erectus. Fortunately, though (and again, whether by careful design or just as a byproduct of their human origins), the AIs act to us like benevolent gods and lead us to an “AI utopia.” They solve our material problems for us, giving us unlimited abundance and presumably virtual-reality adventures of our choosing.

Paperclipalypse. In “Paperclipalypse” or “AI Doom,” we again think of future AIs as a superintelligent “alien race” that doesn’t need humanity for its own development. Here, though, the AIs are either actively opposed to human existence or else indifferent to it in a way that causes our extinction as a byproduct. In this scenario, AIs do not develop a notion of morality comparable to ours or even a notion that keeping a diversity of species and ensuring humans don’t go extinct might be useful to them in the long run. Rather, the interaction between AI and Homo sapiens ends about the same way that the interaction between Homo sapiens and Neanderthals ended.

I think Paperclipalypse requires human extinction before 2050. It’s at 11%. But Metaculus’ direct “human extinction by 2100” market is only at 1.5%. Either I’m missing something, or something’s wrong. My guess: different populations of forecasters looking at each question.
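The inconsistency is just the rule that if A implies B, then P(B) has to be at least P(A). Here is a minimal sketch of that check, using the two numbers quoted above and assuming (as I do) that Paperclipalypse really does imply human extinction by 2100. The function name is made up for illustration.

```python
def is_coherent(p_implying: float, p_implied: float) -> bool:
    """If event A implies event B, coherence requires P(B) >= P(A)."""
    return p_implied >= p_implying


p_paperclipalypse = 0.11    # scenario question, as quoted above
p_extinction_2100 = 0.015   # direct extinction question, as quoted above

# Assumption: Paperclipalypse implies extinction before 2100.
print(is_coherent(p_paperclipalypse, p_extinction_2100))

# How far the direct question would have to rise to be consistent.
gap = p_paperclipalypse - p_extinction_2100
print(f"Gap: {gap:.3f}")
```

If the assumption is wrong (say, forecasters read Paperclipalypse as allowing some humans to survive), the check doesn't apply, which is one way the two numbers could both be honest.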

This Month In The Markets

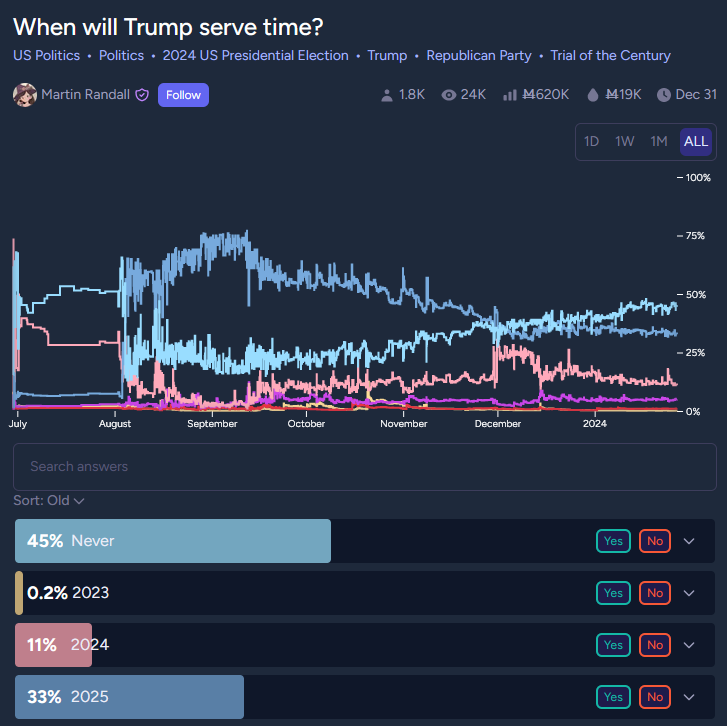

I haven’t been following the Trump Legal Issues Cinematic Universe, so I was surprised to see “Trump won’t go to jail” climbing so consistently. Maybe this is just because he has a higher chance of becoming President before the trials are over?

I was surprised to see this one; I’d previously seen that only 15% of Israelis wanted him to stay after the war was over. But he’s now up to 32%. Why? He doesn’t seem to be handling the war very well. I think the difference might just be that the first poll asked if he was good, and the second poll asked if there was anyone else better.

Both 15% and 32% are low numbers, but Israelis in the comments bring up that there aren’t scheduled elections in 2024. So if Bibi didn’t resign and his coalition partners didn’t desert him, he could potentially cling on. And nobody clings to power more ferociously than Benjamin Netanyahu.

That recent spike comes from Musk’s claim three hours ago that the first Neuralink has been implanted into a human being.

It looks like things have gone downhill for Ukraine since about October. Related:

What am I missing here? It’s got to be higher than 8%, right?

Short Links

1: For the past few years, ACX has run a yearly forecasting contest. This year I’m too busy, and have outsourced it to Metaculus. I linked it in an Open Thread and meant to link it here before it closed, but it took too long and I missed the January 21 end date. Still, you can find it here. I realize I still have to judge last year’s contest. I predict this will happen in February, when a friend and I both have some more time to work on it.

2: Manifold.Love added OKCupid-style compatibility questions and match percentage, but they got rid of the prediction markets. I can’t say the prediction market feature really worked so well. Still, it’s sad. Site administrator James Grugett says:

I’ve temporarily removed the whole “Add matches” and betting UI for Manifold Love prediction markets. I hope to bring back something a bit better (and more useful!) via an opt-in premium feature.

3: Superforecaster Robert de Neufville interviews Michael Story of the Swift Centre, a group trying to get policymakers to pay attention to (and maybe pay for) good forecasts.

4: Matt Yglesias grades his predictions for 2023, finds his calibration is improving (paid post). He also forecasts “a good election for Republicans [this] year”, including a 60% chance Trump wins.

5: Jake Gloudemans, who won Metaculus’ Quarterly Cup forecasting prize, reflects on his experience and strategy. He says he’s new to forecasting and shouldn’t be that good and so maybe the real experts just sat this one out - but he also says:

I would also add that I joined a different forecasting site, Manifold Markets back in August, and in 3 months have turned the 500 starting ‘Mana’ you get when you sign up into 8500 mana, and have specifically made a point to not do any research and just buy/sell based on intuition. Again, not sure what to conclude here, but it seems very possible that these sites are just full of people who are terrible at predicting things, such that it’s easy to do quite well by just being half-decent.

Maybe, but remember that from the inside, being good at something just feels like everyone else being inexplicably bad!