Mantic Monday 3/21/22

Warcasting

Changes in Ukraine prediction markets since my last post March 14:

- Will Kiev fall to Russian forces by April 2022?: 14% → 2%

- Will at least three of six big cities fall by June 1?: 70% → 53%

- Will World War III happen before 2050?: 21% → 20%

- Will Putin still be president of Russia next February?: 80% → 80%

- Will 50,000 civilians die in any single Ukrainian city?: 12% → 10%

- Will Zelenskyy no longer be President of Ukraine on 4/22?: 20% → 15%

If you like getting your news in this format, subscribe to the Metaculus Alert bot for more (and thanks to ACX Grants winner Nikos Bosse for creating it!)

Insight Prediction: Still Alive, Somehow

Insight Prediction was a collaboration between a Russia-based founder and a group of Ukrainian developers. So, uh, they’ve had a tough few weeks.

But getting better! Their founder recently announced on Discord:

I myself am (was?) an American professor in Moscow. I have been allowed to teach my next course which starts in 10 days online, and so I am moving back to the US on Sunday, to Puerto Rico. Some of our development team is stuck in Ukraine. I’ve offered to move them to Puerto Rico, but it’s not clear they’ll be able to leave the country anytime soon. Progress with the site may be slow, but obviously that’s not the most important thing now.

And:

I am now out of Russia, and on to Almaty, Kazakhstan. The people here are quite anti-war. I fly to Dubai in a bit. It was surprisingly difficult (and expensive) to book a ticket out of Moscow after all the airspace closures.

And:

I have made it to Puerto Rico! Thus, the base of Insight Prediction is now here in the US.

Out of the frying pan (Vladimir Putin) and into the fire (the CFTC). Still, welcome, and glad to hear you’re okay. Meanwhile, the Ukrainian development team continues to do good (can I say “heroic”?) work:

Our devs have fixed this issue [a bug when buying multiple shares], and are now pushing it to our beta server. Our main dev did this on his birthday, and with the threat of a nuclear accident at a nearby nuclear power plant bombed by the Russians.

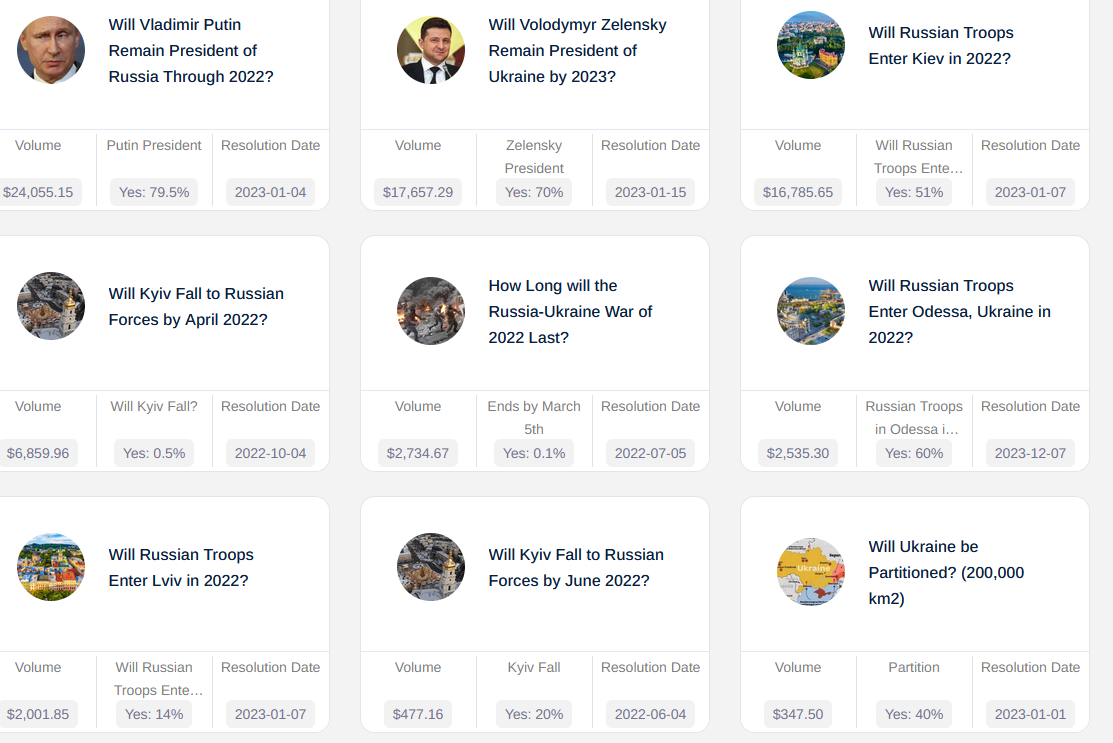

The upshot of all of this is that Insight is one of the leading platforms for predicting the Ukraine War right now:

Now that there are two good-sized real-money prediction markets, we can compare them. For example, the first question, on Putin, is at 79.5%, which is reassuringly close to the same question on Polymarket, at 76%.

On the other hand, the third market (will Russian troops enter Kiev?, currently at 52%) is almost a direct copy of this Metaculus question, which is currently at 92%. Why? The only difference I can find is that Insight requires two media sources to resolve positively and Metaculus only one. Surely people don’t think there’s a 40% chance troops will enter Kyiv but only one source will report it?
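To make the size of that gap concrete, here is a minimal sketch of the arithmetic. It assumes both prices can be read as honest probabilities, and that anything resolving the stricter (two-source) Insight market positively would also resolve the looser (one-source) Metaculus question positively. The function name is hypothetical and not taken from either platform.

```python
def implied_gap(p_loose: float, p_strict: float) -> tuple[float, float]:
    """Compare a looser question with a stricter near-copy of it.

    Assumes the strict outcome is a subset of the loose outcome, so the
    difference is the probability of "loose resolves yes, strict does not".
    """
    gap = p_loose - p_strict          # P(loose yes AND strict no)
    conditional = gap / p_loose       # P(strict no | loose yes)
    return gap, conditional


# Metaculus (one source needed) at 92%, Insight (two sources needed) at 52%.
gap, conditional = implied_gap(0.92, 0.52)
print(f"gap: {gap:.0%}, conditional on entry: {conditional:.0%}")
# prints "gap: 40%, conditional on entry: 43%"
```

Read this way, the two prices together would imply that if troops do enter the city, there is roughly a 43% chance that a second media source never reports it, which is why the gap looks more like a pricing disagreement than a real difference in resolution criteria.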

Further Insight

Also in Insight News: after escaping Russia, the founder of Insight has decided to go public with his real identity:

The founder of Insight Prediction is Douglas Campbell, who holds a Ph.D. from the University of California, Davis. He is a former Staff Economist on President Obama’s Council of Economic Advisors, and prior to that was a Modelling Analyst in the targeting department of the Democratic National Committee. Currently he is also an Assistant Professor at the New Economic School.

It’s exciting to have someone so influential involved in the field!

Campbell has since written a blog post apologizing for and explaining Insight’s relatively inaccurate predictions about the beginning of the war:

Insight Prediction had its first successful market, at least financially, on whether Russia would Invade Ukraine, doing nearly $400,000 in volume. I would say that while the market was successful beyond what we would have expected, we still walked away unhappy with how the market went in terms of accuracy. Metaculus at one point had something like a 50% higher probability of an invasion . . . experts were saying a war was imminent. On the eve of battle, we were only at 78%. They were right and we were wrong. What happened?

Part of the story is that the vast majority, and at the time of resolution, quite possibly all of the “No” shares in our market were purchased by one whale. (This user’s nickname comes from the famous Ukrainian poet Gogol’s novel “Taras Bulba”. I would not usually share this information, but it is already public.) When you have a new platform with only a handful of traders, one whale can move the price a lot.

However, he doesn’t deserve all of the blame. I could have moved the price myself, but I did not. Ultimately, the responsibility for our predictions rests with me. Aside from providing some liquidity on both sides of the market, which left me with too many “No” shares that I didn’t unwind until it was blindingly obvious, I basically missed out. Other participants didn’t move the market as much as they could have either. So, as good as someone who made $20,000 might feel, they missed a golden opportunity to have made a quick $200,000, so maybe they shouldn’t feel so great about the outcome either.

I don’t think anyone should have to apologize for not moving a prediction market enough, but at least that explains what’s going on. Also, RIP Taras Bulba :(

ACX 2022 Prediction Contest Data

Several hundred of you joined me in trying to predict 70ish events this year. Sam Marks and Eric Neyman kindly processed all the data. You can find everything suitable for public release here. If you want the full dataset, including individual-level information about each predictor, you can fill out a request form here.

What is “aggregate”? Sam and Eric applied a couple of tricks that have improved these kinds of forecasts in the past:

First, using the geometric mean of odds rather than the average of the probabilities. See here for a writeup of why this is a reasonable thing to do.

Second, the idea of extremizing (pushing the aggregate away from the prior – the prior being the information that is common to all forecasters, which in this case is your prediction – so as to not overweight it). This works well empirically; see also here for Jaime Sevilla’s writeup of my paper on the topic.

Third, how much to extremize? Apparently a factor of 1.55 is empirically optimal based on data from Metaculus. But also, I think that the more forecasters agree with each other, the less it makes sense to extremize (basically because they’re probably all using the same information, so it makes more sense to treat them all as a single forecaster). As far as I know this idea isn’t written about anywhere, but I’ll probably write about it on my blog sometime. For the spreadsheet I did an ad-hoc thing to take this into account.
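The first and third tricks can be sketched in a few lines. This is only an illustration under stated assumptions, not Sam and Eric’s actual code: the function names are hypothetical, extremizing is done in log-odds space (one common form; the writeup above doesn’t specify which form they used), the `prior` argument defaults to 50%, and the clipping epsilon is an arbitrary choice to avoid infinite odds. The ad-hoc adjustment for forecaster agreement is not described in enough detail to reproduce, so it is left out.

```python
import math

EPS = 1e-6  # assumed clipping bound so 0% and 100% forecasts stay finite


def logit(p: float) -> float:
    """Log-odds of a probability, clipped away from 0 and 1."""
    p = min(max(p, EPS), 1 - EPS)
    return math.log(p / (1 - p))


def inv_logit(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def geo_mean_of_odds(probs: list[float]) -> float:
    """Geometric mean of odds, i.e. the arithmetic mean of log-odds."""
    return inv_logit(sum(logit(p) for p in probs) / len(probs))


def extremize(p: float, factor: float = 1.55, prior: float = 0.5) -> float:
    """Push p away from the prior by scaling its log-odds distance from it."""
    return inv_logit(logit(prior) + factor * (logit(p) - logit(prior)))


def aggregate(probs: list[float], factor: float = 1.55, prior: float = 0.5) -> float:
    """Geometric mean of odds followed by extremizing."""
    return extremize(geo_mean_of_odds(probs), factor, prior)


# Hypothetical forecasts for one question.
forecasts = [0.60, 0.70, 0.80]
print(round(geo_mean_of_odds(forecasts), 3), round(aggregate(forecasts), 3))
```

With a factor of 1 the aggregate is just the geometric mean of odds; larger factors move it further from the prior, so an aggregate above the prior ends up higher and one below it ends up lower.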

If you think you know more tricks, produce your own aggregate (this would be an excellent reason to request the full data) and send it to me. If it turns out more accurate than Sam and Eric’s, I’ll give you some kind of recognition and prize.

The real dataset also has a “market” baseline that I didn’t include above. It’s mostly based off Manifold questions, but Manifold hadn’t really launched yet and most of them only had one or two bets and were wildly off everyone else’s guesses. I don’t think this is going to be a fair test of anything. Now that I know Sam and Eric are willing to put work into this, I’ll figure out something better for next year.

In fact, I think a coordinated yearly question set to use as a benchmark could be really good for this space. Right now there’s no easy way to compare eg Metaculus to Polymarket because they both use really different questions. I’m hoping to get people together next year, come up with a standard question set, and give it to as many platforms (and individuals!) as possible to see what happens.

Shorts

1: AI researcher Rodney Brooks grades his AI-related predictions from 2018.

2: An Analysis Of Metaculus’ Resolved AI Predictions And Their Implications For AI Timelines. “Overall it looked like there was weak evidence to suggest the community expected more AI progress than actually occurred, but this was not conclusive.”

3: An interesting counterexample: When Will Programs Write Programs For Us? The community prediction bounced between 2025 and 2028 (my own prediction was in this range). Even in late 2020, just before the question stopped accepting new predictions, the forecast was January 2027. The real answer was six months later, mid-2021, when OpenAI released Codex. I don’t want to update too much on a single data point, but this is quite the data point. If I had to cram this into the narrative of “not systematically underappreciating speed of AI progress”, I would draw on eg this question about fusion, where the resolution criteria (ignition) may have been met by an existing system - tech forecasters tend to underestimate the ability of cool prototypes to fulfill forecasting question criteria without being the One Amazing Breakthrough they’re looking for.

4: New (to me) site, Hedgehog Markets. So far they mostly just have entertaining tournaments, but the design is pretty and they seem interested in genuinely solving the decentralization problem, so I’ll be watching them.

5: New (to me) site, Aver. So far not many interesting markets, and very crypto-focused, but somehow (?) they have six-figure pools on several questions. Got to figure out what’s going on here.

6: New (to me) s . . . you know what, just assume there are a basically infinite number of crypto people starting not-entirely-confidence-inspiring prediction markets right now:

DELPHIBETS (@delphibets): The long wait has come to an end! 🔥 🏛 DELPHIBETS, the first prediction market platform exclusively on @radixdlt goes public 🚀 Follow us to make sure you don’t miss anything: 📱 Telegram: t.me/delphibets ⚠️ First announcement: t.me/delphibets/33

Linked announcement (t.me, Jonas in DELPHIBETS 🤝 Community): ⚠️ First Announcement! ⚠️ The Radix ecosystem has a new baby 🚀 It’s time to introduce you to a new project – the first prediction market exclusively on Radix: 🏛 DELPHIBETS – the social oracle. With the launch of Babylon, DELPHIBETS will provide an intuitive prediction market platform. You wil…

[71 Likes, 36 Retweets](https://twitter.com/delphibets/status/1503101907719667717)

Kmz Kmz | UltiBets (@Kmz_kmz_): Couldn’t agree more on this take ! That’s why I founded @UltiBets, the first multichain betting /prediction market protocol ! We have our @UltiBets IDO tomorrow on @thorstarter so nice coincidence to read this tweet

Quoting mhonkasalo (@mhonkasalo): Prediction markets are still the big underrated (eventual) use case unlocked by blockchains.

4:15 PM ∙ Mar 21, 2022

[4 Likes, 2 Retweets](https://twitter.com/Kmz_kmz/status/1505941121133953035)

RedChillies Labs (REDC) (@RedChilliesLabs): Finally, the hottest prediction market is now live. Predict the price of $ZIL token by March 31st. Link: zilchill.com/user-market/10… #RedChillies #ZilChill #PredictionMarket

4:39 AM ∙ Mar 10, 2022

[18 Likes, 4 Retweets](https://twitter.com/RedChilliesLabs/status/1501779846212603904)

teeblaqjae✌ (@teeblaqjae): Prediction Market allowing users to predict outcomes of sports, eSports and other gaming events in a Decentralized manner and earn GFX rewards.

10:22 PM ∙ Mar 9, 2022

🚀 Altcoin Gems 💎 (@AltGemHunter): 🏆2022 OSCARS PREDICTIONS🏆 ❓Oscar 2022 is about to begin! Who will win the Best Actor 2022 Oscar? Which film will win as the Best Picture? 🌎Find it out together with a #DeFi Prediction market @oracula_io!

[89 Likes, 73 Retweets](https://twitter.com/AltGemHunter/status/1499784163167436805)

If any of them start looking more important than the others, I’ll let you know.