Mantic Monday 4/18/22

Warcasting

Changes in Ukraine prediction markets since my last post March 21:

- Will at least three of six big cities fall by June 1?: 53% → 5%
- Will World War III happen before 2050?: 20% → 22%
- Will Putin still be president of Russia next February?: 80% → 85%
- Will 50,000 civilians die in any single Ukrainian city?: 10% → 10%

If you like getting your news in this format, subscribe to the Metaculus Alert bot for more (and thanks to ACX Grants winner Nikos Bosse for creating it!)

Nuclear Risk Update

Last month superforecaster group Samotsvety Forecasts published their estimate of the near-term risk of nuclear war, with a headline number of 24 micromorts per week.

A few weeks later, J. Peter Scoblic, a nuclear security expert with the International Security Program, shared his thoughts. His editor wrote:

I (Josh Rosenberg) am working with Phil Tetlock’s research team on improving forecasting methods and practice, including through trying to facilitate increased dialogue between subject-matter experts and generalist forecasters. This post represents an example of what Daniel Kahneman has termed “adversarial collaboration.” So, despite some epistemic reluctance, Peter estimated the odds of nuclear war in an attempt to pinpoint areas of disagreement.

In other words: the Samotsvety analysis was the best that domain-general forecasting had to offer. This is the best that domain-specific expertise has to offer. Let’s see if they line up:

Superficially not really! In contrast to Samotsvety’s 24 micromorts, Scoblic says 370 micromorts, an order of magnitude higher.

Most of the difference comes from two steps.

First, conditional on some kind of nuclear exchange, will London (their index city for where some specific person worrying about nuclear risk might be) get attacked? Samotsvety says only 18% chance. Scoblic raises this to 65%, saying:

This is the most problematic of the component forecasts because [Samotsvety’s estimate] implies a highly confident answer to one of the most significant and persistent questions in nuclear strategy: whether escalation can be controlled once nuclear weapons have been used. Is it possible to wage a “merely” tactical nuclear war, or will tactical war inevitably lead to a strategic nuclear exchange in which the homelands of nuclear-armed states are targeted? Would we “rationally” climb an escalatory ladder, pausing at each rung to evaluate pros and cons of proceeding, or would we race to the top in an attempt to gain advantage? Is the metaphorical ladder of escalation really just a slippery slope to Armageddon? This debate stretches to the beginning of the Cold War, and there is little data upon which to base an opinion, let alone a fine-grained forecast […]

What does this all mean for the forecast? Given the degree of disagreement and the paucity of data, it would not be unreasonable to assign this question 50/50 odds. . . . It is also worth noting that practitioners are not sanguine about this question. In 2018, General John Hyten, then head of U.S. Strategic Command, said this about escalation control after the annual Global Thunder exercise: “It ends the same way every time. It does. It ends bad. And bad meaning it ends with global nuclear war.”

So, I’d bump the 18% to the other side of “maybe” and then some: 65%.

Second, what is the probability that an “informed and unbiased” person could escape a city before it gets nuked? Samotsvety said 75%; Scoblic said 30%.

I think this is a fake disagreement. Some people I know were so careful that they had already left their cities by the time this essay was posted; the odds of these people escaping a nuclear attack are 100%. Other people are homebound, never watch the news, and don’t know there’s a war at all; the odds of these people escaping a nuclear attack are 0%. In between are a lot of different levels of caution; do you start leaving when the war starts to heat up? Or do you wait until you hear that nukes are already in the air? Do you have a car? A motorcycle for weaving through traffic? Do you plan to use public transit? My guess is that the EAs who Samotsvety were writing for are better-informed, more cautious, and better-resourced than average, and the 75% chance they’d escape was right for them. Scoblic seems to interpret this question as saying that people have to escape after the nuclear war has already started, and his 30% estimate seems fine for that situation.

If we halve Scoblic’s estimate (or double Samotsvety’s) to adjust for this “fake disagreement” factor - then it’s still 24 vs. 185 micromorts, a difference of 8x.
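For anyone who wants to check the arithmetic, here is a rough sketch using only the numbers quoted above. It assumes the component forecasts simply multiply, and that risk scales with the chance of *failing* to escape (one minus the escape probability); both are my simplifications for illustration, not necessarily how either group built their model.

```python
# Headline estimates quoted above, in micromorts.
samotsvety = 24
scoblic = 370

# Raw gap between the two headline numbers.
headline_gap = scoblic / samotsvety  # about 15.4x

# Step 1: chance London gets hit, given some nuclear exchange (18% vs. 65%).
london_factor = 0.65 / 0.18  # about 3.6x

# Step 2: chance of escaping the city (75% vs. 30%).
# ASSUMPTION: what matters for risk is the chance of NOT escaping.
no_escape_factor = (1 - 0.30) / (1 - 0.75)  # about 2.8x

# ASSUMPTION: the two steps combine multiplicatively.
two_step_factor = london_factor * no_escape_factor  # about 10.1x

# The "fake disagreement" adjustment: halve Scoblic's estimate.
adjusted_scoblic = scoblic / 2  # 185
adjusted_gap = adjusted_scoblic / samotsvety  # about 7.7x

print(f"headline gap:      {headline_gap:.1f}x")
print(f"two-step factor:   {two_step_factor:.1f}x")
print(f"adjusted estimate: {adjusted_scoblic:.0f} micromorts")
print(f"adjusted gap:      {adjusted_gap:.1f}x")
```

Under these assumptions the two steps account for roughly 10x of the roughly 15x headline gap, which fits "most of the difference". The escape step alone comes to about 2.8x, so halving Scoblic's number is a slightly conservative way to remove it.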

What do we want - and what do we have the right to expect - from forecasting? If it’s order-of-magnitude estimates, it looks like we have one: we’ve bounded nuclear risk in the order of magnitude between 24 and 185 (at least until some third group comes around with something totally different than either of these two).

Or maybe it’s a better understanding of our “cruxes” - where the real disagreement is that accounts for almost all of the downstream uncertainty. In this case, this exercise is pretty successful - everyone is pretty close to each other on the risk of small-scale nuclear war, and the big disagreement is over whether small-scale nuclear war would inevitably escalate.

The Samotsvety team says they plan to meet, discuss Scoblic’s critiques, and see if they want to update any of their estimates. And they made what I consider some pretty strong points in the comments that maybe Scoblic will want to adjust on. Both sides seem to be treating this as a potential adversarial collaboration, and I’d be interested in seeing if this can bound the risk even further.

AI Risk “Update”

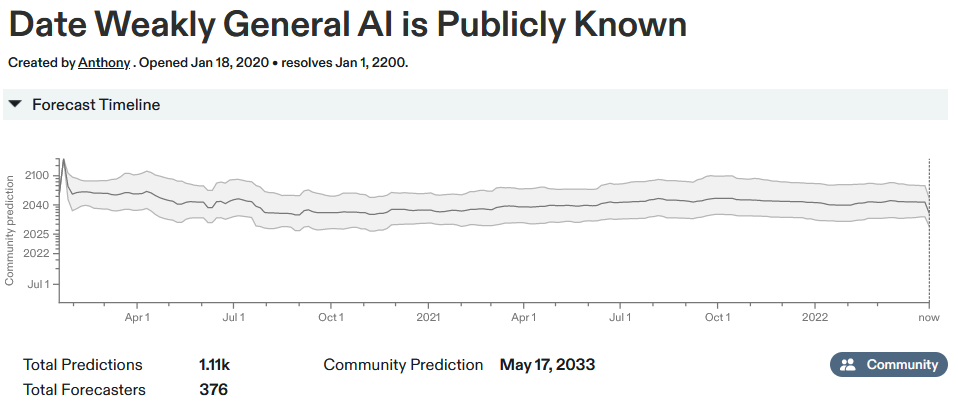

Everyone’s been talking about this Metaculus question:

“Weakly general AI” in the question means a single system that can perform a bunch of impressive tasks - passing a “Turing test”, scoring well on the SAT, playing video games, etc. Read the link for the full operationalization, but the short version is that this is advanced stuff AI can’t do yet, but still doesn’t necessarily mean “totally equivalent to humans in any way”, let alone superintelligence.

For the past year or so, this had been drifting around the 2040s. Then last week it plummeted to 2033. I don’t want to exaggerate the importance of this move: it was also at 2033 back in 2020, before drifting up a bit. But this is certainly the sharpest correction in the market’s two-year history.

The drop corresponded to three big AI milestones. First, DALL-E 2, a new and very impressive art AI.

(source)


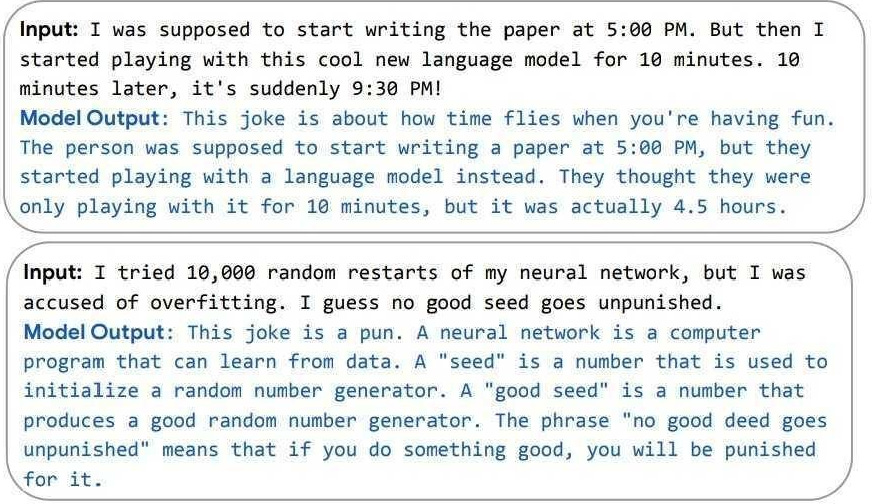

Second, PaLM, a new and very impressive language AI:

PaLM explaining jokes (source)

Third, Chinchilla, a paper and associated model suggesting that people have been training AIs inefficiently all this time, and that probably a small tweak to the process could produce better results with the same computational power.

(there’s also this Socratic Models paper that I haven’t even gotten a chance to look at, but which looks potentially impressive)

This raises the eternal question of “exciting game-changer” vs. “incremental progress at the same rate as always”. These certainly don’t seem to me to be bigger game changers than the original DALL-E or GPT-3, but I’m not an expert and maybe they should be. It’s just weird that they used up half our remaining AI timeline (i.e. moved the date when we should expect AGI by this definition from 20 years out to 10 years out) when I feel like there have been four or five things this exciting in the past decade.

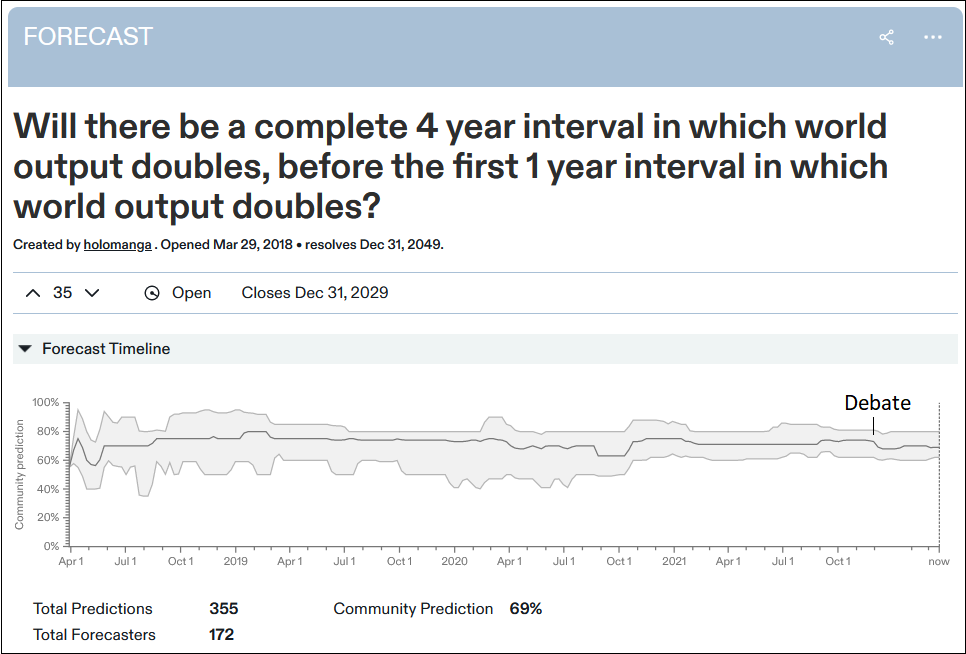

Or is there another explanation? A lot of AI forecasters on Metaculus are Less Wrong readers; we know that the Less Wrong Yudkowsky/Christiano debate on takeoff speeds moved the relevant Metaculus question a few percent:

Early this month on Less Wrong, Eliezer Yudkowsky posted MIRI Announces New Death With Dignity Strategy, where he said that after a career of trying to prevent unfriendly AI, he had become extremely pessimistic, and now expects it to happen in the relatively near-term and probably kill everyone. This caused the Less Wrong community, already pretty dedicated to panicking about AI, to redouble its panic. Although the new announcement doesn’t really say anything about timelines that hasn’t been said before, the emotional framing has hit people a lot harder.

I will admit that I’m one of the people who is kind of panicky. But I also worry about an information cascade: we’re an insular group, and Eliezer is a convincing person. Other communities of AI alignment researchers are more optimistic. I continue to plan to cover the attempts at debate and convergence between optimistic and pessimistic factions, and to try to figure out my own mind on the topic. But for now the most relevant point is that a lot of people who were only medium panicked a few months ago are now very panicked. Is that the kind of thing that moves forecasting tournaments? I don’t know.

Shorts

1: Will Elon Musk acquire over 50% of Twitter by the end of 2022?

Why are these two so different? Do lots of people expect Musk to acquire Twitter after June 1 but still in 2022?

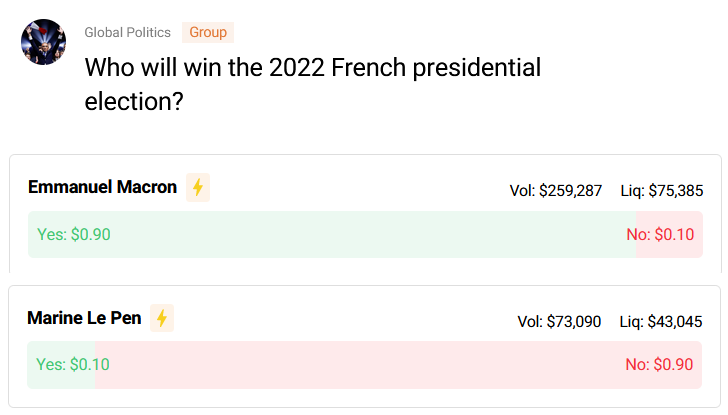

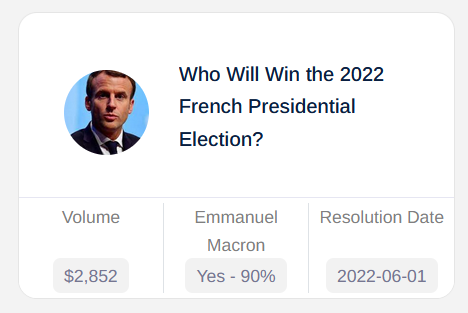

2: Will Marine Le Pen win the 2022 French presidential election?

Beautiful correspondence, beautiful volume numbers.

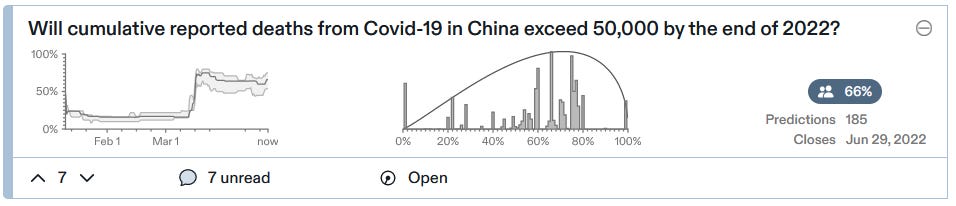

3: Will cumulative reported deaths from COVID-19 in China exceed 50,000 by the end of 2022?