Mantic Monday 5/9/22

Warcasting

Changes in Ukraine prediction markets since my last post April 18:

- Will at least three of six big cities fall by June 1?: 5% → 2%
- Will World War III happen before 2050?: 22% → 25%
- Will Putin still be president of Russia next February?: 85% → 80%
- Peace or cease-fire before 2023?: 65% → 52%
- Will Russia formally declare war on Ukraine before August?: (new) → 19%

Aborcasting

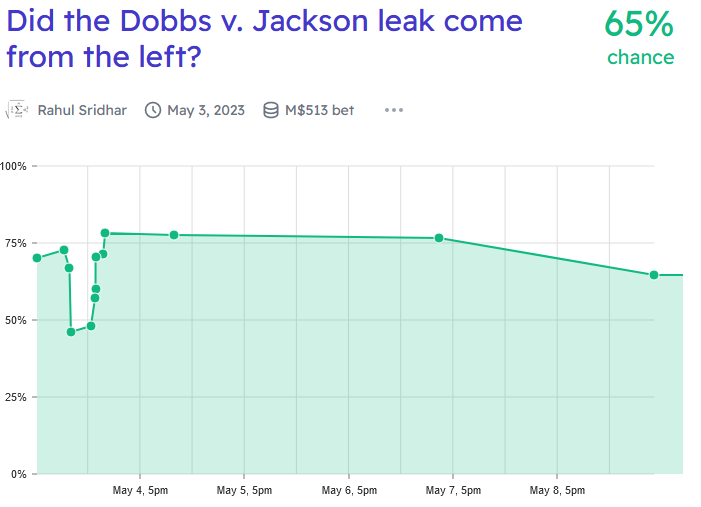

That is, predicting the results of the recent Supreme Court leak.

Quick summary: markets already expected that the Court would overturn Roe v. Wade (~70% soon), but this moved them closer to 95% immediately. Democrats’ chances in the mid-terms went up 3-5% on the news. Markets are extremely skeptical of claims that this will lead to bans on gay marriage or interracial marriage, or that the Democrats will respond with (successful) court-packing. A single very small and unreliable market says the leak probably came from the left, not the right.

Going through at greater length one-by-one:

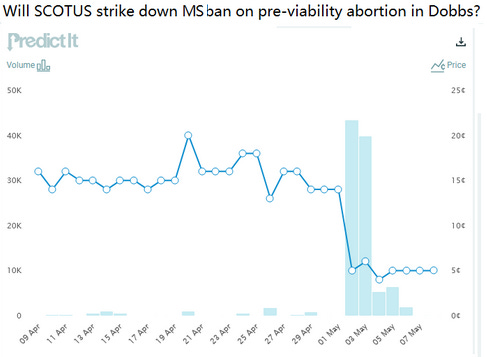

First: how much did the leak change predictions about the case itself? PredictIt had a market going, which said that even before the leak there was only a 15% chance the Court would rule against Mississippi (that is, strike down its 15-week abortion ban); after the leak, that dropped to 4%.

A Metaculus question on Roe v. Wade overturned by 2028 went from 70% to 95%:

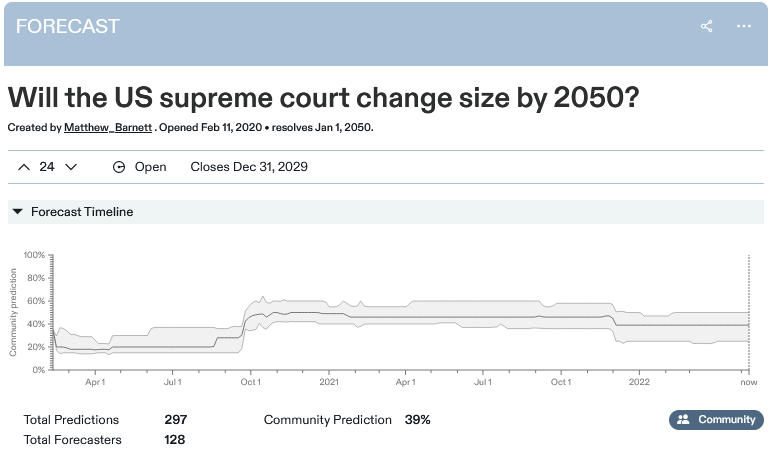

A question on court packing hasn’t moved at all, suggesting Metaculus doesn’t think this response is in the Democratic playbook.

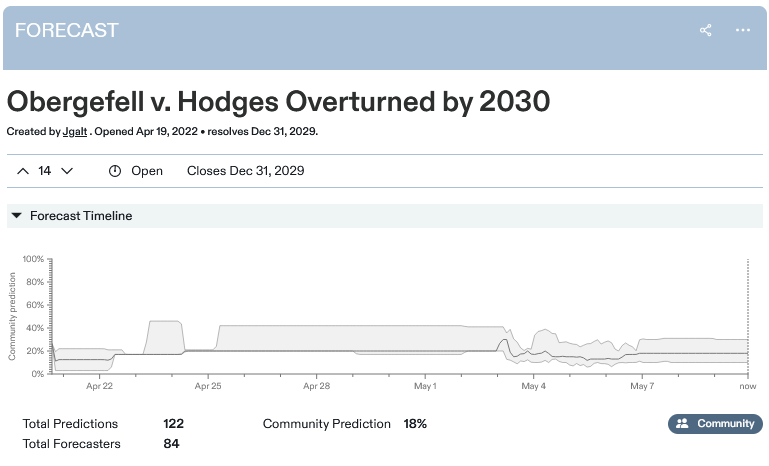

A question on Obergefell v. Hodges (the same-sex marriage decision) being overturned, with good participation both before and after the leak, shows no change in probability - it stays consistently around 18-20%.

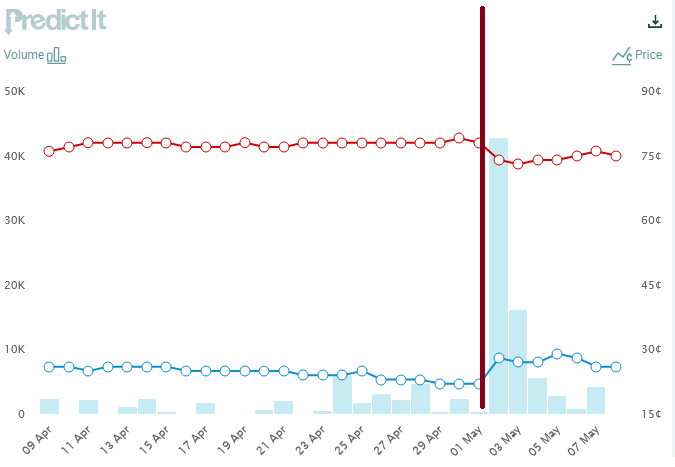

Here’s PredictIt on Republicans’ chances of taking the Senate in November:

The red line marks the Supreme Court leak. After a month of near-stability, Democrats’ chances went from 22% to 29%, before stabilizing around 26%. Markets on the Senate and on other sites like Polymarket tell a similar story.

This is as far as we can go without using Manifold. Manifold questions have much less volume than PredictIt or Metaculus, and I have much less confidence in them, but for the record, here are a few:

Disclaimer: I moved that one a bit myself; it was around 77%, and I thought that was too high.

Despite the fearmongering, this one looks about right to me. One more disclaimer: Manifold probably can’t handle probabilities this small correctly, and there’s no reason to think 0.2% is more realistic than 2%. It’s not 10%, though.

I couldn’t find some markets I wanted, so I’ve created them on Manifold for you to bet on:

By the way, in 2018, I got this horribly wrong - I said there was only a 1% chance of Roe v. Wade getting overturned in the next 5 years. I’ll comment on that further when I review that post in 2023.

What For-casting?

Many of you remain skeptical of prediction markets. It was one of the top answers to the “What Opinion Of Scott/ACX Do You Disagree With?” question on the subreddit, and there’s a relevant thread on the bulletin board too. So I guess it’s time to trot out my semi-annual lecture on why I think prediction markets are good.

A lot of the criticism focused on “are they really more accurate than the experts?” I have two answers there. First, a proof that (under certain assumptions) prediction markets should be at least as good as any other expert. Second, an argument that this is the wrong question, and that accuracy isn’t prediction markets’ killer app.

First, the proof. Suppose there were some specific expert who consistently outperformed prediction markets. For example, suppose Nate Silver were on average better than Polymarket. After this had been happening for a while, you would catch on. And then whenever Polymarket and Nate disagreed, you could bet money on Nate’s position on Polymarket and, on average, win. The exact amount you could make would depend on how much money was on the relevant Polymarket question and how strongly Nate and Polymarket disagreed, but as Polymarket gets bigger, that amount grows without limit.
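A minimal sketch of the arithmetic behind that proof. It assumes the expert’s probability is the true one and that each share pays $1 if its side wins; the function names and the 40%/55% numbers are hypothetical, and it ignores fees and the way prices move against you as you buy.

```python
def edge_per_share(market_price: float, expert_prob: float) -> tuple[str, float]:
    """Side to buy and expected profit per $1-payout share,
    assuming (hypothetically) that the expert's probability is the true one.

    A YES share costs market_price and pays $1 if the event happens;
    a NO share costs 1 - market_price and pays $1 if it doesn't.
    """
    if expert_prob > market_price:
        return "YES", expert_prob - market_price
    return "NO", (1 - expert_prob) - (1 - market_price)


def expected_profit(market_price: float, expert_prob: float, shares: int) -> float:
    # Ignores fees and price impact: in a real market the price moves against
    # you as you buy, so `shares` is capped by how much money is on the question.
    _, edge = edge_per_share(market_price, expert_prob)
    return edge * shares


# Hypothetical disagreement: the market says 40%, the expert says 55%.
side, edge = edge_per_share(0.40, 0.55)
print(side, round(edge, 2))                        # YES 0.15
print(round(expected_profit(0.40, 0.55, 10_000)))  # 1500
```

The profit scales linearly with the number of shares you can buy at the mispriced level, which is why a bigger market means a bigger prize for whoever notices the expert’s edge.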

So with a big enough prediction market, one of two things must be true: either there is no outside expert who is clearly better than the market, or you can get very rich easily. Either one seems pretty good.

(Right now, Nate Silver is better than PredictIt. I noticed this last election and made a few thousand dollars. If PredictIt had been as big then as Polymarket is now, either I would have made a few hundred thousand dollars, or - much more likely - someone else would have been incentivized to beat me to the trade, and the market would have been as accurate as Nate by the time I looked at it.)

Just because nobody can consistently outperform prediction markets doesn’t mean people can’t do it once in a while. Scott Adams said that Trump would definitely win in 2016 when prediction markets were only giving him a 20% chance or so. This doesn’t mean Scott Adams is smarter than prediction markets; it means he got lucky. Nothing here rules out lucky people beating the market. It just rules out actually smart people beating the market so consistently that you can notice it before the problem gets corrected. Or at least, if this does happen, you can get very rich easily.
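To see why one confident call proves little, here is a small simulation. Its assumptions are not from the text: the market is calibrated, so an event priced at 20% really happens 20% of the time; a hypothetical contrarian always says 100%; and accuracy is scored with the Brier score (squared error between forecast and outcome, lower is better).

```python
import random


def brier(forecast: float, outcome: int) -> float:
    # Squared error between a probability forecast and a 0/1 outcome; lower is better.
    return (forecast - outcome) ** 2


def simulate(n_events: int, market_prob: float = 0.20, seed: int = 0) -> tuple[float, float, float]:
    """Assumes a calibrated market: each event happens with probability market_prob.
    A hypothetical contrarian always forecasts 100% for the underdog."""
    rng = random.Random(seed)
    market_total = 0.0
    contrarian_total = 0.0
    contrarian_wins = 0
    for _ in range(n_events):
        outcome = 1 if rng.random() < market_prob else 0
        m = brier(market_prob, outcome)
        c = brier(1.0, outcome)
        market_total += m
        contrarian_total += c
        if c < m:  # the contrarian "beat the market" on this single event
            contrarian_wins += 1
    return market_total / n_events, contrarian_total / n_events, contrarian_wins / n_events


market_score, contrarian_score, win_rate = simulate(10_000)
print(f"market Brier {market_score:.3f}, contrarian Brier {contrarian_score:.3f}")
print(f"contrarian beats the market on {win_rate:.0%} of individual events")
```

Under these assumptions the market’s average score should land near 0.16 and the contrarian’s near 0.80, even though the contrarian wins roughly one event in five. Any single win looks impressive; the track record does not.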

Moving on to the second point: I don’t think accuracy is the killer app. The killer apps are trust, aggregation, and clarity.

On the DSL thread, someone brought up that the US government was quicker to call the Russian invasion of Ukraine than most prediction markets. I agree this happened. In some sense, it’s unsurprising; the US government has spy satellites, moles in the Kremlin, and lots of highly-paid analysts. Of course they should do better than everyone else.

And yet “trust the US government” has so far failed to solve all of our epistemic problems. Partly this is because the US government constantly disagrees with itself (the FDA got in a fight with Biden over vaccine readiness; Trump’s EPA and Biden’s EPA made very different statements on climate change). Partly it’s because, for internal political or military/geopolitical reasons, the US government has lots of incentives to lie or stretch the truth. Partly it’s because other organizations with the same advantages as the US government make counterclaims (e.g. the Ukrainian government said their intelligence told them Russia wouldn’t invade, and they also seemed pretty trustworthy).

The point of prediction markets isn’t that they have good spy satellites. It’s to aggregate information from a bunch of different sources weighted by trust. We can’t trust any individual to do this, because they would just have their own bias (even if they started out unbiased, once they became important enough to matter, biased interests would take them over).

All prediction markets everywhere should give the same result on the same question. Proof: if this weren’t true, you could get rich easily by buying on the market with the lower number, shorting on the market with the higher number, and making a consistent profit no matter what happened. This prediction is experimentally confirmed: different prediction markets are usually within a few percentage points of each other on the same question.
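The arbitrage in that proof can be checked in a few lines. A minimal sketch, assuming two markets quote YES prices for the same $1-payout contract; buying NO on the dearer market stands in for shorting YES there, and fees, spreads, and locked-up capital are ignored. The function name and the 30%/36% prices are hypothetical.

```python
def arbitrage_profit(price_a: float, price_b: float, event_happens: bool) -> float:
    """Profit from one YES share on the cheaper market plus one NO share on the
    dearer market. Fees, spreads, and locked-up capital are ignored."""
    low, high = sorted((price_a, price_b))
    cost = low + (1 - high)                   # YES at the low price + NO at (1 - high price)
    yes_pays = 1.0 if event_happens else 0.0  # the YES share from the cheaper market
    no_pays = 0.0 if event_happens else 1.0   # the NO share from the dearer market
    return yes_pays + no_pays - cost          # always 1 - cost, i.e. high - low


# Hypothetical: one market says 30%, another says 36% on the same question.
for outcome in (True, False):
    print(outcome, round(arbitrage_profit(0.30, 0.36, outcome), 2))  # 0.06 either way
```

Exactly one of the two shares pays out whatever happens, so the profit is the price gap in both branches; it only disappears when the prices match.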

That makes them very different from newspapers / governments / pundits / etc. They form a natural waterline which can be taken as a precise readout of what the smartest and most-clued-in people think about a certain topic.

Prediction markets aren’t competing with Nate Silver or spy satellites, any more than the stock market is competing with investment banks and market analysts. Prediction markets are competing with newspapers, pundits, and government departments. They’re a source of dis-intermediated information, short-circuiting everyone who sets themselves up as authoritative intermediators. A prediction market future is one where disinformation, propaganda, and narrative construction - while not impossible - are constrained by everyone having access to the same facts, through a medium impossible for any single bad actor to influence.

Discorcasting

Manifold recently ran an unintentional, kind of crazy experiment in manipulating prediction markets.

Remember, Manifold is a place where anyone can start their own markets using play money. They’re still trying to come up with a business model, but so far you can spend real money to buy extra play money. Why would you do that? I don’t know, but to sweeten the pot, even a little real money can buy lots of play money. This is the background to the ACX Discord Moderator Market.

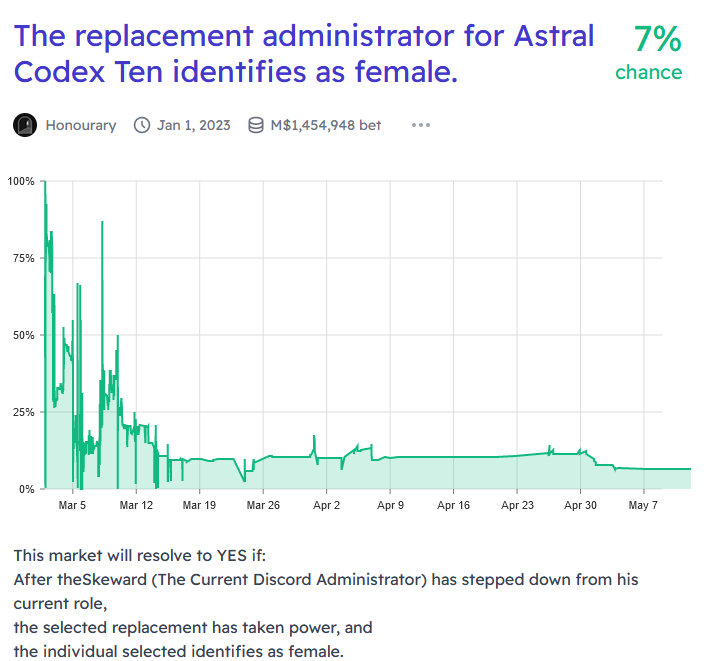

There’s a community on the chat app Discord associated with my blog. Earlier this year, the administrator announced they were going to step down and appoint a replacement, and some people on Manifold started speculating on who it would be. One of the markets that sprang up was “[Will] the replacement administrator for [ACX Discord] identify as female?”

My read is that the right answer for this is around 10%. A bunch of surveys have shown that the ACX community is about 90% male / 10% female, and the Discord seems pretty similar. There were no especially strong candidates for moderator, and the short list of names that got passed around was also about 90% male / 10% female. I’m just one guy, don’t trust me too much, but I would have gone with 10%.

What actually happened was - the market shot up to near 100%. Everyone bet against it until it was back below 50-50, and then it shot up again. This cycle repeated 5-6 times, after which it finally stabilized around 10% (it’s at 7% now).

I don’t know exactly what went on here. My guess is that somebody spent thousands of dollars of real money testing whether it was possible to manipulate prediction markets. They got an absolute fortune in play money, then spent it all on trying to push this market up as high as it would go.

The end result: this has become the highest-volume market in the history of Manifold, with about 5x more volume than runners-up like “will Russia invade Ukraine?” The $1.4 million in play money corresponds to about $14,000 in real dollars. Not all of this was spent by the manipulator. Some of it was normal trading, and some of it was people betting against the manipulator. But if we assume that about half the trading on here was related to the manipulation, that suggests that the manipulator spent about $3,500 in real money, and then 50 to 100 other people invested all the play money they’d gotten through normal channels in bringing the probability back down again.
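The paragraph doesn’t spell out how “about half the trading” becomes $3,500. One reading that reproduces the figure: the $1.4 million / $14,000 ratio gives 100 units of play money per dollar, the manipulation-related half of the volume is $7,000, and that half splits roughly evenly between the manipulator’s own buying and other people’s counter-bets. The even split (`manipulator_side_share`) is an assumption, not something the text states.

```python
PLAY_MONEY_VOLUME = 1_400_000    # play money traded on the market (from the text)
REAL_DOLLAR_EQUIVALENT = 14_000  # its value in real dollars (from the text)


def manipulator_spend(manipulation_share: float = 0.5,
                      manipulator_side_share: float = 0.5) -> tuple[float, float, float]:
    # manipulation_share: "about half the trading" was manipulation-related (text).
    # manipulator_side_share: assumed fraction of that done by the manipulator,
    # with the rest being counter-bets (hypothetical, not stated in the text).
    rate = PLAY_MONEY_VOLUME / REAL_DOLLAR_EQUIVALENT           # play money per dollar
    related_usd = REAL_DOLLAR_EQUIVALENT * manipulation_share  # manipulation-related volume
    return rate, related_usd, related_usd * manipulator_side_share


rate, related, spent = manipulator_spend()
print(rate, related, spent)  # 100.0 7000.0 3500.0
```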

Again trying to make sense of all this: lots of people want prediction markets to succeed, Manifold is popular, and some people (including me) have donated money to them out of goodwill. Maybe someone who wanted to donate $3,500 decided that instead they’d use it to buy play money and manipulate a market, for the lulz. And then of course traders would trade on the massively mispriced market, instead of the normal ones that might only have tiny mispricings. And that would bring it back down to the default 10%.

A lot of times, people ask: what if some very rich person tried to manipulate a prediction market? Like, what if there was a market on whether Trump would win the presidency, and Trump himself dropped $100 million into making it say YES, either out of vanity or in the hopes that people would think he was “inevitable” and stop resisting.

And the theory has always been: if traders see a clearly mispriced market, they’ll rush to it and correct the mispricing in order to make easy money. Trump would be out $100 million and the market would stay correct.
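A toy model of that theory, as a minimal sketch. It uses Hanson’s logarithmic market scoring rule (LMSR), a standard automated market maker, purely for illustration; it is not necessarily the mechanism Manifold uses. The liquidity parameter `B`, the 95% target, and the assumption that the true probability is 10% are all hypothetical. A manipulator pushes the price to 95%, traders who believe 10% push it back, and we compare expected profits under the true probability.

```python
import math

B = 100.0       # hypothetical liquidity parameter: larger B means prices move less per share
TRUE_P = 0.10   # assumed true probability (the ~10% base rate discussed above)


def cost(q_yes: float, q_no: float) -> float:
    # LMSR cost function; a trade costs cost(after) - cost(before).
    return B * math.log(math.exp(q_yes / B) + math.exp(q_no / B))


def price_yes(q_yes: float, q_no: float) -> float:
    # Instantaneous YES price implied by the outstanding share quantities.
    return 1.0 / (1.0 + math.exp((q_no - q_yes) / B))


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


q_yes, q_no = B * logit(TRUE_P), 0.0  # market starts correctly priced at 10%

# Manipulator buys YES until the price reads 95%.
shares_m = B * logit(0.95) - (q_yes - q_no)
spent_m = cost(q_yes + shares_m, q_no) - cost(q_yes, q_no)
q_yes += shares_m

# Traders who believe 10% buy NO until the price is back at 10%.
shares_c = (q_yes - q_no) - B * logit(TRUE_P)
spent_c = cost(q_yes, q_no + shares_c) - cost(q_yes, q_no)
q_no += shares_c

# Expected profit of each side if the true probability really is 10%.
ev_manipulator = TRUE_P * shares_m - spent_m
ev_correctors = (1 - TRUE_P) * shares_c - spent_c
print(f"final price {price_yes(q_yes, q_no):.2f}")
print(f"manipulator EV {ev_manipulator:.1f}, correctors EV {ev_correctors:.1f}")
```

Because the price ends where it started, both sides hold the same number of shares, so the market maker breaks even and the manipulator’s expected loss is exactly the correctors’ expected gain.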

We’ve never been able to test the theory, and until someone drops $100 million into a real money market, we never will. But this is pretty close. Someone spent $3,500 of real money on a play money market where most other people were trading with the equivalent of $10. Within a week, the market had soaked up a pretty good chunk of the trading resources in the entire play economy, and was back at the exact right level. I think if Trump ever tries market manipulation we’ll do just fine.

One weird coda to this story: the process of choosing the new administrator continues, and (surprisingly!) two of the leading candidates are female. Someone might be about to make a lot of money.

Morecasting

Some markets that interested me recently:

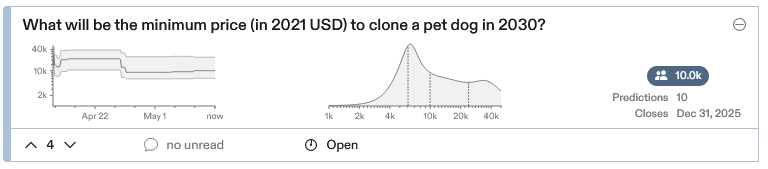

Metaculus on cloned dogs:

I was surprised they were asking about minimum price rather than whether it would be available at all, so I checked and - it’s already available? You can just get your dog cloned if you want? Currently it costs about $50,000. Weird way to find this out.

Metaculus on anti-aging:

This one’s been running since 2016, but I only just noticed it. I can’t say it’s wrong; it’s just strange to see such a wild possibility above the 50% level. It seems to have gradually gone down in the late 2010s, then picked back up again in 2020. A lot of commenters are making fun of this for being too low.

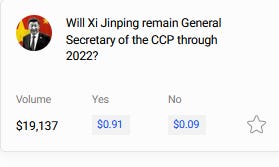

Metaculus on Xi:

Polymarket is less bullish:

…but it’s hard to tell if this is a genuine difference, or a result of the different questions (Xi loses election vs. loses power by any means including death).

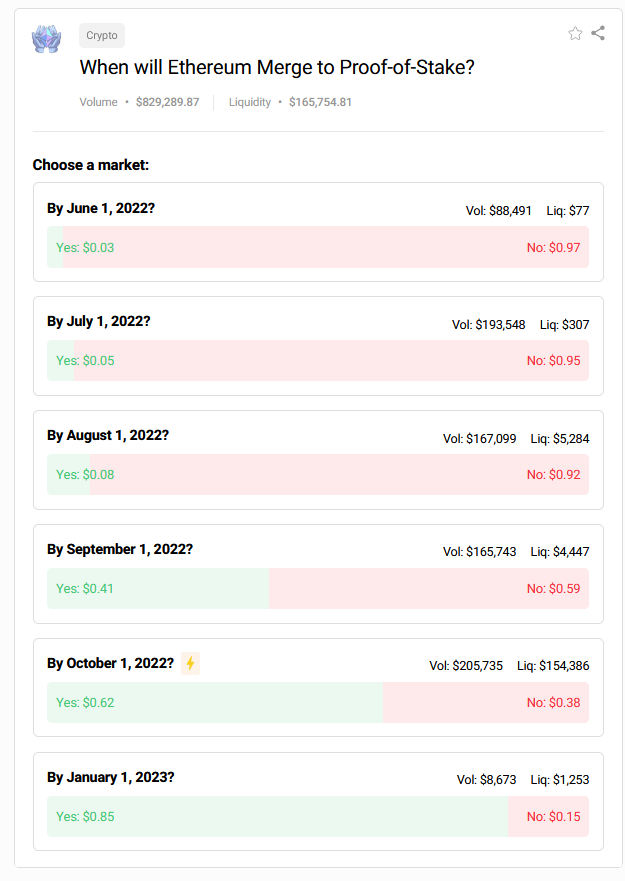

Polymarket on the Ethereum merge:

There are some interesting new prediction markets in the works - nothing ready for prime-time yet, but hopefully I’ll have something to report in the next month or two.