Mantic Monday 6/13/22

It’s been a while since we’ve done one of these, hopefully no major new crises started while we were . . . oh. Darn.

Mantic Monkey

Metaculus predicts 17,000 cases and 400 deaths from monkeypox this year. But as usual, it’s all about the distribution:

90% chance of fewer than 400,000 cases. 95% chance of fewer than 2.2 million cases. 98% chance of fewer than 500 million cases.

This is encouraging, but a 2% chance of >500 million cases (there have been about 500 million recorded COVID infections total) is still very bad. Does Metaculus say this because it’s true, or because there will always be a few crazy people entering very large numbers without modeling anything carefully? I’m not sure. How would you test that?
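Suppose you could see the individual forecasts (an assumption: Metaculus doesn't publish them). One way to test this would be to recompute the aggregate's quantiles with and without the handful of forecasters entering enormous numbers. The sketch below simplifies by treating the community distribution as an equal-weight mixture of individual lognormal forecasts. Every median and spread in it is a hypothetical placeholder, not real Metaculus data, and Metaculus's actual aggregation method differs in its details.

```python
import math
import random


def sample_mixture(forecasters, n_samples, rng):
    """Draw case counts from an equal-weight mixture of lognormal forecasts.

    Each forecaster is a hypothetical (median, sigma) pair describing a
    lognormal distribution over the number of cases.
    """
    draws = []
    for _ in range(n_samples):
        median, sigma = rng.choice(forecasters)  # pick a forecaster uniformly
        draws.append(rng.lognormvariate(math.log(median), sigma))
    return draws


def quantile(values, q):
    """Empirical q-quantile (nearest rank) of a list of numbers."""
    ordered = sorted(values)
    index = min(len(ordered) - 1, int(q * len(ordered)))
    return ordered[index]


def tail_report(forecasters, n_samples=100_000, seed=0):
    """Median, 90th and 98th percentiles of the mixture."""
    rng = random.Random(seed)
    draws = sample_mixture(forecasters, n_samples, rng)
    return {q: quantile(draws, q) for q in (0.5, 0.9, 0.98)}


# Hypothetical crowd: 97 careful forecasters centered near 17,000 cases...
careful = [(17_000, 1.5)] * 97
# ...plus 3 forecasters who enter huge numbers without modeling anything.
careless = [(300_000_000, 1.0)] * 3

with_outliers = tail_report(careful + careless)
without_outliers = tail_report(careful)
for q in (0.5, 0.9, 0.98):
    print(f"q={q}: with {with_outliers[q]:,.0f} / without {without_outliers[q]:,.0f}")
```

In this toy setup the median barely moves, but the 98th percentile jumps by orders of magnitude. That is the signature to look for: a tail that comes entirely from a few forecasters rather than from the rest of the crowd's own distributions.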

Warcasting

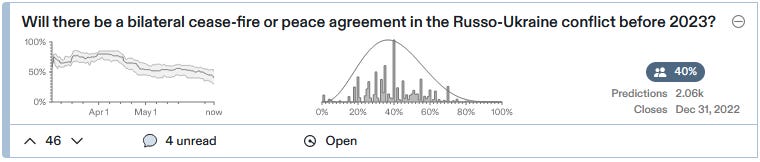

The war in Ukraine has shifted into a new phase, with Russia concentrating in Donetsk and Luhansk, and finally beginning to make good use of its artillery advantage. I’m going to stop following the old Kiev-centric set of questions and replace them with more appropriate ones:

Notice that this continues to rise, from 16% a month ago to 22% today.

See Eikonal’s comment here for some discussion of how this might happen and what territories these might be (and note that we switched from Ukrainian control in the last question to Russian control in this one).

I’m keeping this one in here, but it never changes.

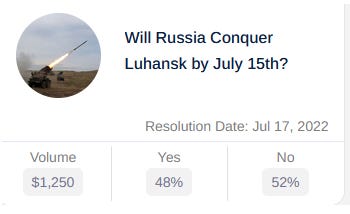

Meanwhile, on Insight Prediction:

$2,000 in liquidity and still 14 percentage points off from Metaculus. Weird.

Musk Vs. Marcus

Elon Musk recently said he thought we might have AGI before 2029, and Gary Marcus said we wouldn’t and offered to bet on it.

It’s an important tradition of AGI discussions that nobody can ever agree on a definition of it and it has to be re-invented every time the topic comes up. Marcus proposed five different things he thought an AI couldn’t do before 2029, such that if it does them, he admits he was wrong and Musk wins the bet (which is purely hypothetical at this point; Musk hasn’t responded). The AI would have to do at least three of:

- Read a novel and answer complicated questions about eg its themes (existing language models can do this with pre-digested novels, eg LaMDA talking about Les Misérables here - I think Marcus means you have to give it a new novel that no human has ever discussed anywhere in its training corpus, and make it do the work itself).
- Watch a movie and answer complicated questions as above
- Work as a cook in a kitchen
- Write bug-free code of 10,000+ lines from natural language specifications
- Convert arbitrary mathematical proofs from natural language to verifiable symbols

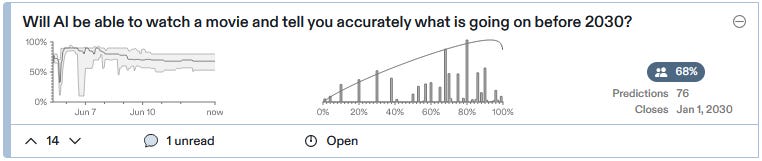

Matthew Barnett kindly added all of these to Metaculus, with the following results:

So who will win the bet?

Metaculus thinks probably Musk - except that he has yet to agree to it. If someone else with a spare $500K wanted to jump in, it looks like in expectation they would make some money.
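Turning five per-task forecasts into a probability that Musk wins means computing P(at least 3 of 5). Here is a minimal sketch that rests on three assumptions: the five tasks are independent (unrealistic), the bet is at even odds, and the per-task probabilities are hypothetical placeholders. To use it for real, swap in the current Metaculus numbers.

```python
def prob_at_least(k, probs):
    """P(at least k successes) for independent events with the given
    probabilities (a Poisson binomial distribution, built up one event
    at a time by dynamic programming)."""
    dist = [1.0]  # dist[j] = P(exactly j successes among events seen so far)
    for p in probs:
        new = [0.0] * (len(dist) + 1)
        for j, mass in enumerate(dist):
            new[j] += mass * (1 - p)   # this task fails
            new[j + 1] += mass * p     # this task succeeds
        dist = new
    return sum(dist[k:])


def expected_profit(p_win, stake):
    """Expected profit of an even-odds bet: win `stake` with probability
    p_win, otherwise lose it."""
    return (2 * p_win - 1) * stake


# Hypothetical per-task success probabilities before 2029 (placeholders, not
# the Metaculus results): novel, movie, cook, 10k-line code, proofs.
task_probs = [0.6, 0.6, 0.3, 0.4, 0.5]
p_musk = prob_at_least(3, task_probs)
print(f"P(Musk wins) = {p_musk:.2f}")
print(f"Expected profit backing Musk with $500K: ${expected_profit(p_musk, 500_000):,.0f}")
```

Independence is the weak point. A system that can read novels can probably also watch movies, so the real tasks are positively correlated. That spreads out the distribution of how many tasks get done, and depending on the numbers it can push P(at least 3) either up or down.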

Related:

Okay, I should have cited this one in my recent debate. It resolves positive if experts believe that either “all” or “most” of the first AGI is based on deep learning (ie the current AI paradigm). I wasn’t able to get Marcus to give me a clear estimate on this one because he was understandably doubtful about our ability to quantify “most”, but I think this backs my position that deep learning is a pretty big deal and a major step on the path to AGI.

This Week On The Markets

1:

Wild ride, huh? It would be a fun troll to do whatever you have to in order to keep this market at exactly 50%. For all I know maybe that’s what Musk is doing.

2:

I hadn’t heard of this guy before, but he’s a billionaire who was a Republican until this year - not the kind of guy I usually think of Angelenos as electing! But the polls all show he’s behind, and PredictIt - which is usually really good for US elections - has him at 31%:

I think Metaculus is just wrong here, and have bet against.

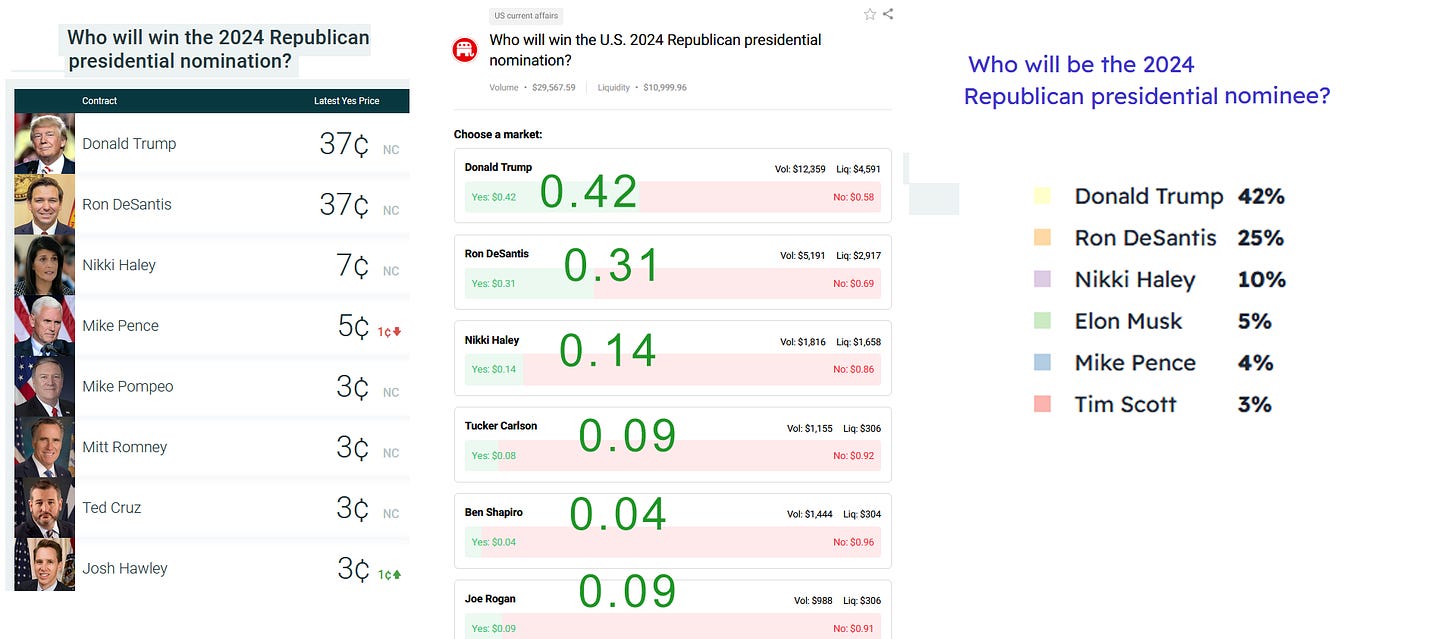

3: Here are the presidential nominee markets on PredictIt ($13,000,000 in liquidity), Polymarket ($30,000), and Manifold (M$3,170):

PredictIt looks good, Manifold looks okay, Polymarket seems to have a long tail of implausible vanity candidates stuck around the 10% level.

4:

This is crazy and over-optimistic, right?

5: Finally, last month I started markets on the Supreme Court leak, all of which got decent volume. “Will the leaker’s identity be known by 2023?” is at 53%, “Will Congress legalize abortion nationally in the next five years?” is at 9%, and “Will Congress ban abortion nationally in the next five years?” is at 7%.

Shorts

1: Andrew Eaddy and Clay Graubard gave a talk about prediction markets at the big crypto conference Consensus in Austin earlier this month.

2: Bloomberg has an article on Kalshi: A New Prediction Market Lets Investors Bet Big on Almost Anything. Great explanation of why regulated prediction markets are so hard, and what kinds of things they can or can’t do. Key quotes: “One day during their time at Y Combinator, Lopes Lara and Mansour say, they cold-called 60 lawyers they’d found on Google. Every one of them said to give up”. Also “the [Division of Market Oversight] was set up to deal with exchanges that might create two or three new markets a year. Kalshi’s business model called for new ones practically every day.”

3: Chris of Karlstack writes about his quest to dectuple his $1000 investment on prediction markets.

4: Gregory Lewis on the EA Forum on something I got wrong a few months ago: rational predictions often update predictably. Related: is Metaculus slow to update? (probably not)
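Here is a standard toy illustration of the point, not necessarily the one Lewis uses. Take a question like "will X happen before the deadline?" and assume a constant daily chance of X. On most days nothing happens, so a rational forecast predictably drifts down. Yet the expected value of tomorrow's forecast still equals today's, so the drift isn't free money. The hazard rate and horizon below are made-up numbers.

```python
def forecast(hazard, days_left):
    """P(the event happens within days_left days), assuming a constant
    daily hazard (probability of the event on any given day)."""
    return 1 - (1 - hazard) ** days_left


def expected_tomorrow(hazard, days_left):
    """Today's expectation of tomorrow's rational forecast."""
    if_event = 1.0                              # event happens: resolves yes
    if_quiet = forecast(hazard, days_left - 1)  # nothing happens: one day fewer
    return hazard * if_event + (1 - hazard) * if_quiet


hazard, days_left = 0.01, 100  # hypothetical: 1% daily chance, 100 days to go
today = forecast(hazard, days_left)
print(f"today: {today:.3f}")
print(f"tomorrow if quiet (99% likely): {forecast(hazard, days_left - 1):.3f}")
print(f"expected tomorrow: {expected_tomorrow(hazard, days_left):.3f}")
```

The likely path goes down a little every day, while the unlikely path jumps to 1. The two balance exactly, which is what makes the forecast calibrated despite its predictable direction.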

5: The Atlantic on Why So Many COVID Predictions Were Wrong.

Yes, this was a boring Mantic Monday post. There’s lots of exciting stuff waiting in the wings, but nobody has gone public yet, so it’ll have to wait for July.