Mantic Monday 8/28/23

Superconductor Autopsy

Sorry guys, LK-99 doesn’t work. The prediction markets have dropped from highs in the 40s down to 5 - 10. It’s over.

What does this tell us about prediction markets? Were they dumb to ever believe at all? Or were they aggregating the evidence effectively, only to update after new evidence came in?

I claim they were dumb. Although the media was running with the “maybe there’s a room-temperature superconductor” story, the smartest physicists I knew were all very skeptical. The markets tracked the level of media hype, not the level of expert opinion. Here’s my evidence:

First, the simplest proof that something was predictable is to have predicted it. Since I know you’ll ask, yes, I bet on the markets at the time - 10,000 mana on Manifold and $100 on Kalshi - and made a nice profit. I would have bet more on Kalshi but it took too long to load the money onto my account.

Second, on Manifold, the biggest NO bets were superforecasters, people on the leaderboards, and rationalist celebrities; the biggest YES bets were randos with none of those qualifications.

NinthCause and SG are Manifold co-founders. Jack, Marcus Abramovich, and Michael Wheatly are Manifold leaderboard record holders. Peter Wildeford is a superforecaster who came near the top in the ACX forecasting contest. Matthew Barnett works in AI forecasting. You all know Eliezer and Zvi. As far as I can tell nobody high up on the YES side is similarly illustrious.

But prediction markets are supposed to ensure you don’t have to resort to name-dropping, so how did this go wrong? I was tempted to blame Manifold-specific factors, like the ability to get starting mana instead of putting skin in the game. But real-money markets Polymarket and Kalshi got approximately the same results:

Polymarket: https://polymarket.com/event/is-the-room-temp-superconductor-real

Kalshi: https://kalshi.com/markets/supercon/roomtemp-superconductor-reported

Both reached the 40s to 50s!

I think there just wasn’t enough smart money to drown out the people who wanted to bet on an exciting thing being true, or who were unduly influenced by a social media environment optimized to keep their attention by convincing them that an exciting thing was true.

I have never claimed prediction markets are always good. All I wrote in the Prediction Market FAQ was that either a prediction market will be good, or you could make lots of free money. In this case, it was the second one. I regret I only made $30.
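To make the "free money" half of that claim concrete, here is a minimal sketch of the arithmetic behind a NO bet. It assumes a simple binary contract that pays $1 per share with no fees or slippage; the function name, the 40% market price, and the 5% "true" probability are illustrative assumptions, not the actual prices or positions from any of these markets.

```python
def no_bet_outcome(stake, yes_price, true_yes_prob):
    """Return (profit_if_no, expected_profit) for buying NO shares.

    Assumes a binary contract paying $1 per winning share,
    with no fees and no price impact (hypothetical simplification).
    """
    no_price = 1.0 - yes_price          # cost of one NO share
    shares = stake / no_price           # how many NO shares the stake buys
    profit_if_no = shares - stake       # each share pays $1 if the answer is NO
    expected_profit = shares * (1.0 - true_yes_prob) - stake
    return profit_if_no, expected_profit


# Hypothetical numbers: market says 40% YES, you believe 5% YES.
profit_if_no, expected = no_bet_outcome(stake=100.0, yes_price=0.40, true_yes_prob=0.05)
print(f"profit if NO: ${profit_if_no:.2f}, expected profit: ${expected:.2f}")
```

If the market price equals your own probability, the expected profit is zero, which is the "market is good" case; the further the two diverge, the more the bet is worth.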

I do hope this situation will improve over time, as over-eager forecasters get burned and dollars flow from dumb money to smarter.

[EDIT: I should have included something about Metaculus here, but it’s confusing. I think the most popular Metaculus market was lower because it had stricter resolution criteria (the first replication had to be positive, instead of any replication) but that otherwise Metaculus raw probabilities mirrored everyone else’s. We don’t know how their algorithmically processed probabilities did yet and I’ll report on that information when I get it.]

Salem/CSPI Tournament Winners

The Salem Center and the Center For The Study Of Partisanship And Ideology, two think tanks associated with right-wing intellectual Richard Hanania, sponsored a prediction market tournament last year. Participants got $1000 in play money to bet on selected markets about current events; winners would be interviewed for a well-paying academic sinecure at one of the think tanks.

Now the tournament is over. Winners have yet to be announced, but unofficially, everyone knows who they are:

First place out of 999 participants is **zubbybadger**. Zubby is a prediction market veteran who was featured in a Washington Monthly article last year for his great track record in political betting (he’s made > $150,000 on PredictIt). Now he works as a “community manager” for Kalshi (I don’t know what this entails).

Second place was Robert from Considerations On Codecrafting. He’s written a detailed reflection on his experience (part one, part two) which is my main source for this section and highly recommended. He describes himself as “having absolutely no experience with prediction markets”.

Third place was Johnny Ten-Numbers, about whom I can find no further information.

You can see the rest of the top 20 at the very bottom of this post.

Reading Robert’s story of his experience, I’m struck by how little of the competition at the top was about predictive accuracy. Everyone in the top 20 was a very accurate predictor (Exactly equally accurate? Hard to tell.) What separated 1st place from 20th, aside from luck, was things like:

- Ability to move fast - both in responding to news, and in taking the other side of bad bets. Several top performers programmed bots to give them an edge here.

- Strategy about where to park your money. Top performers focused on markets that would resolve quickly, so they could invest their winnings in some other market and compound faster. This led to a lot of strategy about when to exit a market, even if you were pretty sure it would keep going further in your direction.

- Competing with other players. If someone was ahead of you, you might want to anticorrelate your bets with theirs. After all, if they won all their bets, they would beat you no matter what. But if they got unlucky, then you might have a chance!

- Predicting other players. The market can remain irrational longer than you can remain solvent. If you want to profit quickly (as above), you might want to predict whether someone who’s lost money on a particular market will double down vs. try to cut their losses, so you know which direction the market will move next.

- Rules-lawyering the resolution criteria, as always.
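The point about parking money in fast-resolving markets is easiest to see with a toy calculation. The sketch below compares rolling a bankroll through many small, quick wins against holding a few slow, larger ones. Only the $1000 starting bankroll comes from the tournament rules; the returns, durations, and the `compound` helper are made-up assumptions for illustration, and the sketch assumes every bet wins.

```python
def compound(bankroll, return_per_market, days_per_market, horizon_days):
    """Bankroll after repeatedly reinvesting everything in back-to-back markets.

    Hypothetical simplification: every market wins, pays the same
    fractional return, and the full bankroll is reinvested each time.
    """
    rounds = horizon_days // days_per_market   # how many markets fit in the horizon
    return bankroll * (1 + return_per_market) ** rounds


# Illustrative numbers only: 5% every 10 days vs. 30% every 150 days, over 300 days.
fast = compound(1000, 0.05, 10, 300)    # 30 rounds of 5%
slow = compound(1000, 0.30, 150, 300)   # 2 rounds of 30%
print(f"fast-resolving: {fast:.0f}, slow-resolving: {slow:.0f}")
```

Under these assumptions the small, quick wins end up well ahead, which is why exiting a market early can make sense even when you expect it to keep moving your way.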

Reading Robert’s logs made me more convinced than ever that the winners are probably brilliant people who deservedly won this 5-dimensional chess game. But only some of their brilliance is concentrated in prediction per se. Seems bad, and makes me think traditional forecasting tournaments beat Salem-style prediction market tournaments at identifying superforecasters.

Congratulations to all winners and participants. Salem still has to decide who gets the research fellowship (I think Robert can win over Richard Hanania by bonding over their shared hobby of writing extremely long things); I’ll report on that when it happens.

Prediction Portfolios

Suppose you think AI’s gonna be really big. You think everyone else is underestimating AI. You don’t have any special knowledge of what, in particular, will be big about it. You just think it’s gonna be really big.

It’s hard to turn that into a prediction market thesis. Will AI win the Mathematics Olympiad in 2024? Maybe math is not the particular thing AI will be good at, or maybe 2024 is too early. Will AI write a best-selling novel? Maybe novel-writing isn’t where AI will shine. Will AI be used in the military? Maybe AI will be great but the military will ban it for political reasons. You’re not sure about any of this. You just think AI is gonna be really big.

Enter prediction portfolios:

You can invest in “AI Advances by 2025”, a collection of 8 different questions about whether AI will move fast or slow.

If you think the Democrats will do well in 2024, but you’re not sure which particular election they’ll do well in, you can bet on “2024: Bullish on Blue”, which combines 205 different predictions about Democrats doing well.

This is a lot like mutual funds in the normal stock market, where you can buy eg the Israel Biotech Fund because you think Israeli biotech is going to be big but you don’t want to bet on any specific company. It seems like a natural development in the prediction economy and I’m glad to see it happening.
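As a rough illustration of why a basket is a better fit for a vague thesis than any single question, the sketch below compares the payout spread of one binary question with an equal-weight basket of eight. It is not a description of how any site actually builds its portfolios: the `basket_stats` helper, the equal weighting, the 50% probabilities, and especially the independence of the questions are all assumptions. Real questions on a shared theme are likely correlated, so the true reduction in spread would be smaller.

```python
from math import sqrt


def basket_stats(probs):
    """Mean and standard deviation of payout per $1 of face value,
    split equally across binary questions with the given YES probabilities.

    Assumes the questions resolve independently (a strong, hypothetical assumption).
    """
    n = len(probs)
    mean = sum(probs) / n
    variance = sum(p * (1 - p) for p in probs) / n ** 2
    return mean, sqrt(variance)


single = basket_stats([0.5])        # all-or-nothing on one question
basket = basket_stats([0.5] * 8)    # same face value spread over eight questions
print(f"single: mean={single[0]:.2f} sd={single[1]:.2f}")
print(f"basket: mean={basket[0]:.2f} sd={basket[1]:.2f}")
```

The expected payout is the same in both cases; what the basket changes is how much the outcome depends on any one question going your way.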

Wingman.WTF

…that’s its real domain name, wingman.wtf. It claims to be a prediction market for whether plane flights will be late.

If I understand the basic idea, it automatically creates a market for every major flight in the next three days, uses an algorithm to calculate delay chances, and then lets people who think they have extra information (not included in the algorithm) bet the probability up or down. It’s all in crypto (using the MATIC token), so it can probably escape regulatory scrutiny at least for a while.

This is actually a cute idea; I think it incentivizes travelers (and maybe airline employees and amateur algorithmic traders) to give the site as much up-to-the-minute information as possible about flight times.

But what’s their game here? This might be useful for travelers, but how does Wingman itself make money? In fact, shouldn’t we expect it to lose money, since it’s algorithmically generating predictions and then encouraging people who know more to bet against them?

I can’t tell. It doesn’t seem to have obvious trading fees, although I haven’t tried using it myself. The most honorable business plan would be to eventually syndicate its data to airlines or travel agents or someone else who cares a lot about flight delays. The least honorable business plan would be any of the many varieties of crypto scam that have been pioneered over the past few decades.

The picture above shows that the biggest markets have between 3 and 30 MATIC in them, which corresponds to $1.50 - $15 at current prices.

This Month In The Markets

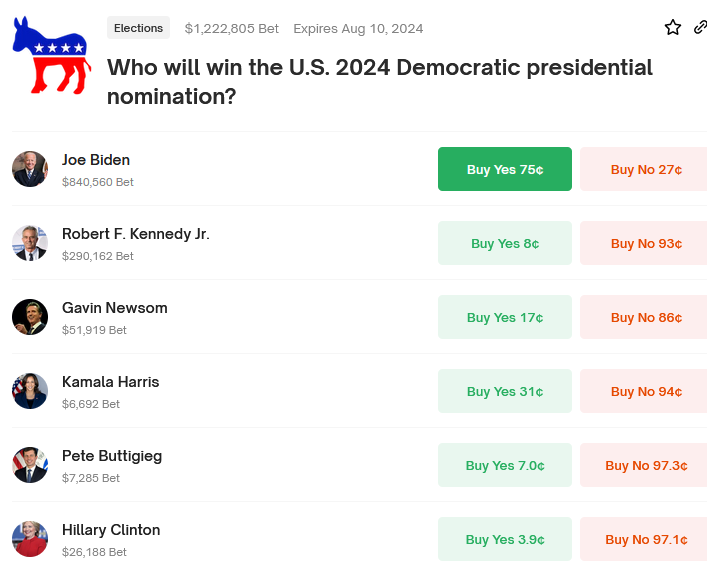

1: We’re probably getting to that point in the cycle when we’re going to have to include these every month, aren’t we?

Source: https://polymarket.com/event/who-will-win-the-us-2024-democratic-presidential-nomination

Source: https://polymarket.com/event/who-will-win-the-us-2024-republican-presidential-nomination

I think Newsom and maybe RFK are overpriced; everything else here seems reasonable.

2: Polymarket has started including some more “whimsical” questions, for example:

Source: https://polymarket.com/event/will-jesus-christ-return-by-august-31

I think this is valuable! We’ve learned that Polymarket (and maybe real money markets in general) is capable of driving a definitely-not-going-to-happen market down to zero (technically $0.02). I’ve never seen that happen before! We’ve also learned that $5,283 worth of people will invest in a market on the Second Coming of Christ.

I assume there’s nothing special about August 31 and Polymarket will have one of these every month. That means if you’re Christian, you have to believe that one of these days there will be a version of this that resolves positive. Imagine how annoying it would be to have bought “NO” that month!

Also: “The resolution source for this market will be a consensus of credible sources.”

3: RIP Yevgeny:

Short Links

1: Not a prediction market per se, but British bookies were offering bets on how much Donald Trump will weigh at his arraignment.

2: This season’s grants from EA charity Long Term Future Fund include $71,000 to Solomon Sia for “providing consultation and recommendations on changes to the US regulatory environment for prediction markets”.

3: Existential risk expert Toby Ord responds to FRI’s Existential Risk Persuasion Tournament disagreeing with him.

4: Jacob Steinhardt reviews the first two years of AI forecasting:

Overall, here is how I would summarize the results: Metaculus and I did the best and were both well-calibrated, with the Metaculus crowd forecast doing slightly better than me. The AI experts from Karger et al. did the next best. They had similar medians to me but were (probably) overconfident in the tails. The superforecasters from Karger et al. did the next best. They (probably) systematically underpredicted progress. The forecasters from Hypermind did the worst. They underpredicted progress significantly on MMLU.

5: The Manifest prediction market conference September 22 - 24 is still going on, now with planned activities and several more Guests Of Honor. I’m especially excited to see Shayne Coplan, CEO of Polymarket, who’s had interesting thoughts the last few times I’ve gotten to speak with him: