Moderation Is Different From Censorship

This is a point I keep seeing people miss in the debate about social media.

Moderation is the normal business activity of ensuring that your customers like using your product. If a customer doesn’t want to receive harassing messages, or to be exposed to disinformation, then a business can provide them the service of a harassment-and-disinformation-free platform.

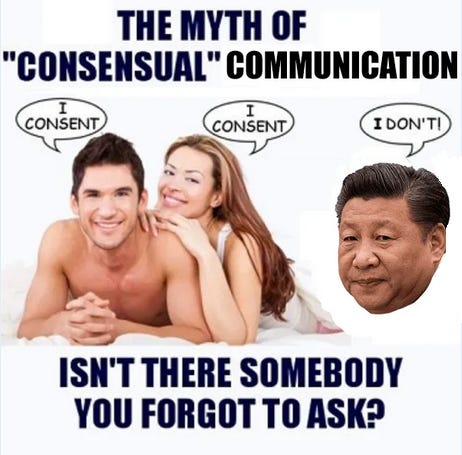

Censorship is the abnormal activity of ensuring that people in power approve of the information on your platform, regardless of what your customers want. If the sender wants to send a message and the receiver wants to receive it, but some third party bans the exchange of information, that’s censorship.

The racket works by pretending these are the same imperative. “Well, lots of people will be unhappy if they see offensive content, so in order to keep the platform safe for those people, we’ve got to remove it for everybody.”

This is not true at all. A minimum viable product for moderation without censorship is for a platform to do exactly what it’s doing now - remove all the same posts, ban all the same accounts - but add an opt-in setting, “see banned posts”. If you personally choose to see harassing and offensive content, you can toggle that setting, and everything bad will reappear. To “ban” an account would mean to prevent the half (or 75%, or 99%) of people who haven’t toggled that setting from seeing it. The people who elected to see banned posts could see them the same as always. Two “banned” accounts could still talk to each other, retweet each other, etc. - as could accounts that hadn’t been banned but had opted into the “see banned posts” setting.
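The visibility rule in this minimum viable product fits in a few lines. Here is a minimal Python sketch, assuming hypothetical `Account` and `Post` types and treating banned accounts as automatically opted in (one reading of how two banned accounts could still talk to each other). It is an illustration of the idea, not any platform’s actual code.

```python
from dataclasses import dataclass


@dataclass
class Account:
    name: str
    banned: bool = False            # set by moderators, exactly as today
    see_banned_posts: bool = False  # hypothetical opt-in toggle, off by default


@dataclass
class Post:
    author: Account


def is_visible(post: Post, viewer: Account) -> bool:
    """A ban hides a post only from viewers who have not opted in."""
    if not post.author.banned:
        return True
    # Assumption: banned accounts count as opted in, so they can still see each other.
    return viewer.see_banned_posts or viewer.banned


alice = Account("alice")
troll = Account("troll", banned=True)
curious = Account("curious", see_banned_posts=True)
other_banned = Account("other", banned=True)

print(is_visible(Post(troll), alice))         # False: default users never see it
print(is_visible(Post(troll), curious))       # True: opted in
print(is_visible(Post(troll), other_banned))  # True: banned accounts can still talk
```

The `banned` flag does only what moderation already does; the one new piece is the `see_banned_posts` check.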

Does this difference seem kind of pointless and trivial? Then imagine applying it to China. If the Chinese government couldn’t censor - only moderate - the world would look completely different. Any Chinese person could get accurate information on Xinjiang, Tiananmen Square, the Shanghai lockdowns, or the top fifty criticisms of Xi Jinping - just by clicking a button on their Weibo profile. Given how much trouble ordinary Chinese people go through to get around censors, probably many of them would click the button, and then they’d have a free information environment. This switch might seem trivial in a well-functioning information ecology, but it prevents the worst abuses, and places a floor on how bad things can get.

And this is just the minimum viable product, the case I’m focusing on to forestall objections like “this would be too hard to implement” or “this would be too complicated for ordinary people to understand”. If you wanted to get fancy, you could have a bunch of filters - harassing content, sexually explicit content, conspiracy theories - and let people toggle which ones they wanted to see vs. avoid. You could let people set them to different levels. Set your anti-Semitism filter to the weakest setting and it will only block literal Nazis with swastikas in their profile pic; set it to Ludicrous, and it will block anyone who isn’t an ordained Orthodox rabbi. Or you could let users choose which fact-checking organization they trusted to flag content as “disinformation”.
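The fancier version can be sketched the same way. This assumes, purely for illustration, that some classifier assigns each post a score from 0 to 1 per category, that each filter level maps to a threshold (the numbers below are invented), and that fact-checkers attach named flags to posts; the level names, thresholds, and checker names are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict, Optional, Set


class Level(IntEnum):
    """Hypothetical filter strengths a user can pick per category."""
    OFF = 0
    WEAK = 1
    DEFAULT = 2
    STRONG = 3
    LUDICROUS = 4


# Invented thresholds: the stronger the level, the lower the score needed to hide a post.
THRESHOLDS = {
    Level.OFF: float("inf"),  # never hide anything in this category
    Level.WEAK: 0.95,         # only the most blatant cases
    Level.DEFAULT: 0.7,
    Level.STRONG: 0.4,
    Level.LUDICROUS: 0.05,    # hides almost anything that scores at all
}


@dataclass
class Post:
    text: str
    # Assumed classifier output: category name -> score in [0, 1].
    scores: Dict[str, float] = field(default_factory=dict)
    # Names of fact-checking organizations that flagged this post as disinformation.
    flagged_by: Set[str] = field(default_factory=set)


@dataclass
class Preferences:
    levels: Dict[str, Level] = field(default_factory=dict)  # unset categories use DEFAULT
    trusted_fact_checker: Optional[str] = None  # None means ignore disinformation flags


def is_hidden(post: Post, prefs: Preferences) -> bool:
    """Hide a post if any category crosses the user's chosen threshold."""
    for category, score in post.scores.items():
        level = prefs.levels.get(category, Level.DEFAULT)
        if score >= THRESHOLDS[level]:
            return True
    # Only the fact-checker this user chose can flag a post out of their feed.
    return prefs.trusted_fact_checker in post.flagged_by


borderline = Post("mildly edgy joke", scores={"antisemitism": 0.1})
print(is_hidden(borderline, Preferences()))  # False under the default level
print(is_hidden(borderline, Preferences(levels={"antisemitism": Level.LUDICROUS})))  # True
```

Two users looking at the same post can get different answers, and neither choice affects what anyone else sees.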

The current level of moderation is a compromise. It makes no one happy. Allowing more personalized settings would make the free speech side happier (since they could speak freely to one another and anyone else interested in hearing what they had to say). And it would make the avoid-harassment side happier, since they could set their filters to stronger than the default setting, and see even less harassment than they do now.

This doesn’t solve all our problems. There are some genuine arguments for true censorship: that is, for blocking speech that both the sender and the receiver want to exchange. For example:

- That it’s a social good to avert the spread of false ideas (and maybe even some true ideas that people can’t handle). People might want to hear these ideas (“What? Joe Biden is a lizard person spy? I hadn’t heard anything about that on the so-called mainstream media!”) but they should not be allowed to.

- That certain acts of communication - like bomb-making instructions and child porn - don’t qualify as “ideas” per se and should not be shared even if we are committed to the free flow of information. Pedophiles may want to share child porn with each other; terrorists may want to share bomb-making instructions with each other - but we shouldn’t let them.

- That people you consider bad (Nazis, Communists, Chinese pro-democracy activists) could discuss their bad ideas with each other, recruit other people, become well-organized, and then overthrow your society.

I’m less sympathetic to these arguments than most people are, but I can’t deny they sometimes have value. They ought to be debated. Understanding the difference between moderation and censorship won’t end that debate.

But my point is: nobody is debating these arguments now, because they don’t have to. Proponents of censorship have decided it’s easier to conflate censorship and moderation, and then argue for moderation. The solution is to de-conflate these two things - preferably by offering moderation too cheap to meter. Then censorship proponents can argue for why we still need censorship even above and beyond this, and everyone can listen to the arguments and decide whether or not they’re worth it.