We're Not Platonists, We've Just Learned The Bitter Lesson

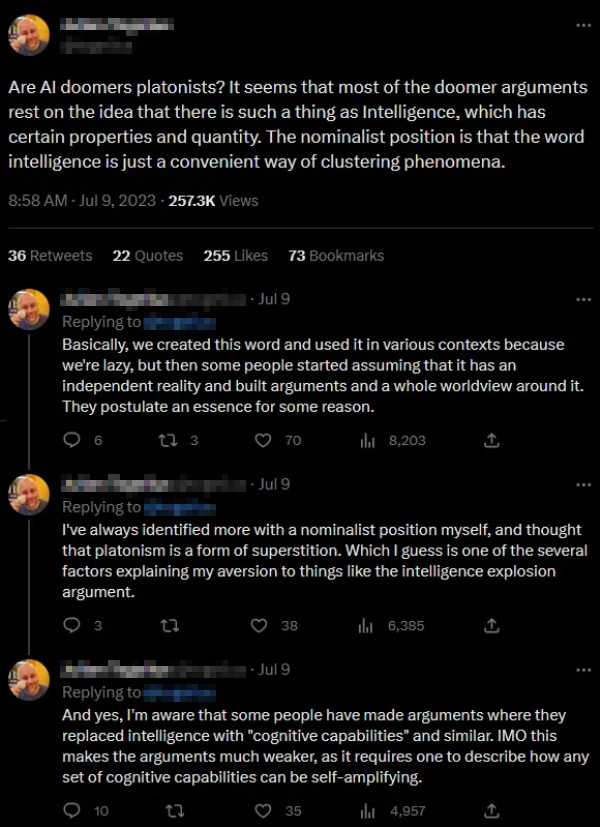

Seen on Twitter:

Intelligence explosion arguments don’t require Platonism. They just require intelligence to exist in the normal fuzzy way that all concepts exist.

First, I’ll describe the normal way concepts exist. I’ll have succeeded if I convince you that claims using the word “intelligence” are coherent and potentially true.

Second, I’ll argue, based on humans and animals, that these coherent-and-potentially-true things are actually true.

Third, I’ll argue that so far this has been the most fruitful way to think about AI, and people who try to think about it differently make worse AIs.

Finally, I’ll argue this is sufficient for ideas of “intelligence explosion” to be coherent.

1: What’s The Normal Way That Concepts Exist?

Concepts are bundles of useful correlations.

Consider the claim “Mike Tyson is stronger than my grandmother”. This doesn’t necessarily rely on a Platonic essence of Strength. It just means things like:

- Mike Tyson could beat my grandmother at lifting free weights
- Mike Tyson could beat my grandmother at using a weight machine
- Mike Tyson could beat my grandmother in boxing
- Mike Tyson could beat my grandmother in wrestling
- Mike Tyson has better bicep strength than my grandmother
- Mike Tyson has better grip strength than my grandmother

Each of these might decompose into even more sub-sub-claims. For example, the last one might decompose into:

- Mike Tyson has better grip strength than my grandmother, tested in such-and-such a way, on such-and-such a date.
- Mike Tyson has better grip strength than my grandmother, tested in some other way, on some other date.

We don’t really distinguish all of these claims in ordinary speech because they’re so closely correlated that they’ll probably all stand or fall together.

Sometimes that’s not true. Is Mike Tyson stronger or weaker than some other very strong person like Arnold Schwarzenegger? Maybe Tyson could win at boxing but Schwarzenegger could lift more weights, or Tyson has better bicep strength but Schwarzenegger has better grip strength. Still, the correlations are high enough that “strength” is a useful shorthand that saves time / energy / cognitive load over always discussing every subclaim individually. In fact, there are a potentially infinite number of subclaims (could Mike Tyson lift an alligator more quickly than Arnold Schwarzenegger while standing on one foot in the Ozark Mountains?) so we have to use shorthands like “strength” to have discussions in finite time.

If somebody learned that actually arm strength was totally uncorrelated with grip strength, and neither was correlated with ability to win fights, then they could fairly argue that “strength” was a worthless concept that should be abandoned. On the opposite side, if somebody tried to argue that Mike Tyson was objectively stronger than Arnold Schwarzenegger, they would be reifying the concept of “strength” too hard, taking it further than it could go. But absent this kind of mistake, “strength” is useful and we should keep talking about it.

“Intelligence” is another useful concept. When I say “Albert Einstein is more intelligent than a toddler”, I mean things like:

- Einstein can do arithmetic better than the toddler
- Einstein can do complicated mathematical word problems better than the toddler
- Einstein can solve riddles better than a toddler
- Einstein can read and comprehend text better than a toddler
- Einstein can learn useful mechanical principles which let him build things faster than a toddler

…and so on.

Just as we can’t objectively answer “who is stronger, Mike Tyson or Arnold Schwarzenegger?”, we can’t necessarily answer “who is smarter, Einstein or Beethoven?”. Einstein is better at physics, Beethoven at composing music. But just as we can answer questions like “Is Mike Tyson stronger than my grandmother”, we can also answer questions like “Is Albert Einstein smarter than a toddler?”

1.1: Why Is A Concept Like Strength Useful?

Why is someone with more arm strength also likely to have more leg strength?

There are lots of specific answers, for example:

- Healthier people, and people in their prime, have more of all kinds of strength, because age introduces cell- and tissue-level errors that make all muscles function less effectively.
- People exposed to lots of testosterone or exogenous steroids are stronger than people without them, because these encourage all muscles to grow.
- Some people are athletes or body-builders, and they’re really into increasing all facets of strength as much as possible, and these people will have more of all facets of strength than people who don’t have this interest.

A more general answer might be that arm muscles are similar enough to leg muscles, and linked enough by being in the same body, that overall we expect their performance to be pretty correlated.

2: Do The Assumptions That Make “Intelligence” A Coherent Concept Hold?

All human intellectual abilities are correlated. This is the famous g, closely related to IQ. People who are good at math are more likely to be good at writing, and vice versa. Just to give an example, SAT verbal scores are correlated 0.72 with SAT math scores.

These links don’t always hold. Some people are brilliant writers, but can’t do math to save their lives. Some people are idiot savants who have low intelligence in most areas but very high skill in one. But that’s what it means to have a correlation of 0.72 instead of 1.00.

It can be surprising both how much everything is correlated and how little everything is correlated. For example, Garry Kasparov, former chess champion, took an IQ test and got 135. You can think of this two different ways:

- “Wow, someone who’s literally the best chess player on earth only has a pretty high (as opposed to fantastically high) IQ, probably lower than some professors at the local university. It’s amazing how poorly-correlated intellectual abilities can be.”
- “Wow, someone who was selected only for being good at chess still has an IQ in the 99th percentile! It’s amazing how well-correlated all intellectual abilities are.”

I think both of these are good takeaways.
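The “99th percentile” figure can be checked in a few lines of Python, assuming the conventional IQ scaling of mean 100 and standard deviation 15 (a particular test may not follow it exactly).

```python
from statistics import NormalDist

# Assumption: IQ scores follow a normal distribution with mean 100 and SD 15,
# the usual scaling for modern IQ tests.
iq = NormalDist(mu=100, sigma=15)

kasparov_percentile = iq.cdf(135)      # share of people scoring at or below 135
share_above = 1 - kasparov_percentile  # share of people scoring above 135
print(f"IQ 135 sits at about the {kasparov_percentile:.1%} mark")  # about 99.0%
print(f"roughly 1 in {1 / share_above:.0f} people score higher")
```

Under that assumption, a score of 135 is about 2.3 standard deviations above the mean, which is where the “99th percentile” reading comes from.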

Compare the 0.72 verbal/math correlation with the 0.76 correlation between dominant-hand and non-dominant-hand grip strength: by that standard, intelligence is a useful concept in the same way strength is.
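One common way to put the two correlations side by side (a standard statistical convention, not something the argument depends on) is the share of variance they imply in common, r²:

$$
0.72^2 \approx 0.52 \quad \text{(SAT verbal vs. math)}, \qquad 0.76^2 \approx 0.58 \quad \text{(dominant vs. non-dominant grip)}
$$

In both cases, roughly half the variation in one measure is shared with the other.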

But also, humans are better at both the SAT verbal and the SAT math than chimps, cows, or fish. And GPT-4 is better at both those tests than GPT-3 or GPT-2. It seems to be a general principle that people, animals, or artifacts who are better at the SAT math are also better at the SAT verbal.

2.1: Why Is A Concept Like Intelligence Useful?

Across different people, skills at different kinds of intellectual tasks are correlated. Partly this is for prosaic reasons, like:

- Some people get better education, and end up more skilled in everything that gets taught in school.
- Some people are healthier, or were better nourished as children, or were exposed to less lead, and that helps all of their different intellectual faculties.
- Some people have better test-taking skills, and that makes them test better on tests of any subject.
- Some people are too young and have worse-developed brains. But other people are too old, and have started getting dementia.

But these skills are also correlated for more fundamental reasons. Variation in IQ during adulthood is about 70% genetic. A lot of this seems to have to do with literal brain size (which is correlated with intelligence at about 0.2) and with myelination of neurons (which is hard to measure but seems important).

These considerations become even more relevant when you start comparing different species. Humans are smarter than chimps in many ways (although not every way!). Likewise, chimps are smarter than cows, cows are smarter than frogs, et cetera. Research by Suzana Herculano-Houzel and others suggests this is pretty directly a function of how many neurons each animal has, with maybe some contribution from how long a childhood they have to learn things.

Some animals are very carefully specialized for certain tasks - for example, birds can navigate very well by instinct. But outside of such specializations, an animal’s skill at a wide range of cognitive abilities depends on how big its brain is, how much of a chance it has to learn things, and how efficient the interconnections between brain regions are.

Just as there are biological reasons why arm strength should be related to leg strength, there are biological reasons why different kinds of intellectual abilities should be related to each other.

3: Intelligence Has Been A Very Fruitful Way To Think About AI

All through the 00s and 10s, belief in “intelligence” was a whipping boy for every sophisticated AI scientist. The way to build hype was to accuse every other AI paradigm except yours of believing in “cognition” or “intelligence”, a mystical substance that could do anything, whereas your new AI paradigm realized that what was really important was emotions / embodiment / metaphor / action / curiosity / ethics. Therefore, it was time to reject the bad old AI paradigms and switch to yours. This announcement was usually accompanied by discussion of how belief in “intelligence” was politically suspect, or born of the believer’s obsession with signaling that they were Very Intelligent themselves. In Rodney Brooks’ famous term, they were “computational bigots”.

When they weren’t proposing new anti-intelligence paradigms, the Responsible People were emphasizing how rather than magical intelligence, AI would need slow, boring work on the nitty gritty of computation - things like linguists applying their expert domain knowledge to natural language processing, or neuroscientists who really understood how the visual cortex worked slowly designing algorithms for robot vision. “Intelligence” was a mirage: the hope that you could skip all of this hard work, just magic in a lump of “intelligence”, and solve problems without understanding them. It was like thinking a car was made of “horsepower”, and as long as you crammed enough horsepower (whatever that was) into the front hood, you didn’t need to worry about complicated stuff like pistons or spark plugs or catalytic converters.

In the middle of a million companies pursuing their revolutionary new paradigms, OpenAI decided to just shrug and try the “giant blob of intelligence” strategy, and it worked. They’re not above gloating a little; when they wanted to prove GPT-4 could understand comics, this was the comic they chose:

Computer scientist Richard Sutton calls this the Bitter Lesson - that extremely clever plans to program “true understanding” into AI always do worse than just adding more compute and training data to your giant compute+training data blob. It’s related to Jelinek’s Law, named after language-processing AI pioneer Frederick Jelinek: “Every time I fire a linguist, the performance of the speech recognizer goes up.” The joke is that having linguists on your team means you’re still trying to hand-code in deep principles of linguistics, instead of just figuring out how to get as big a blob of intelligence as possible and throw language at it. The limit of Jelinek’s Law is OpenAI, who AFAIK didn’t use insights from linguistics at all, and so made an AI that uses language near-perfectly.

Why does this work so well? Because animal intelligence (including human intelligence) is a blob of neural network arranged in a mildly clever way that lets it learn whatever it wants efficiently. The bigger the blob and the cleverer the arrangement, the faster and more thoroughly it learns. Trying to second-guess your blob by hand-coding stuff in doesn’t work as well (it might work for evolution sometimes, like bird migration instincts, but it probably won’t work for you).

The bigger your blob, the cleverer its arrangement, and the more training data you give it, the better it’s likely to perform on a very wide variety of cognitive tasks. This explains why chimps are smarter than cows, why Einstein is smarter than you, and why GPT-4 is smarter than GPT-2. The correlations won’t be perfect, any more than strength correlations are perfect. But they’ll be useful enough to talk about.

I think if you get a very big blob, arrange it very cleverly, and give it lots and lots of training data, you’ll get something that’s smarter than humans in a lot of different ways. In every way? Maybe not: humans aren’t even smarter than chimps in every way. But smarter in enough ways that the human:chimp comparison will feel appropriate.

3.1: This Is Enough For An “Intelligence Explosion” To Be A Coherent Concept

Suppose that someone put steroids in a box with a really tight lid. You need to be very strong to get the lid off the box. But once you do, you can take the steroids.

This is enough to cause a “strength explosion”, in the sense that there’s some amount of strength that lets you become even stronger. It’s not a very interesting example. But that’s an advantage! People tend to get all mystical and philosophical about this stuff. I think the best way to think about it is with the commonest of common sense.

There have already been intelligence explosions. Long ago, humans got smart enough to invent reading and writing, which let them write down training data for each other and become even smarter (in a practical sense; this might or might not have raised their literal IQ, depending on how you think about the Flynn Effect). Later on, we invented iodine supplementation, which let us treat goiter and gain a few IQ points on a population-wide level. There’s nothing mystical about any of this. Once you get smart enough, you can do things that make you even smarter.

AI will be one of those things. We already know that bigger blobs of compute with more training data can do more things in correlated ways - frogs are outclassed by cows, chimps, and humans; toddlers are outclassed by Einstein; GPT-2 is outclassed by GPT-4. At some point we might get a blob which is better than humans at designing chips, and then we can make even bigger blobs of compute, even faster than before.
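The chip-design feedback loop can be made concrete with a deliberately crude toy model. Everything in it is a hypothetical assumption for illustration: “capability” is a single number with human chip-design skill set to 1.0, capability grows a fixed 10% per round while humans design the chips, and once the blob passes human level, the growth rate is also multiplied by how far past human level it is. None of these parameters come from the post or from any forecast.

```python
def rounds_to_reach(target, feedback, start=0.2, base_growth=1.1,
                    human_level=1.0, max_rounds=1000):
    """Return the number of rounds until capability >= target (None if never).

    Toy model with made-up parameters: capability compounds at base_growth
    per round. If feedback is on and capability is at or above human_level
    (the blob out-designs human chip designers), growth is also multiplied
    by capability / human_level.
    """
    capability = start
    for round_number in range(1, max_rounds + 1):
        growth = base_growth
        if feedback and capability >= human_level:
            growth *= capability / human_level  # hypothetical AI-designed speedup
        capability *= growth
        if capability >= target:
            return round_number
    return None

no_feedback = rounds_to_reach(target=10.0, feedback=False)
with_feedback = rounds_to_reach(target=10.0, feedback=True)
print(no_feedback, with_feedback)  # 42 22
```

With these made-up numbers, both runs take the same 17 rounds to reach human level, but the feedback run goes from human level to ten times human level in 5 more rounds instead of 25. The point is only the shape of the curve; how fast a real takeoff might go is the question the post defers to Davidson On Takeoff Speed.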

Ten years ago, I asked people to Beware Isolated Demands For Rigor. When you’re dealing with topics in Far Mode, it’s tempting to get all philosophical - what if matter doesn’t exist? What if everything’s an illusion? Instead, I recommend thinking about future intelligence explosions in Near Mode, in which superintelligent machines are no more philosophically different from ordinary machines than machines that are very, very big.

This only suggests that an intelligence explosion is coherent, not that it will actually happen; see Davidson On Takeoff Speed for an argument why it might.