Why Not Slow AI Progress?

The Broader Fossil Fuel Community

Imagine if oil companies and environmental activists were both considered part of the broader “fossil fuel community”. Exxon and Shell would be “fossil fuel capabilities”; Greenpeace and the Sierra Club would be “fossil fuel safety” - two equally beloved parts of the rich diverse tapestry of fossil fuel-related work. They would all go to the same parties - fossil fuel community parties - and maybe Greta Thunberg would get bored of protesting climate change and become a coal baron.

This is how AI safety works now. AI capabilities - the work of researching bigger and better AI - is poorly differentiated from AI safety - the work of preventing AI from becoming dangerous. Two of the biggest AI safety teams are at DeepMind and OpenAI, ie the two biggest AI capabilities companies. Some labs straddle the line between capabilities and safety research.

Probably the people at DeepMind and OpenAI think this makes sense. Building AIs and aligning AIs could be complementary goals, like building airplanes and preventing the airplanes from crashing. It sounds superficially plausible.

But a lot of people in AI safety believe that unaligned AI could end the world, that we don’t know how to align AI yet, and that our best chance is to delay superintelligent AI until we do know. Actively working on advancing AI seems like the opposite of that plan.

So maybe (the argument goes) we should take a cue from the environmental activists, and be hostile towards AI companies. Nothing violent or illegal - doing violent illegal things is the best way to lose 100% of your support immediately. But maybe glare a little at your friend who goes into AI capabilities research, instead of getting excited about how cool their new project is. Or agitate for government regulation of AI - either because you trust the government to regulate wisely, or because you at least expect them to come up with burdensome rules that hamstring the industry. While there are salient examples of government regulatory failure, some regulations - like the EU’s ban on GMOs or the US restrictions on nuclear power - have effectively stopped their respective industries.

This is the most common question I get on AI safety posts: why isn’t the rationalist / EA / AI safety movement doing this more? It’s a great question, and it’s one that the movement asks itself a lot - see eg What An Actually Pessimistic AI Containment Strategy Looks Like and Slowing Down AI Progress Is An Underexplored Alignment Strategy.

Still, most people aren’t doing this. Why not?

No, We Will Not Stop Hitting Ourselves

First, a history lesson: the best AI capabilities companies got started by AI safety proponents, for AI safety related reasons.

DeepMind was co-founded by Shane Legg, a very early AI safety proponent who did his 2007 PhD thesis on superintelligence:

Shane Legg (@ShaneLegg), Sep 27, 2018: “Ever since the early days of my PhD, AI safety has been a personal passion of mine. I hope this resource will be one of many ways that we continue to engage with the safety-interested community as we collectively build towards safe and beneficial AI.” Linked article: “Building safe artificial intelligence: specification, robustness, and assurance”, by Pedro A. Ortega, Vishal Maini, and the DeepMind safety team (medium.com). https://twitter.com/ShaneLegg/status/1045343927442841602

…and by Demis Hassabis, who has said things like:

Potentially. I always imagine that as we got closer to [superintelligence], the best thing to do might be to pause the pushing of the performance of these systems so that you can analyze down to minute detail exactly and maybe even prove things mathematically about the system so that you know the limits and otherwise of the systems that you’re building. At that point I think all the world’s greatest minds should probably be thinking about this problem. So that was what I would be advocating to you know the Terence Tao’s of this world, the best mathematicians. Actually I’ve even talked to him about this—I know you’re working on the Riemann hypothesis or something which is the best thing in mathematics but actually this is more pressing.

Speculatively, DeepMind hoped to get all the AI talent in one place, led by safety-conscious people, so that they could double-check things at their leisure instead of everyone racing against each other to be first. I don’t know if these high ideals still hold any power; corporate parent Google has been busy stripping them of autonomy.

OpenAI is the company behind GPT-3 and DALL-E. The media announced them as Elon Musk Just Founded A New Company To Make Sure Artificial Intelligence Doesn’t Destroy The World. The same article quotes co-founder and current OpenAI CEO Sam Altman as saying that “AI will probably most likely lead to the end of the world, but in the meantime, there’ll be great companies”. OpenAI’s public statement on its own foundation said:

It’s hard to fathom how much human-level AI could benefit society, and it’s equally hard to imagine how much it could damage society if built or used incorrectly.

But they can’t use the “trying to avoid a race” argument - their creation probably started a race (against DeepMind). So what were they thinking? I still haven’t fully figured this out, but here’s Altman’s own explanation:

Sam Altman (@sama), Jun 25, 2022: “either we figure out how to make AGI go well or we wait for the asteroid to hit” https://twitter.com/sama/status/1540781762241974274

Toby Ord (here standing in for the broader existential-risk-quantifying community) has estimated the risk of extinction-level asteroid impacts as 0.0001% per century, and the risk of extinction from building AI too fast as 10%. So as written, this argument isn’t very good. But you could revise it to be about metaphorical “asteroids” like superplagues or nuclear war. Altman has also expressed concern about AI causing inequality, for example if rich people use it to replace all labor and reap all the gains for themselves. OpenAI was originally founded as a nonprofit in a way that protected against that, so maybe he thought that made it preferable to DeepMind.

(As for Musk, I don’t think we need any explanation deeper than his usual pattern of doing cool-sounding things on impulse, then regretting them at leisure.)

Anthropic was founded when some OpenAI safety researchers struck out on their own to create what they billed as an even-more-safety-conscious alternative. Again, the headline was Anthropic Raises $580 Million For AI Safety And Research (and most of that came from rationalists and effective altruists convinced by their safety-conscious pitch). Again, their announcement included reassuring language - their president said that “We’re focusing on ensuring Anthropic has the culture and governance to continue to responsibly explore and develop safe AI systems as we scale.” Clued-in people disagree about whether Anthropic has already pivoted to building the Torment Nexus, but it’s probably only a matter of time.

Why this history lesson? Partly to highlight the depth of the AI alignment people’s involvement here. It’s not just that they’re not fighting AI companies, it’s that they keep creating them and leading investment in them. But also…

We Need To Talk About Race

The AI policy people’s big fear is a “race dynamic”. Many profit-seeking companies (or power-seeking governments) compete to be first to reach AGI. Although they might invest in some basic safety measures necessary to make AIs work at all, they would have little time to worry about longer-term or more theoretical concerns.

Much more pleasant to contemplate is a single team establishing a clear lead. They could study safety at their own pace, and wouldn’t have to deploy anything until they were confident it would work. This would be a boon not only for existential-risk-prevention, but for algorithmic fairness, transparent decision-making, etc. So it would be nice if the leading teams stayed leading.

Suppose that the alignment community, without thinking it over too closely, started a climate-change-style campaign to shame AI capabilities companies. This would disproportionately harm the companies most capable of feeling shame. Right now, that’s DeepMind, OpenAI, and Anthropic - the companies that have AI safety as part of their culture, cross-pollinate with AI safety proponents, and take our arguments seriously.

If all these places slowed down - either because their leadership saw the light, or because their employees grumbled and quit - that would hand the technological lead to the people just behind them - like Facebook and Salesforce - who care less about safety. So which would we prefer? OpenAI gets superintelligence in 2040? Or Facebook gets superintelligence in 2044?

If OpenAI gets superintelligence in 2040, they’ll probably be willing to try whatever half-baked alignment measures researchers have figured out by then, even if that adds time and expense. Meanwhile, Mark Zuckerberg says that AI will be fine and that warning people about existential risk is “irresponsible”.

(of course, 2040 and 2044 are made-up numbers. Maybe the real numbers are 2030 vs. 2060, and those extra thirty years would mean an alignment technology revolution so comprehensive that even skeptics would pay attention…)

Xi-Risks

Maybe we need to think bigger. Might we be able to get the government to regulate AI so heavily that it slows down all research?

Sometime before AI becomes an existential risk, things will get really crazy, and there might be a growing constituency for doing something. So maybe alignment proponents could join a coalition to slow AI. We would have to wrangle algorithmic fairness proponents, anti-tech conservatives, organized labor, and anti-surveillance civil libertarians into the same tent, but “politics makes strange bedfellows”.

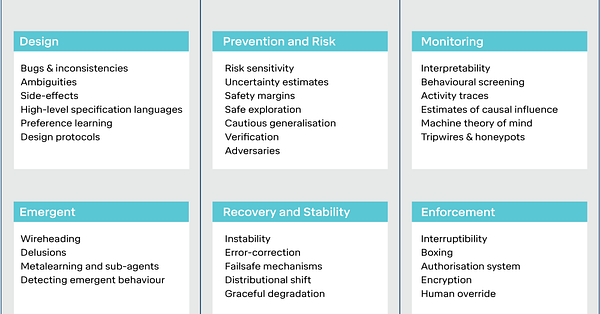

As far as I know these people aren’t part of our conspiracy, and have reached this level of stylishness and correctness entirely on their own. (h/t 80,000 Hours)

Or: what if we got the companies themselves on board? Big Pharma has a symbiotic relationship with the FDA; the biggest corporations hire lobbyists and giant legal departments, then use the government to crush less-savvy startup competitors. Regulations that boiled down to “only these three big tech companies can research AGI, and they have to do it really slowly and carefully” would satisfy the alignment community and delight the three big tech companies. As a sort-of-libertarian, I hate this; as a sort-of-utilitarian, if that’s what it takes then I will swallow my pride and go along.

Or: what about limits on something other than research? AIs need lots of training data (in some cases, the entire Internet). Whenever I post an article here, it’s going into some dataset that will one day help an AI write better toothpaste ads. What if I don’t want it to do that? Privacy advocates are already asking tough questions about data ownership; these kinds of rules could slow AI research without having to attack companies directly. As a sort-of-libertarian, I hate blah blah blah, same story.

Or what about standards? Institutions like NIST (a US government agency) and the IEEE (a professional standards body) play a role in standardizing tech in ways that sometimes help the tech sector advance more productively in unison, e.g. cryptography standards and Wi-Fi standards. Sometimes standards set by one government have caught on and become international defaults. So safety standards might be an area where government backing could actually speed up the deployment of actually-safe-and-useful AI products.

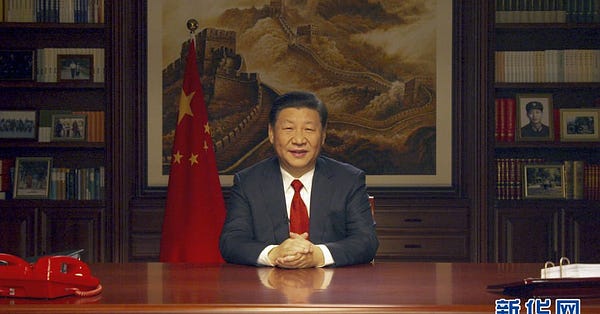

The biggest problem is China. US regulations don’t affect China. China says that AI leadership is a cornerstone of their national security - both as a massive boon to their surveillance state, and because it would boost their national pride if they could beat America in something so cutting-edge.

hardmaru (@hardmaru), Jan 2, 2018: “Xi Jinping reads ‘texts on understanding AI, AR, algorithms, and machine learning, including The Master Algorithm by Pedro Domingos and Augmented by Brett King.’ He also likes to watch Black Mirror to get ideas and inspiration. 🤫 medium.com/shanghaiist/wh…” Linked article: “What’s new on Xi Jinping’s bookshelf this year: He puts us all to shame” (medium.com). https://twitter.com/hardmaru/status/948084227546923009

So the real question is: which would we prefer? OpenAI gets superintelligence in 2040? Or Facebook gets superintelligence in 2044? Or China gets superintelligence in 2048?

Might we be able to strike an agreement with China on AI, much as countries have previously made arms control or climate change agreements? This is . . . not technically prevented by the laws of physics, but it sounds really hard. When I bring this challenge up with AI policy people, they ask “Harder than the technical AI alignment problem?” Okay, fine, you win this one.

Cooperate / Defect?

Another way to think about this question is: right now there’s a sort of alliance between the capabilities companies and the alignment community. Should we keep it, or turn against them when they least expect?

The argument for keeping: the capabilities companies have been very nice to us. Many have AI safety teams. These aren’t just window-dressing. They do good work. The companies fund them well and give them access to the latest models, because the companies like AI safety. If AI safety declares war on AI capabilities, maybe we lose all these things.

But also: AI safety needs AI researchers. Most AI researchers who aren’t already in AI safety are undergrads, or grad students, or academics, or on open-source projects. Right now these people are neutral-to-positive about safety. If we declared war on AI companies - tried as hard as possible to prevent AI research - that might tank our reputation among AI researchers, who generally like AI research. What if they started thinking of us the way that Texas oilmen think of environmentalists - as a hostile faction trying to destroy their way of life? Maybe we could still convert a few to safety research, but we would be facing stronger headwinds.

And also: maybe the companies will work with us on stopgap solutions. I know one team trying to get everyone to agree to a common safety policy around publishing potentially dangerous results. The companies are hearing them out. If we need regulation, and want to go the “company-approved regulatory capture” route, we can work with the companies to help draft it. Demis Hassabis sometimes says that he might slow DeepMind down once they get close to superintelligence; maybe other friendly companies can be convinced to work with him on that.

Demis Hassabis is also the former world champion of the game Diplomacy, which means that trying to backstab him would be like trying to out-shoot Steph Curry.

The argument for betraying: pretty slim, actually. We would damage the companies closest to us, have a harder time damaging companies further away, and lose all of the advantages above.

The Plan Is To Come Up With A Plan

There’s a growing field called AI policy, typified by eg the Oxford-based Center For The Governance Of AI, working on plans for these questions.

Some of the people involved are great, and many are brilliant academics or hard-working civil servants. But whenever I ask them what the plan is, they say things like “I think somebody else is working on the plan,” or “Maybe the plan is a secret.” They have a really tough job, there are lots of reasons to be tight-lipped, and I respect everything they do - but I don’t get the sense that this is a field with many breakthroughs to show.

Jack Clark is a co-founder of Anthropic and used to be Head of Policy at OpenAI. His perspective is somewhat different than mine, but he is very knowledgeable and I recommend his thread (which you can read by clicking on the tweet above). No, there are not 1,602 spicy takes in the thread. The fact that an AI policy leader didn’t consider that his plan would become unworkable in an extreme scenario is probably a metaphor for something.

But if this interests you, you can read 80,000 Hours’ Guide To Working In AI Policy And Strategy and maybe get involved. If you ever figure out the plan, let me know.

Until then, we’re all just happy members of the Broader Fossil Fuel Community.

MIRI (@MIRIBerkeley): “A list from @ESYudkowsky of reasons AGI appears likely to cause an existential catastrophe, and reasons why he thinks the current research community — MIRI included — isn’t succeeding at preventing this from happening.” https://t.co/hW4LRIAZuD