In Continued Defense Of Effective Altruism

I.

Search “effective altruism” on social media right now, and it’s pretty grim.

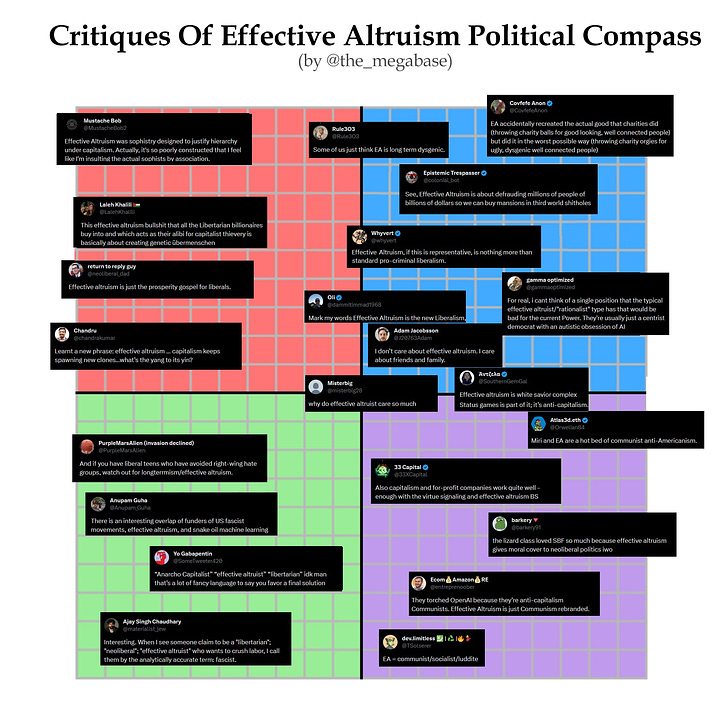

Socialists think we’re sociopathic Randroid money-obsessed Silicon Valley hypercapitalists.

But Silicon Valley thinks we’re all overregulation-loving authoritarian communist bureaucrats.

The right thinks we’re all woke SJW extremists.

But the left thinks we’re all fascist white supremacists.

The anti-AI people think we’re the PR arm of AI companies, helping hype their products by saying they’re superintelligent at this very moment.

But the pro-AI people think we want to ban all AI research forever and nationalize all tech companies.

The hippies think we’re a totalizing ideology so hyper-obsessed with ethics that we never have fun or live normal human lives.

But the zealots think we’re a grift who only pretend to care about charity, while we really spend all of our time feasting in castles.

The bigshots think we’re naive children who fall apart at our first contact with real-world politics.

But the journalists think we’re a sinister conspiracy that has “taken over Washington” and have the whole Democratic Party in our pocket.

Source: https://twitter.com/the_megabase/status/1728771254336036963

The only thing everyone agrees on is that the only two things EAs ever did were “endorse SBF” and “bungle the recent OpenAI corporate coup.”

In other words, there’s never been a better time to become an effective altruist! Get in now, while it’s still unpopular! The times when everyone fawns over us are boring and undignified. It’s only when you’re fighting off the entire world that you feel truly alive.

And I do think the movement is worth fighting for. Here’s a short, very incomplete list of things effective altruism has accomplished in its ~10 years of existence. I’m counting it as an EA accomplishment if EA either provided the funding or did the work; further explanations are in the footnotes. I’m also slightly conflating EA, rationalism, and AI doomerism rather than doing the hard work of teasing them apart:

Global Health And Development:

- Saved about 200,000 lives total, mostly from malaria.[1]
- Treated 25 million cases of chronic parasite infection.[2]
- Given 5 million people access to clean drinking water.[3]
- Supported clinical trials for both the RTS,S malaria vaccine (currently approved!) and the R21/Matrix malaria vaccine (on track for approval).[4]
- Supported additional research into vaccines for syphilis, malaria, helminths, and hepatitis C and E.[5]
- Supported teams giving development economics advice in Ethiopia, India, Rwanda, and around the world.[6]

Animal Welfare:

- Convinced farms to switch 400 million chickens from caged to cage-free.[7]

Things are now slightly better than this in some places! Source: https://www.vox.com/future-perfect/23724740/tyson-chicken-free-range-humanewashing-investigation-animal-cruelty

- Freed 500,000 pigs from tiny crates where they weren’t able to move around.[8]
- Gotten 3,000 companies including Pepsi, Kellogg’s, CVS, and Whole Foods to commit to selling low-cruelty meat.

AI:

- Developed RLHF, a technique for controlling AI output widely considered the key breakthrough behind ChatGPT.[9]
- …and other major AI safety advances, including RLAIF and the foundations of AI interpretability.[10]
- Founded the field of AI safety, and incubated it from nothing up to the point where Geoffrey Hinton, Yoshua Bengio, Demis Hassabis, Sam Altman, Bill Gates, and hundreds of others have endorsed it and urged policymakers to take it seriously.[11]
- Helped convince OpenAI to dedicate 20% of company resources to a team working on aligning future superintelligences.
- Gotten major AI companies including OpenAI to work with ARC Evals and evaluate their models for dangerous behavior before releasing them.

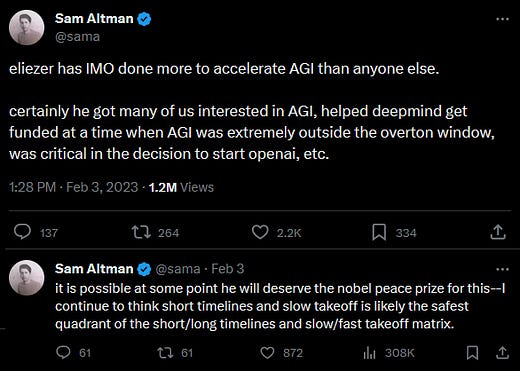

I don’t exactly endorse this Tweet, but it is . . . a thing . . . someone has said.

- Got two seats on the board of OpenAI, held majority control of OpenAI for one wild weekend, and still apparently might have some seats on the board of OpenAI, somehow?[12]
- Helped found, and continue to have majority control of, competing AI startup Anthropic, a $30 billion company widely considered the only group with technology comparable to OpenAI’s.[13]

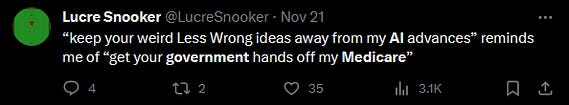

I don’t exactly endorse and so on.

- Become so influential in AI-related legislation that Politico accuses effective altruists of having “[taken] over Washington” and “largely dominating the UK’s efforts to regulate advanced AI”.
- Helped (probably, I have no secret knowledge) the Biden administration pass what they called “the strongest set of actions any government in the world has ever taken on AI safety, security, and trust.”
- Helped the British government create its Frontier AI Taskforce.
- Won the PR war: a recent poll shows that 70% of US voters believe that mitigating extinction risk from AI should be a “global priority”.

Other:

- Helped organize the SecureDNA consortium, which helps DNA synthesis companies figure out what their customers are requesting and avoid accidentally selling bioweapons to terrorists.[14]
- Provided a significant fraction of all funding for DC groups trying to lower the risk of nuclear war.[15]
- Donated a few hundred kidneys.[16]
- Sparked a renaissance in forecasting, including major roles in creating, funding, and/or staffing Metaculus, Manifold Markets, and the Forecasting Research Institute.
- Donated tens of millions of dollars to pandemic preparedness causes years before COVID, and positively influenced some countries’ COVID policies.
- Played a big part in creating the YIMBY movement - I’m as surprised by this one as you are, but see footnote for evidence.[17]

I think other people are probably thinking of this as par for the course - all of these seem like the sort of thing a big movement should be able to do. But I remember when EA was three philosophers and a few weird Bay Area nerds with a blog. It clawed its way up into the kind of movement that could do these sorts of things by having all the virtues it claims to have: dedication, rationality, and (I think) genuine desire to make the world a better place.

II.

Still not impressed? Recently, in the US alone, effective altruists have:

- ended all gun violence, including mass shootings and police shootings
- cured AIDS and melanoma
- prevented a 9-11 scale terrorist attack

Okay. Fine. EA hasn’t, technically, done any of these things.

But it has saved the same number of lives that doing all those things would have.

About 20,000 Americans die yearly of gun violence, 8,000 of melanoma, and 13,000 of AIDS, and 3,000 people died on 9/11. So doing all of these things would save 44,000 lives per year. That roughly matches the ~50,000 lives that effective altruist charities save yearly.[18]
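For anyone who wants to check the arithmetic, here is a minimal sketch in Python. The figures are the rough ones quoted in this post, not fresh data. The variable and function names are made up for illustration, and, like the paragraph above, it treats a 9/11-scale attack as something that happens once a year.

```python
# Approximate yearly US deaths, using the rough figures quoted in the post.
# Assumption carried over from the post: a 9/11-scale attack is counted
# as if it happened once per year.
yearly_deaths = {
    "gun violence": 20_000,
    "melanoma": 8_000,
    "AIDS": 13_000,
    "9/11-scale attack": 3_000,
}

# The post's rough estimate of lives saved per year by EA charities.
EA_LIVES_SAVED_PER_YEAR = 50_000


def total_deaths(deaths):
    """Sum the yearly death counts across all listed causes."""
    return sum(deaths.values())


total = total_deaths(yearly_deaths)
ratio = EA_LIVES_SAVED_PER_YEAR / total

print(f"Combined deaths from the listed causes: {total:,} per year")
print(f"EA estimate as a multiple of that total: {ratio:.2f}")
```

The sum comes to 44,000, so the ~50,000 estimate is a bit higher than the combined total rather than an exact match.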

People aren’t acting like EA has ended gun violence and cured AIDS and all those things. Probably this is because those are exciting popular causes in the news, and saving people in developing countries isn’t. Most people care so little about saving lives in developing countries that effective altruists can save 200,000 of them and people will just not notice. “Oh, all your movement ever does is cause corporate boardroom drama, and maybe other things I’m forgetting right now.”

In a world where people thought saving 200,000 lives mattered as much as whether you caused boardroom drama, we wouldn’t need effective altruism. These skewed priorities are the exact problem that effective altruism exists to solve - or the exact inefficiency that effective altruism exists to exploit, if you prefer that framing. Nobody cares about preventing pandemics, everyone cares about whether SBF was in a polycule or not. Effective altruists will only intersect with the parts of the world that other people care about when we screw up; therefore, everyone will think of us as “those guys who are constantly screwing up, and maybe do other things I’m forgetting right now”.

And I think the screwups are comparatively minor. Allying with a crypto billionaire who turned out to be a scammer. Being part of a board who fired a CEO, then backpedaled after he threatened to destroy the company. These are bad, but I’m not sure they cancel out the effect of saving one life, let alone 200,000 (see #57 here).

(Somebody’s going to accuse me of downplaying the FTX disaster here. I agree FTX was genuinely bad, and I feel bad for the people who lost money. But I think this proves my point: in a year of nonstop commentary about how effective altruism sucked and never accomplished anything and should be judged entirely on the FTX scandal, nobody ever accused those people of downplaying the 200,000 lives saved. The discourse sure does have its priorities.)

Doing things is hard. The more things you do, the more chance that one of your agents goes rogue and you have a scandal. The Democratic Party, the Republican Party, every big company, all major religions, some would say even Sam Altman - they all have past deeds they’re not proud of, or plans that went belly-up. I think EA’s track record of accomplishments vs. scandals is as good as any of them, maybe better. It’s just that in our case, the accomplishments are things nobody except us notices or cares about. Like saving 200,000 lives. Or ending the torture of hundreds of millions of animals. Or preventing future pandemics. Or preparing for superintelligent AI.

But if any of these things do matter to you, you can’t help thinking that all those people on Twitter saying EA has never done anything except lurch from scandal to scandal are morally insane. That’s where I am right now. Effective altruism feels like a tiny precious cluster of people who actually care about whether anyone else lives or dies, in a way unmediated by which newspaper headlines go viral or not. My first, second, and so on to hundredth priorities are protecting this tiny cluster and helping it grow. After that I will grudgingly admit that it sometimes screws up - screws up in a way that is nowhere near as bad as it’s good to end gun violence and cure AIDS and so on - and try to figure out ways to screw up less. But not if it has any risk of killing the goose that lays the golden eggs, or interferes with priorities 1 - 100.

III.

Am I cheating by bringing up the 200,000 lives too many times?

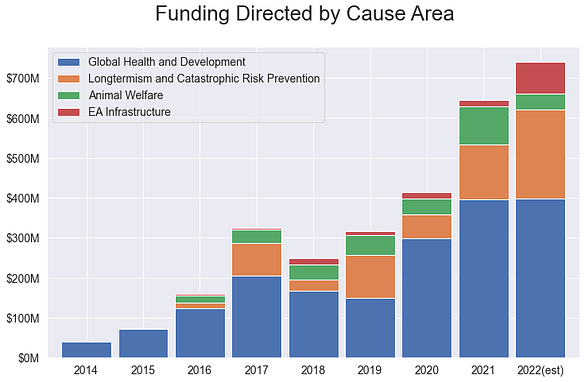

People like to say things like “effective altruism is just a bunch of speculative ideas about animal rights and the far future, the stuff about global health is just a distraction”.

If you really believe that, you should be doubly amazed! We managed to cure AIDS and prevent 9/11 and so on as a distraction, when it wasn’t even the main thing we wanted to be doing! We said “on the way to doing the other things we really care about, let’s stop for a second to cure AIDS and prevent 9/11, it won’t take too much time or resources away from the important stuff”. Why haven’t any of you distraction-free people managed that?

I don’t think any of this is a distraction. The Democratic Party is anti-gun and pro-choice. The gun control isn’t a ruse to trick pro-life people into joining the party, and the abortion rights aren’t a smokescreen to deflect opposition from gun owners. Movements are coalitions. Sometimes this means different people with different priorities choose to work together. But other times it means the same people tend to share both priorities. People who are pro-choice are usually also anti-gun, and vice versa.

And I notice that the tiny handful of people capable of caring about 200,000 people dying of neglected tropical diseases are the same tiny handful of people capable of caring about the next pandemic, or superintelligence, or human extinction. I think the common skill is trying to analyze what causes are important logically. Surprisingly, this comes up with different results than the usual strategy of caring about whatever is trending on Twitter today! Some of those results are broadly popular, like saving 200,000 lives. Other results are broadly unpopular, like worrying about AI risk. But both sets of results come from the same process, and the people who get them are natural allies in a world where most people can’t bring themselves to care about anything that isn’t current front-page news.

Which of these is “the thing EAs really do”? Which are the distractions and bait-and-switches? Source: https://forum.effectivealtruism.org/posts/ZbaDmowkXbTBsxvHn/historical-ea-funding-data

I don’t want the takeaway from this post to be “Sure, you may hate EA because it does a lot of work on AI - but come on, it also does a lot of work on global health and poverty!” I’m proud of all of it. I believe - just like Geoff Hinton, Bill Gates, Sam Altman, Daniel Dennett, etc - that there’s a pretty serious risk of human extinction from AI over the next few decades. I can’t imagine believing this and not thinking it’s important to prepare for. EA has done a great job working on this (see list of accomplishments above), and I think the AI and x-risk people have just as much to be proud of as the global health and animal welfare people.

So my recommendation is that you look carefully into the research on what causes can most benefit the world - near-term, long-term, whatever seems most important to you after some honest study - and try to come up with some way to help them. If you want, take the Giving What We Can pledge to donate 10% of your income, or look at 80,000 Hours to see how you can get an altruistic career.

And whatever you do, do it quick, before the metronome swings back and all of this becomes popular again.

- [1] Source: AMF says 185,000 deaths prevented here; GiveWell’s evaluation makes this number sound credible. AMF reports revenue of $100M/year and GiveWell reports giving them about $90M/year, so I think GiveWell is most of their funding and it makes sense to think of them as primarily an EA project. GiveWell estimates that Malaria Consortium can prevent one death for $5,000, and EA has donated $100M/year for (AFAICT) several years, so 20,000 lives/year times some number of years. I have rounded these two sources combined off to 200,000. As a sanity check, malaria death toll declined from about 1,000,000 to 600,000 between 2000 and 2015 mostly because of bednet programs like these, meaning EA-funded donations in their biggest year were responsible for about 10% of the yearly decline. This doesn’t seem crazy to me given the scale of EA funding compared against all malaria funding.

- [2] Source: this page says about $1 to deworm a child. There are about $50 million worth of grants recorded here, and I’m arbitrarily subtracting half for overhead. As a sanity check, Unlimit Health, a major charity in this field, says it dewormed 39 million people last year (though not necessarily all with EA funding). I think the number I gave above is probably an underestimate. The exact effects of deworming are controversial, see this link for more. Most of the money above went to deworming for schistosomiasis, which might work differently than other parasites. See GiveWell’s analysis here.

- [3] Source: this page. See “Evidence Action says Dispensers for Safe Water is currently reaching four million people in Kenya, Malawi, and Uganda, and this grant will allow them to expand that to 9.5 million.” Cf the charity’s website, which says it costs $1.50 per person/year. GiveWell’s grant is for $64 million, which would check out if the dispensers were expected to last ~10 years.

- [4] RTS,S sources here and here; R21 source here; given this page I think it is about R21.

- [5] See here. I have no idea whether any of this research did, or will ever, pay off.

- [6] Ethiopia source here and here, India source here, Rwanda source here.

- [7] Estimate for number of chickens here. Their numbers add up to 800 million but I am giving EA half-credit because not all organizations involved were EA-affiliated. I’m counting groups like Humane League, Compassion In World Farming, Mercy For Animals, etc as broadly EA-affiliated, and I think it’s generally agreed they’ve been the leaders in these sorts of campaigns.

- [8] Discussion here. That link says 700,000 pigs; this one says 300,000 - 500,000; I have compromised at 500,000. Open Phil was the biggest single donor to Prop 12.

- [9] The original RLHF paper was written by OpenAI’s safety team. At least two of the six authors, including lead author Paul Christiano, are self-identified effective altruists (maybe more, I’m not sure), and the original human feedbackers were random volunteers Paul got from the rationalist and effective altruist communities.

- [10] I recognize at least eight of the authors of the RLAIF paper as EAs, and four members of the interpretability team, including team lead Chris Olah. Overall I think Anthropic’s safety team is pretty EA focused.

- [12] Open Philanthropy Project originally got one seat on the OpenAI board by supporting them when they were still a nonprofit; that later went to Helen Toner. I’m not sure how Tasha McCauley got her seat. Currently the provisional board is Bret Taylor, Adam D’Angelo, and Larry Summers. Summers says he “believe[s] in effective altruism” but doesn’t seem AI-risk-pilled. Adam D’Angelo has never explicitly identified with EA or the AI risk movement but seems to have sided with the EAs in the recent fight so I’m not sure how to count him.

- [13] The founders of Anthropic included several EAs (I can’t tell if CEO Dario Amodei is an EA or not). The original investors included Dustin Moskovitz, Sam Bankman-Fried, Jaan Tallinn, and various EA organizations. Its Wikipedia article says that “Journalists often connect Anthropic with the effective altruism movement”. Anthropic is controlled by a board of trustees, most of whose members are effective altruists.

- [14] See here, Open Philanthropy is first-listed funder. Leader Kevin Esvelt has spoken at EA Global conferences and on 80,000 Hours.

- [15] Total private funding for nuclear strategy is $40 million. Longview Philanthropy has a nuclear policy fund with two managers, which suggests they must be doing enough granting to justify their salaries, probably something in the seven digits. Council on Strategic Risks says Longview gave them a $1.6 million grant, which backs up “somewhere in the seven digits”. Seven digits would mean somewhere between 2.5% and 25% of all nuclear policy funding.

- [16] I admit this one is a wild guess. I know about 5 EAs who have donated a kidney, but I don’t know anywhere close to all EAs. Dylan Matthews says his article inspired between a dozen and a few dozen donations. The staff at the hospital where I donated my kidney seemed well aware of EA and not surprised to hear it was among my reasons for donating, which suggests they get EA donors regularly. There were about 400 nondirected kidney donations in the US per year in 2019, but that number is growing rapidly. Since EA was founded in the early 2010s, there have probably been a total of ~5000. I think it’s reasonable to guess EAs have been between 5 - 10% of those, leading to my estimate of hundreds.

- [17] Open Philanthropy’s Wikipedia page says it was “the first institutional funder for the YIMBY movement”. The Inside Philanthropy website says that “on the national level, Open Philanthropy is one of the few major grantmakers that has offered the YIMBY movement full-throated support.” Open Phil started giving money to YIMBY causes in 2015, and has donated about $5 million, a significant fraction of its total funding.

- [18] Above I say about 200,000 lives total, but that’s heavily skewed towards recent years since the movement has been growing. I got the 50,000 lives number by taking GiveWell’s total money moved for last year and dividing by its cost-effectiveness estimate, but I think it matches well with the 200,000 number above.
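Several of the footnotes above rest on back-of-the-envelope arithmetic, so here is a rough Python sketch that reproduces three of them: the Malaria Consortium estimate, the nuclear funding share, and the kidney guess. Every input is a figure quoted in the footnotes. The variable names are invented for illustration, and reading “seven digits” as a range of $1 million to $10 million is an assumption.

```python
# Malaria footnote: $100M/year donated at roughly $5,000 per death prevented.
donations_per_year = 100_000_000
cost_per_death_prevented = 5_000
lives_per_year = donations_per_year // cost_per_death_prevented

# Nuclear footnote: "seven digits" of grants out of $40M total private funding.
# Assumption: "seven digits" is read as the range $1M to $10M.
total_nuclear_funding = 40_000_000
seven_digits_low, seven_digits_high = 1_000_000, 10_000_000
share_low = seven_digits_low / total_nuclear_funding
share_high = seven_digits_high / total_nuclear_funding

# Kidney footnote: EAs guessed at 5 - 10% of ~5,000 nondirected donations.
total_nondirected_donations = 5_000
ea_kidneys_low = total_nondirected_donations * 5 // 100
ea_kidneys_high = total_nondirected_donations * 10 // 100

print(f"Malaria Consortium: about {lives_per_year:,} lives per year")
print(f"Nuclear funding share: {share_low:.1%} to {share_high:.1%}")
print(f"EA kidney donations: {ea_kidneys_low} to {ea_kidneys_high}")
```

These come out to 20,000 lives per year, 2.5% to 25% of nuclear policy funding, and 250 to 500 kidneys, in line with the numbers given in the footnotes.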