Mantic Monday 2/19/24

Manifold has lots of bots. There’s a Silicon League entirely for bots. Lots of bots make lots of money:

Most of these bots are boring. They’re bots programmed to automatically buy some market once the price gets low enough, or to arbitrage basically-identical markets, or do some other technical finance maneuver.

But you could imagine more interesting bots. Ones that forecast the same way humans forecast. You could imagine a bot based on ChatGPT that asks “What is the probability of a cease-fire in Ukraine this year?” and bets on ChatGPT’s answer.

And by “you could imagine” I mean “there’s now a Humans Vs. Bots tournament on Manifold with an ℳ250,000 prize”.

Let’s see how they’re doing:

All of these bots seem to be making small profits, with GPT in the lead. But what’s this?

The Nermit bot is based on FutureSearch.ai, a new company trying to build an AI-based forecaster. Based on their own internal calculations, they claim success:

How is this[1] possible?

Some studies of superforecasters converge on the same technique: figure out a base rate for some event, then alter it based on the current situation. For example, if you wanted to know the chance of a cease-fire in Ukraine over the next year, you might start by plotting the distribution of war lengths over the past century, then check how many wars that had lasted at least two years had a cease-fire in the third. Then you might adjust a little bit down for factors like “there haven’t been any promising peace talks yet” and “the two sides seem equally balanced”.
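Here is a minimal sketch of the "base rate, then adjust" recipe. It assumes the adjustments are made in log-odds space, which is one common convention and not something the studies necessarily prescribe. The base rate and the size of each shift are hypothetical numbers chosen only to show the mechanics.

```python
import math


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def sigmoid(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def adjust_base_rate(base_rate: float, shifts: list[float]) -> float:
    """Start from a base rate, then nudge it by each shift in log-odds space."""
    return sigmoid(logit(base_rate) + sum(shifts))


# Hypothetical inputs, for illustration only.
base_rate = 0.30       # assumed share of comparable wars with a cease-fire in year three
shifts = [-0.4, -0.2]  # assumed penalties: no promising talks, evenly balanced sides

forecast = adjust_base_rate(base_rate, shifts)
print(f"base rate {base_rate:.2f} -> adjusted forecast {forecast:.2f}")
```

Working in log-odds keeps the result between 0 and 1 no matter how many adjustments pile up, which is the main reason to prefer it over adding and subtracting percentage points.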

FutureSearch’s AI tries to do something similar. It prompts itself with questions like

- “What would be a good reference class for this question?”

- “Okay, now estimate the base rate in that reference class”

- “What are the most important recent news articles about this topic?”

- “How do those suggest the probability might be higher or lower than the base rate?”

- “Putting it all together, what do you think the probability is?”
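A chain of self-prompts like this can be sketched in a few lines. This is only an illustration of the general pattern, not FutureSearch's actual pipeline, which isn't public here. The `ask` callable stands in for whatever language model you have access to, and `run_chain` and `fake_model` are hypothetical names.

```python
from typing import Callable

# The five self-prompts quoted above, in order.
STEPS = [
    "What would be a good reference class for this question?",
    "Okay, now estimate the base rate in that reference class",
    "What are the most important recent news articles about this topic?",
    "How do those suggest the probability might be higher or lower than the base rate?",
    "Putting it all together, what do you think the probability is?",
]


def run_chain(question: str, ask: Callable[[str], str]) -> list[tuple[str, str]]:
    """Ask each step in turn, showing the model everything answered so far."""
    transcript: list[tuple[str, str]] = []
    for step in STEPS:
        history = "\n".join(f"Q: {q}\nA: {a}" for q, a in transcript)
        prompt = f"Forecasting question: {question}\n{history}\nQ: {step}\nA:"
        transcript.append((step, ask(prompt)))
    return transcript


def fake_model(prompt: str) -> str:
    """Stand-in for a real model: reports how many steps it has been shown."""
    return f"(answer {prompt.count('Q: ')})"


transcript = run_chain("Will Prospera have 10,000 residents on 1/1/2026?", fake_model)
for step, answer in transcript:
    print(step, "->", answer)
```

To try this with a real model, replace `fake_model` with a function that sends the prompt to your provider and returns the text of the reply.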

This doesn’t sound to me like it would work. But FutureSearch says it does. They let me test their AI model. I tried four questions:

Will Nikki Haley win the 2028 presidential election? Answer: 10%

Will Israel launch airstrikes on Pakistan in the next year? Answer: 1%

Will an AI be top 3 in Math Olympiad in the next three years? Answer: 25%

Will Prospera have 10,000 residents on 1/1/2026? Answer: 35%

All of these seem like plausible answers except the one on Prospera, which I would have placed more like 5%. Here’s what the AI has to say for itself:

And here’s an example of what it’s doing inside one of these subpoints (in this case, subpoint one about special economic zones with significant foreign investment):

55% of SEZs with significant investment reached population 10,000 after seven years, so that’s its base rate for this method.

On the one hand, this is very impressive. It knows what Prospera is. It correctly identified the main factor threatening its growth (the ongoing legal case with Honduras). It came up with some really interesting base rates, calculated them, and weighted them for relevance. All of this is great.

But I don’t see it doing what would be the obvious first step for me, which is finding the current population of Prospera and how fast it’s growing. Plausibly this is because there are no news stories about this, and you sort of have to estimate it by following how many apartments are being built there and how much is happening (my guess is current population 400, growing to 1,200 by 2026).

I talked to a FutureSearch representative, who agreed that it dropped the ball on this question, but pointed out that if prompted slightly differently, it gives a better answer:

- How many residents will live in Prospera, a new special economic zone in Honduras, on Jan 1, 2026? Answer: 600 (80% confidence interval 100-2,000)

This seems like a good guess (except that my confidence interval would have included zero because there’s a 20%+ chance that it gets shut down).
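To see why a 20% shutdown chance drags the interval down to zero, here is a rough sketch. It makes two assumptions that are mine rather than FutureSearch's: that the AI's 100-2,000 interval describes the world where Prospera survives, and that population in that world is roughly log-normal. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def lognormal_from_interval(lo: float, hi: float, coverage: float = 0.8) -> NormalDist:
    """Normal distribution over log(population) whose central interval is [lo, hi]."""
    z = NormalDist().inv_cdf(0.5 + coverage / 2)
    mu = (math.log(lo) + math.log(hi)) / 2
    sigma = (math.log(hi) - math.log(lo)) / (2 * z)
    return NormalDist(mu, sigma)


def mixture_quantile(q: float, p_zero: float, log_dist: NormalDist) -> float:
    """Quantile when population is 0 with probability p_zero, else log-normal."""
    if q <= p_zero:
        return 0.0
    return math.exp(log_dist.inv_cdf((q - p_zero) / (1 - p_zero)))


survive = lognormal_from_interval(100, 2000)  # the AI's interval, read as "if not shut down"
p_shutdown = 0.20                             # the shutdown chance suggested in the text

low = mixture_quantile(0.10, p_shutdown, survive)
high = mixture_quantile(0.90, p_shutdown, survive)
print(f"80% interval with shutdown risk: {low:.0f} to {high:.0f}")
```

An 80% interval runs from the 10th to the 90th percentile, so once more than 10% of the probability sits at zero, the lower bound has to be zero too.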

So overall its forecasts seem pretty impressive. But I was concerned by its reasoning even in some of the questions it got “right”. For example, the Nikki Haley question tried to get a base rate by asking what percent of elections Haley had won before, and found she had won 71% of them - these were mostly elections for South Carolina governor. You can see what the AI is trying to do - but it’s not going to work. Then it got confused and read a lot of news stories about how she’s currently losing the 2024 presidential election, and seemed to think they were about 2028. So either the AI only got a reasonable probability by coincidence, or it was testing many different strategies, throwing out the useless ones, and updating only on the useful ones, in a way that was kind of opaque to the casual reader.

Still, if the company says it beats most human forecasters, this doesn’t seem totally impossible based on what I’ve seen.

And that would be exciting! An AI that can generate probabilistic forecasts for any question seems like in some way a culmination of the rationalist project. And if you can make something like this work, it doesn’t sound too outlandish that you could apply the same AI to conditional forecasts, or to questions about the past and present (eg whether COVID was a lab leak). I would be most excited if at some point this graduated from its geopolitical focus and was able to answer questions on any topic (eg “what is the chance that Astral Codex Ten gains paid subscribers this year?”), maybe if the questioner gives it links or feeds it some of the appropriate information.

FutureSearch is run by a team formerly from Metaculus, including former Metaculus CTO (and Google internal prediction market veteran) Dan Schwarz. They’re looking for potential clients and/or investors; if you’re interested, email hello@futuresearch.ai.

Vitalik On AI Prediction Markets

Vitalik Buterin, Ethereum-founder-turned-cryptocurrency-public-intellectual, has a blog post on The Promise And Challenge Of Crypto + AI Applications. One of them is a prediction market. He writes:

Prediction markets have been a holy grail of epistemics technology for a long time; I was excited about using prediction markets as an input for governance (“futarchy”) back in 2014, and played around with them extensively in the last election as well as more recently. But so far prediction markets have not taken off too much in practice, and there is a series of commonly given reasons why: the largest participants are often irrational, people with the right knowledge are not willing to take the time and bet unless a lot of money is involved, markets are often thin, etc.

One response to this is to point to ongoing UX improvements in Polymarket or other new prediction markets, and hope that they will succeed where previous iterations have failed. After all, the story goes, people are willing to bet tens of billions on sports, so why wouldn’t people throw in enough money betting on US elections or LK99 that it starts to make sense for the serious players to start coming in? But this argument must contend with the fact that, well, previous iterations have failed to get to this level of scale (at least compared to their proponents’ dreams), and so it seems like you need something new to make prediction markets succeed. And so a different response is to point to one specific feature of prediction market ecosystems that we can expect to see in the 2020s that we did not see in the 2010s: the possibility of ubiquitous participation by AIs.

AIs are willing to work for less than $1 per hour, and have the knowledge of an encyclopedia - and if that’s not enough, they can even be integrated with real-time web search capability. If you make a market, and put up a liquidity subsidy of $50, humans will not care enough to bid, but thousands of AIs will easily swarm all over the question and make the best guess they can. The incentive to do a good job on any one question may be tiny, but the incentive to make an AI that makes good predictions in general may be in the millions. Note that potentially, you don’t even need the humans to adjudicate most questions: you can use a multi-round dispute system similar to Augur or Kleros, where AIs would also be the ones participating in earlier rounds. Humans would only need to respond in those few cases where a series of escalations have taken place and large amounts of money have been committed by both sides.

This is a powerful primitive, because once a “prediction market” can be made to work on such a microscopic scale, you can reuse the “prediction market” primitive for many other kinds of questions:

Is this social media post acceptable under [terms of use]?

What will happen to the price of stock X (eg. see Numerai)

Is this account that is currently messaging me actually Elon Musk?

Is this work submission on an online task marketplace acceptable?

Is the dapp at https://examplefinance.network a scam?

Is 0x1b54....98c3 actually the address of the “Casinu Inu” ERC20 token?

I imagine a very successful version of this as a search engine for the future. Like, FutureSearch (above) is pretty cool, but why should you trust them? How do you know they’re better than their AI competitors? Better than humans? Instead, imagine a search engine where you can pay 0.01 ETH to ask a question like “Will Prospera have a population of at least 10,000 people by 1/1/2026?” Your money goes to subsidize a prediction market, and within a few seconds (!) several competing forecasting bots will make their prediction. Then the search engine will return a result for you, and in 2026 some moderator AI will check the news and auto-resolve the question.

(this would work even better if you could add some humans in the loop who made money by betting against the forecasting bots on the questions where common sense tells you they’re farthest off - but then you’d have to wait human timescales instead of seconds, and pay human wages instead of peanuts)
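For a sense of how a small subsidy could fund a tiny market that bots then trade in, here is a sketch using a logarithmic market scoring rule (LMSR). That mechanism is my assumption: neither Vitalik's post nor this one says which market design such a search engine would use. The $50 figure is the subsidy from Vitalik's passage, and the bot beliefs are hypothetical.

```python
import math


class LMSRMarket:
    """Binary market run by an LMSR market maker funded with a fixed subsidy."""

    def __init__(self, subsidy: float):
        # For a binary LMSR market, the maker's worst-case loss is b * ln(2),
        # so picking b this way caps the loss at the subsidy.
        self.b = subsidy / math.log(2)
        self.q_yes = 0.0  # YES shares sold so far
        self.q_no = 0.0   # NO shares sold so far

    def _cost(self, q_yes: float, q_no: float) -> float:
        # LMSR cost function, shifted by the max for numerical stability.
        m = max(q_yes, q_no)
        return m + self.b * math.log(
            math.exp((q_yes - m) / self.b) + math.exp((q_no - m) / self.b)
        )

    def price_yes(self) -> float:
        return 1.0 / (1.0 + math.exp((self.q_no - self.q_yes) / self.b))

    def move_price_to(self, target: float) -> float:
        """Buy YES or NO shares until the YES price equals target; return the cost."""
        wanted_gap = self.b * math.log(target / (1.0 - target))
        current_gap = self.q_yes - self.q_no
        before = self._cost(self.q_yes, self.q_no)
        if wanted_gap >= current_gap:
            self.q_yes += wanted_gap - current_gap
        else:
            self.q_no += current_gap - wanted_gap
        return self._cost(self.q_yes, self.q_no) - before


market = LMSRMarket(subsidy=50.0)
for belief in [0.10, 0.04, 0.06]:  # hypothetical bots trading in sequence
    paid = market.move_price_to(belief)
    print(f"bot moves price to {market.price_yes():.2f}, paying {paid:.2f}")
```

Each bot here simply trades until the price matches its belief, so the final price is just the last bot's opinion. A real bot would size its bets by bankroll and confidence, and that is where the aggregation would come from.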

And as Vitalik mentions, it doesn’t have to be about the future. It can be about any question you’re not sure about, as long as there’s eventually some way to resolve it.

Does this beat a centralized AI predictor? That depends whether you trust the centralized AI predictor or not! Remember, the biggest advantage of prediction markets isn’t necessarily accuracy, it’s trust and canonicity.

Bet On Love

This was a Manifold promotional event for Valentine’s Day, taking the form of a “prediction market dating show” where six contestants competed to win a date with local celebrity Aella. It was not what I was expecting.

I’m going to include a video embed in a moment, but fair warning: if you already hate any of rationalists, San Francisco, tech, prediction markets, polyamory, betting, love, reality shows, or Aella, this will definitely make you hate them more.

I’m including it in this post because . . . well, you know how sometimes Christian apologists say that the Gospel writers must have been telling the truth, because they included parts of the story that were embarrassing to them and to their fledgling movement? I’m including this video so future historians will know I must be telling the truth about everything:

Still, the production is incredible and everyone involved is really talented. I was especially impressed by the fake CEO Duncan Horst (whose name is a pseudo-Spoonerism for “drunken host”).

I have to admit, as some kind of journalist-adjacent person who writes a monthly column on prediction markets, plus an early funder, I feel like I should be one of the best-qualified people in the world to understand what Manifold is. I’m not sure I do. They’re a prediction market company . . . that also runs a dating site . . . and has a philanthropy arm that moves millions of dollars to charitable causes . . . and puts on some kind of extremely well-done genre-bending reality show slash off-Broadway musical?

My current understanding of what happened: there’s a group called the “postrationalists” who were vaguely inspired by rationalist writings but also think the emphasis on facts is boring and autistic and we need to focus more on creativity/friendship/woo/intuition/vibes. They have a gathering called VibeCamp where they do artsy stuff. Someone from Manifold went to a theater production by a postrat group called “The Classics Department”, liked them, and hired them to make a promotional event for Manifold. They are sort of rationalists and prediction market junkies, but also sort of making fun of rationalists and prediction market junkies. Hence this show.

This Month In The Markets

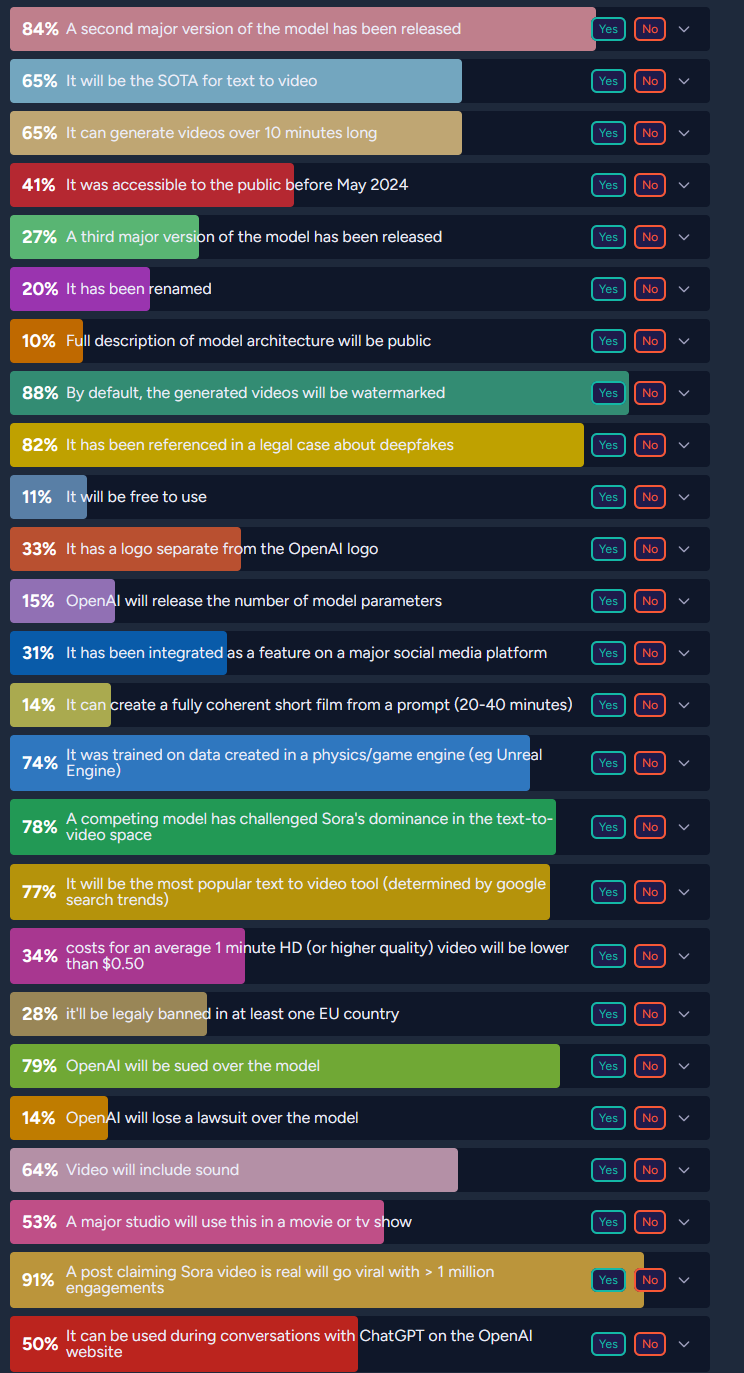

The big AI excitement this month was around OpenAI’s Sora text-to-video engine. Here’s what forecasters thought would be true of it by the end of 2025:

You can see many more (including “It was jailbroken and used to make porn: 54%”) at the link.

And one year ago, I asked Manifold whether we would have a text-to-video AI capable of creating a pretty good full-length motion picture by 2028. Here’s how the release of Sora affected the market:

…it jumped from about 30% to about 40%.

(I don’t know why it went down so much from late 2023 to early 2024.)

Metaculus asks the same question and forecasts that AI will be able to make feature films by 2030:

The dumbest possible way to do this is to ask GPT-4 to write a summary (“write the summary of a plot for a detective mystery story”), then ask it to convert the summary into a 100-point outline, then convert each outline point into one minute of a 100-minute movie, then ask Sora to generate each one-minute block. This wouldn’t work as written now (I don’t think Sora can do sound, and it wouldn’t keep actors and style consistent unless you forced it), but it seems like something that requires incremental improvement rather than a grand breakthrough.

Some politics topics courtesy of Polymarket. Look at those amounts of money!

See here for more information.

This one will only be interesting for other people interested in California’s water situation. Snowpack is a major determinant of how much water eventually makes it into reservoirs for the year and whether we get nagged about drought or not. Here’s the median and past years for context; it looks like this year is a bit low.

Short Links

1: Vox: How A Ragtag Group Of Friends Became The Best At Forecasting World Events. Profile of Samotsvety, the world’s leading forecasting team.

2: TIME: Looking For Love? Let The Market Decide. Profile of Manifold.love

3: Speaking of which, Manifold.love announces their new structure; normal use is free, but for $100 they’ll get you a “guaranteed three dates” by subsidizing a prediction market on who you should go on dates with (if you don’t go on dates with anyone, they’ll refund you the $100).

4: A DAO (a type of crypto organization) claims to be implementing futarchy. It’s less clear what they’re implementing futarchy to do, but I think it’s some kind of trading in Solana (a cryptocurrency).

5: Swift Centre has a forecasting piece on the US elections; spiciest claim is that “replacing Biden won’t help the Democrats”. I notice that this isn’t exactly what the question says - it says the Democrats’ chances are better if Biden is the candidate on Election Day than if he isn’t. But this could be because the Democrats are more likely to replace Biden in a world where things aren’t going their way. I don’t know if this is because they simplified the question in their article, or if this is actually a (slight) mistake.

6: Base Rate Times has a list of the Twitter accounts of ~40 superforecasters and other great predictors.

7: The INFER forecasting platform is now being led by RAND, suggesting that important people affiliated with the government are starting to take more interest.

[1] From FutureSearch:

On our accuracy chart, we should emphasize that these are very preliminary results, and the methodology has two important caveats:

- It compares to current crowd forecasts, so we can score now without waiting for resolutions. It uses historical Metaculus predictions to infer how well humans would do, by computing the average Brier score Metaculus users got for predictions made when the Community Prediction was around a given value. More technically, we draw resolutions from the crowd forecast distribution, and compare the expected value of our Brier score to that of Metaculus users.

- Across Metaculus, Manifold, Good Judgment, Infer, Kalshi, and Polymarket, we only found 55 questions with large crowds on good geopolitics questions about 2024. Also, this eval only scores binary questions for the same reason - we didn’t find enough continuous questions to get statistical power. (Part of the motivation for the Humans vs. Bots tournament was to double our sample size on binary questions, but we haven’t run new evals on them yet.)
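As an illustration of the expected-Brier idea in the first caveat (this is my sketch, not FutureSearch's code): if you treat the crowd forecast as the true probability, you can compute a forecast's expected Brier score before the question resolves. The forecasts below are hypothetical.

```python
def expected_brier(forecast: float, crowd: float) -> float:
    """Expected Brier score of `forecast` if the event happens with probability `crowd`."""
    return crowd * (1.0 - forecast) ** 2 + (1.0 - crowd) * forecast ** 2


# Hypothetical forecasts on three binary questions.
crowd_forecasts = [0.10, 0.35, 0.80]
bot_forecasts = [0.15, 0.30, 0.70]

scores = [expected_brier(f, c) for f, c in zip(bot_forecasts, crowd_forecasts)]
mean_score = sum(scores) / len(scores)

# Score of someone who copies the crowd exactly.
floor = sum(expected_brier(c, c) for c in crowd_forecasts) / len(crowd_forecasts)

print(f"bot expected Brier: {mean_score:.4f}, crowd-copier: {floor:.4f}")
```

Under this scoring, a forecast's expected score works out to c(1 - c) + (f - c)^2, so nobody can beat a forecaster who simply copies the crowd. That would explain why the comparison is against how Metaculus users have historically scored, not against the crowd itself.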